- SnapLogic - Integration Nation

- Designing and Running Pipelines

- Add incoming document to new file row

01-17-2022 06:26 AM

We want to write a document to a new row in a CSV file, but only if the document meets certain requirements.

Is this possible?

This article from a few years back suggests it is not, and I wondered whether there have been any advancements since.

01-20-2022 09:45 AM

Hello, here’s an update.

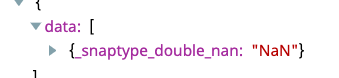

I found this article and used its expression to update the one in my Mapper snap. The resulting data structure in the target path is the desired outcome!

This is great stuff so far. I’ll probably take a break now from testing and have some dinner before recommencing tomorrow. Thank you @j.angelevski for your patience.

01-17-2022 06:36 AM

Hi @NAl,

You can write a new row in the file (I suppose you want “append”), but that’s supported only for the FTP, FTPS and SFTP file protocols.

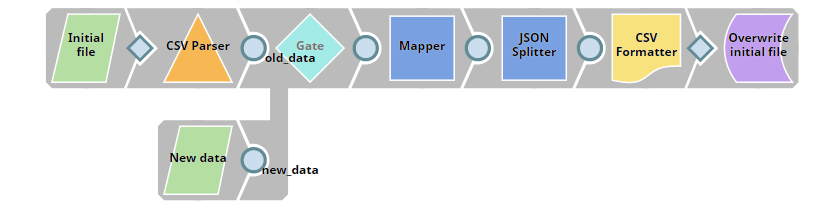

If you want to replicate this sort of logic, you can follow these steps:

- Create an empty CSV file (not required).

- Read this CSV file at the start of the pipeline, even if it’s empty.

- Read the new data from the other source.

- Use a “Gate” snap to combine both inputs.

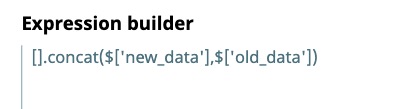

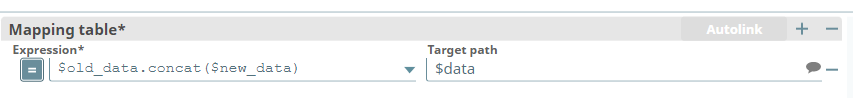

- Use a Mapper to concatenate the old data with the new data, using the following expression:

$old_data.concat($new_data)

- Split the newly concatenated data.

- Overwrite the initial file.

In this case, your new data will always be appended.
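
For readers who want to see the same read, concatenate, overwrite logic outside SnapLogic, here is a minimal Python sketch. It is an analogy to the steps above, not SnapLogic code: the function names `read_rows` and `append_by_rewrite` and the file name `rows.csv` are hypothetical, and it assumes every row has the same columns.

```python
import csv
import os
import tempfile


def read_rows(path):
    """Return the rows already in the CSV file, or [] if it is missing or empty."""
    if not os.path.exists(path) or os.path.getsize(path) == 0:
        return []
    with open(path, newline="") as handle:
        return list(csv.DictReader(handle))


def append_by_rewrite(path, new_rows):
    """Read the old rows, concatenate the new ones, and overwrite the file."""
    old_rows = read_rows(path)
    # Plays the same role as $old_data.concat($new_data) in the Mapper.
    combined = old_rows + new_rows
    if not combined:
        return combined
    # Assumption: all rows share the columns of the first row.
    with open(path, "w", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=list(combined[0].keys()))
        writer.writeheader()
        writer.writerows(combined)
    return combined


if __name__ == "__main__":
    target = os.path.join(tempfile.mkdtemp(), "rows.csv")  # hypothetical file
    append_by_rewrite(target, [{"A": "X", "C": "60"}])
    append_by_rewrite(target, [{"A": "Y", "C": "61"}])
    print(read_rows(target))
```

Because the whole file is rewritten on each run, this approach works with any storage that supports overwrite, at the cost of rereading the existing rows every time.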

The pipeline should look something like this:

01-19-2022 07:34 AM

Thank you for providing a detailed outline. I’ve been away implementing this and looking at the right expressions to use to split/concatenate the data. I’ve managed to put the pipeline together; however, the Mapper snap isn’t returning any data based on the expression provided.

This is a copy of the JSON message before it goes into the Mapper. There is currently no data in the CSV file, hence the empty input0.

    [
      {
        "input0": [],
        "input1": [
          {
            "A": "X",
            "B": "X",
            "C": "60",
            "D": null,
            "E": "X",
            "F": "",
            "G": "X",
            "H": "X",
            "I": "1320159",
            "J": 1430047,
            "K": "X",
            "L": "X",
            "M": "X",
            "N": "X",
            "O": false
          }
        ]
      }
    ]

01-20-2022 12:26 AM

If input0 contains the new data in your pipeline, you should write the following expression:

$input1.concat($input0)

This is working fine for me. Result:

    [
      {
        "A": "X",
        "B": "X",
        "C": "60",
        "D": null,
        "E": "X",
        "F": "",
        "G": "X",
        "H": "X",
        "I": "1320159",
        "J": 1430047,
        "K": "X",
        "L": "X",
        "M": "X",
        "N": "X",
        "O": false
      }
    ]
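
To illustrate why the order of the two inputs did not matter for this particular message, here is a small Python sketch of the concatenation. It only mimics the list behaviour of the expression; the helper `concat` is hypothetical and the row is abbreviated from the message posted earlier in the thread.

```python
def concat(first, second):
    """Mimic first.concat(second) for two lists: elements of first, then second."""
    return list(first) + list(second)


message = {
    "input0": [],  # rows read from the CSV file, which is still empty
    "input1": [{"A": "X", "C": "60", "J": 1430047, "O": False}],  # abbreviated row
}

old_then_new = concat(message["input0"], message["input1"])
new_then_old = concat(message["input1"], message["input0"])

# With one side empty, both orders yield just the single new row.
print(old_then_new == new_then_old)
```

Once the file already holds rows, the order starts to matter: the input placed first ends up at the top of the rewritten file, so the existing rows should come first if the new data is meant to be appended.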

01-20-2022 03:00 AM

Okay so we’re getting there.

I’ve re-configured the Mapper and applied a few other changes but now the pipeline is returning an error:

Stacktrace: Metadata failed to load for ‘insert file location here’

Resolution: Check for URL syntax and file access permission

I read another article on here and wonder if it has something to do with setting up an Account in the File Reader Snap using Basic Auth?

The file is situated in a folder in SnapLogic under my username.
