- SnapLogic - Integration Nation

- Designing and Running Pipelines

- Exporting text file with CSV formatting, but with ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Exporting text file with CSV formatting, but with different numbers of fields

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

03-01-2022 06:23 AM

I’m working on a pipeline that needs to create and send a text file to a third party

That text file contains rows formatted in the same was as a csv file

The file contains a header row, then the data rows, then a footer row

The header and footer rows only have 6 columns, and I cannot send it with more or less columns, because their system will reject it

the data rows have 33 columns, and I cannot send it with more or less columns, because their system will reject it

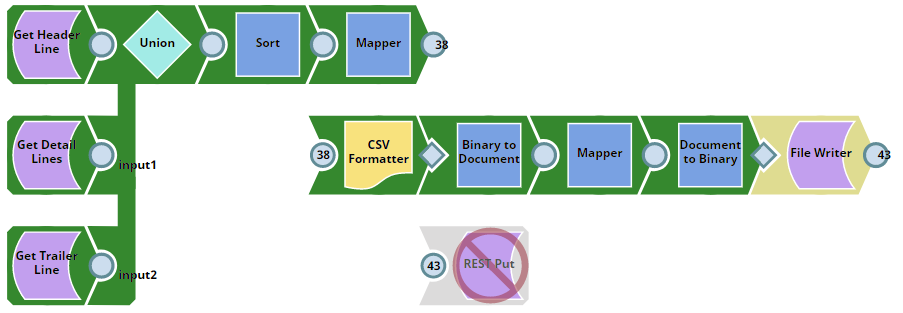

Here is my pipeline:

The first section has 3 SQL Server Execute snaps that get the 3 types of rows, then I union them all together. The select contains 2 fields that cannot be in the resulting text file, and I only need them for the sort snap, to get the rows in the correct order

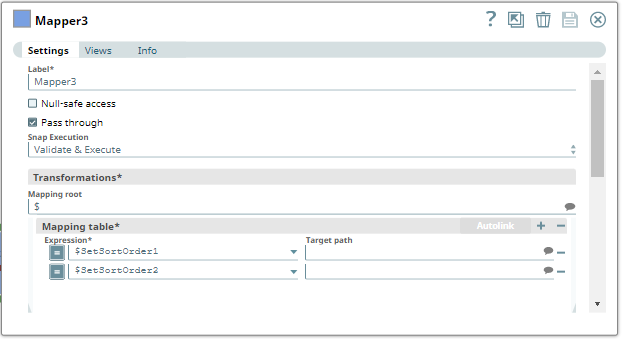

The mapper after to Sort snap is to remove the 2 sort columns

The problem I get here is, if I leave null safe access unchecked, then it freaks out because Col6 to Col33 does not exist in 2 of the rows, and if I check null safe access, it creates 6 to 33 in those 2 rows and adds to many fields to those 2 rows in the text file

Is there any way to:

A) Remove the 2 fields without using a mapper

B) Remove the resulting null valued fields after the mapper

OR

C) Tell the csv formatter to not create the field if it has a null value

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

03-01-2022 06:45 AM

I’ve tried changing the mapper to do this instead:

to strip out the 2 fields, and it does do it, but in the resulting text file the header row has only the 6 records I want, but the footer row has 33, presumably taking the additional columns from the preceding data row

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

03-01-2022 06:51 AM

I’ve checked the JSON document coming out of the mapper, and the final row only has 6 columns, so its the csv formatter or something after that is adding the extra columns to the last row

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

03-01-2022 06:51 AM

@RoyB - here is one solution. This will work well unless the output file becomes very large since it combines the data to memory for output to the file.

Header_Body_Footer_to_File_2022_03_01.slp (12.4 KB)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

03-01-2022 07:07 AM

Thanks for the try, but it didn’t work.

I modified my pipeline to use your method, and it does the same thing.

The incoming document has a format of:

6 columns

33 columns (multiple rows)

6 columns

And then somewhere between the csv formatter and the File Write it turns into

6 columns

33 columns (multiple Rows)

33 columns

- XML FILE Formatting issue in Designing and Running Pipelines

- Export All Pipeline Information in Designing and Running Pipelines

- Different Date standardization into a format in Designing and Running Pipelines

- Remove the cookie from the response of HTTP Get while writing a file in Designing and Running Pipelines

- Parse complex file format and generate sequence number in Designing and Running Pipelines