- SnapLogic - Integration Nation

- Designing and Running Pipelines


How to improve pipeline performance


10-18-2017 11:38 AM

Hi,

I am reading multiple files from an S3 location, and each file contains multiple JSON records. I have to look up an id value in each JSON record and, based on that id, create an individual JSON file for that single record and write it to a specific directory in S3. My pipeline runs fine, but its performance is not good: for example, processing 474 documents containing a total of 78,526 records took 2 hours 30 minutes to write the 78,526 files to another S3 directory, which I believe is too slow.
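For scale, here is the arithmetic behind "too slow". 2 hours 30 minutes is 9,000 seconds, so the run averaged under 9 files per second, or roughly 115 ms per output file. This only restates the numbers in the post; it does not say where the time goes.

```python
from datetime import timedelta


def throughput(files: int, elapsed: timedelta) -> tuple[float, float]:
    """Return (files per second, milliseconds per file) for one run."""
    seconds = elapsed.total_seconds()
    return files / seconds, seconds / files * 1000


rate, ms_per_file = throughput(78_526, timedelta(hours=2, minutes=30))
print(f"{rate:.2f} files/s, {ms_per_file:.0f} ms per file")
# 8.73 files/s, 115 ms per file
```

With one small object written per record, a per-file cost of this size hints that fixed per-file overhead matters more than the size of each record.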

I am attaching my pipelines. If the pipelines can be improved in any way, please let me know. I really appreciate your suggestions.

This is the flow of my pipelines:

pl_psychometric_analysis_S3_child.slp → pl_psychometric_analysis_S3_split_events.slp → pl_psychometric_analysis_S3_write_events.slp
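The three-pipeline flow can be sketched in plain Python to make each stage's job explicit. This is a sketch under stated assumptions, not the attached pipelines. It reads local files instead of S3. It assumes the input files are JSON Lines (one record per line). It also uses a hypothetical output layout of `<sequence_id>/<event_id>.json`. The `record`, `sequence_id` and `event_id` fields mirror the Script snap shown in the reply below.

```python
import json
from pathlib import Path


def split_events(lines):
    """Split stage: wrap each JSON record with the ids used for routing."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        yield {
            "record": record,
            "sequence_id": record["sequence"]["id"],
            "event_id": record["event_id"],
        }


def write_event(doc, out_root):
    """Write stage: one output file per record.

    Hypothetical layout: <out_root>/<sequence_id>/<event_id>.json
    """
    target_dir = Path(out_root) / str(doc["sequence_id"])
    target_dir.mkdir(parents=True, exist_ok=True)
    path = target_dir / f"{doc['event_id']}.json"
    path.write_text(json.dumps(doc["record"]))
    return path


def process_files(in_dir, out_root):
    """Child stage: read every input file and drive the other two stages."""
    written = []
    for src in sorted(Path(in_dir).glob("*.jsonl")):  # assumed JSON Lines input
        with src.open() as fh:
            for doc in split_events(fh):
                written.append(write_event(doc, out_root))
    return written
```

The sketch also shows why the run produces one write per record. Every input record ends up as a separate `write_event` call.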

Thanks

Aditya

pl_psychometric_analysis_S3_child.slp (16.4 KB)

pl_psychometric_analysis_S3_split_events.slp (9.4 KB)

pl_psychometric_analysis_S3_write_events.slp (7.8 KB)


10-18-2017 12:18 PM

Replacing your two Script snaps with Mapper snaps should give you some performance gain. You don't need to use a script to build a JSON string.

The script in the "Split events" pipeline does this:

```python
# Wrap the incoming record and lift the two ids used downstream
data = self.input.next()
newData = {}
newData['record'] = data
newData['sequence_id'] = data['sequence']['id']
newData['event_id'] = data['event_id']
self.output.write(newData)
```

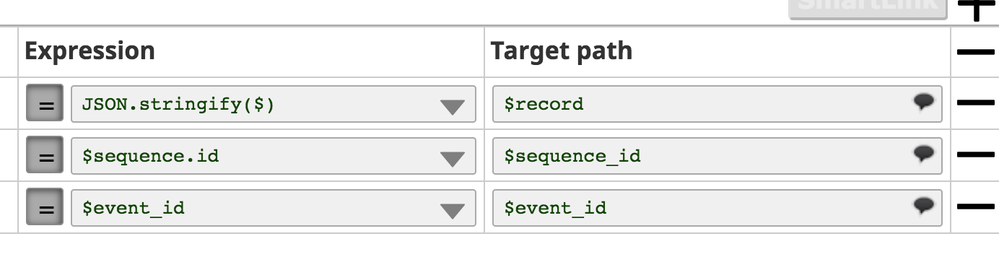

replace with a mapper like
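The "Split events" script is a pure restructuring of each document. Every output field is either the whole input or a direct field lookup on it, which is the kind of mapping a Mapper snap is built for, so no script is needed. The plain-Python function below performs the same transformation. It is only an illustration: `wrap_event` is a hypothetical name, and the sample document is made up.

```python
from typing import Any, Dict


def wrap_event(data: Dict[str, Any]) -> Dict[str, Any]:
    """Same restructuring as the "Split events" script: keep the whole
    record and lift the two routing ids to the top level."""
    return {
        "record": data,
        "sequence_id": data["sequence"]["id"],
        "event_id": data["event_id"],
    }


doc = {"event_id": "e1", "sequence": {"id": "s1"}, "score": 3}
print(wrap_event(doc)["sequence_id"])  # s1
```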

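The script in the "Write events" pipeline is essentially:

```python
# Pass on only the original record, dropping the routing fields
data = self.input.next()
self.output.write(data['record'])
```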

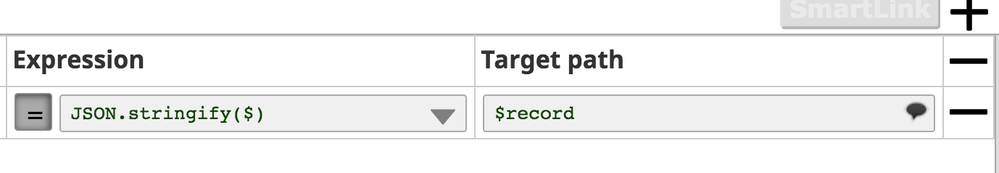

replace with a mapper like
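The "Write events" script simply undoes the wrapping. It forwards the nested `record` object unchanged, so the file writer receives the original JSON record. Here is a plain-Python equivalent for illustration. `unwrap_event` and the sample document are hypothetical.

```python
import json
from typing import Any, Dict


def unwrap_event(doc: Dict[str, Any]) -> Dict[str, Any]:
    """Same as the "Write events" script: return only the original record."""
    return doc["record"]


wrapped = {
    "record": {"event_id": "e1", "sequence": {"id": "s1"}},
    "sequence_id": "s1",
    "event_id": "e1",
}
print(json.dumps(unwrap_event(wrapped)))
# {"event_id": "e1", "sequence": {"id": "s1"}}
```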


10-18-2017 01:43 PM

Thanks, Dwhite.

I'll make that change, but I checked the average execution time of both scripts, and it is very low (0.141 sec).

Also, how should we decide what value to set for the Pool Size property of the Pipeline Execute snap?
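Here is one rough way to reason about Pool Size. It assumes that Pool Size caps how many child pipeline executions run at the same time; check the Pipeline Execute documentation for your release. Per-record S3 writes spend most of their time waiting on the network, so running several at once shortens wall time until something else becomes the bottleneck, such as CPU or memory on the node, or S3 request limits. The sketch below models this in plain Python:

- `ThreadPoolExecutor(max_workers=pool_size)` stands in for the pool.
- `fake_s3_put` is a hypothetical stand-in for one write.
- `estimated_seconds` is an idealized estimate using a hypothetical 0.1 s per write, not a measured figure.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def fake_s3_put(key: str, latency: float = 0.01) -> str:
    """Hypothetical stand-in for one per-record S3 write: it only waits."""
    time.sleep(latency)
    return key


def write_all(keys, pool_size: int):
    """Run writes with at most pool_size in flight.

    Returns (results in input order, peak number of concurrent writes).
    """
    lock = threading.Lock()
    in_flight = 0
    peak = 0

    def task(key):
        nonlocal in_flight, peak
        with lock:
            in_flight += 1
            peak = max(peak, in_flight)
        try:
            return fake_s3_put(key)
        finally:
            with lock:
                in_flight -= 1

    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        results = list(pool.map(task, keys))
    return results, peak


def estimated_seconds(n_files: int, pool_size: int, per_file_s: float) -> float:
    """Idealized wall time: writes run in batches of pool_size, nothing else contends."""
    batches = -(-n_files // pool_size)  # ceiling division
    return batches * per_file_s


for size in (1, 4, 8):
    print(size, f"{estimated_seconds(78_526, size, 0.1):.0f} s")
# 1 7853 s
# 4 1963 s
# 8 982 s
```

Real runs will not scale this cleanly. A practical approach is to raise the pool size in small steps, watch throughput and node resources, and stop when throughput no longer improves.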

Thanks
