- SnapLogic - Integration Nation

- Patterns

- Ingest data from SQL Server (RDBMS) to AWS Cloud S...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Ingest data from SQL Server (RDBMS) to AWS Cloud Storage (S3)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

02-14-2019 03:19 PM

Contributed by @SriramGopal from Agilisium Consulting

The pipeline is designed to fetch records on an incremental basis from any RDBMS system and load to cloud storage (Amazon S3 in this case) with partitioning logic. This use case is applicable to Cloud Data Lake initiatives.

This pipeline also includes, the Date based Data Partitioning at the Storage layer and Data Validation trail between source and target.

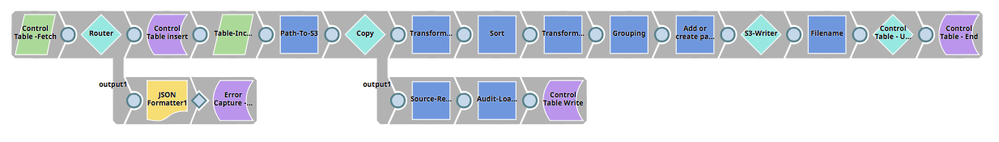

Parent Pipeline

Control Table check : Gets the last run details from Control table.

ETL Process : Fetches the incremental source data based on Control table and loads the data to S3

Control Table write : Updates the latest run data to Control table for tracking

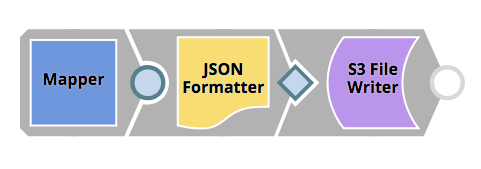

S3 Writer Child Pipeline

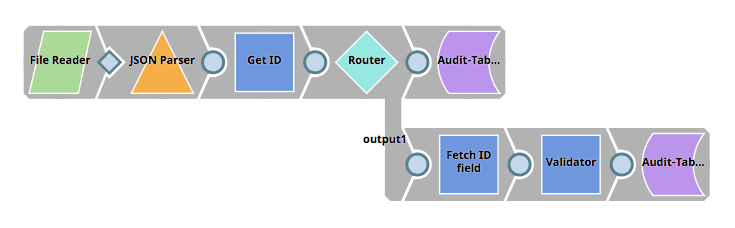

Audit Update Child Pipeline

Control Table - Tracking

The Control table is designed in such a way that it holds the source load type (RDBMS, FTP, API etc.) and the corresponding object name. Each object load will have the load start/end times and the records/ documents processed for every load. The source record fetch count and target table load count is calculated for every run. Based on the status (S-success or F-failure) of the load, automated notifications can be triggered to the technical team.

Control Table Attributes:

- UID – Primary key

- SOURCE_TYPE – Type of Source RDBMS, API, Social Media, FTP etc

- TABLE_NAME – Table name or object name.

- START_DATE – Load start time

- ENDDATE – Load end time

- SRC_REC_COUNT – Source record count

- RGT_REC_COUNT – Target record count

- STATUS – ‘S’ Success and ‘F’ Failed based on the source/ target load

Partitioned Load

For every load, the data gets partitioned automatically based on the transaction timestamp in the storage layer (S3)

Configuration

Sources : RDBMS Database, SQL Server Table

Targets : AWS Storage

Snaps used :

- Parent Pipeline : Sort, File Writer, Mapper, Router, Copy, JSON Formatter, Redshift Insert, Redshift Select, Redshift - Multi Execute, S3 File Writer, S3 File Reader, Aggregate, Pipeline Execute

- S3 Writer Child Pipeline : Mapper, JSON Formatter, S3 File Writer

- Audit Update Child Pipeline : File Reader, JSON Parser, Mapper, Router, Aggregate, Redshift - Multi Execute

Downloads

IM_RDBMS_S3_Inc_load.slp (43.6 KB)

IM_RDBMS_S3_Inc_load_S3writer.slp (12.2 KB)

IM_RDBMS_S3_Inc_load_Audit_update.slp (18.3 KB)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-21-2019 03:49 PM

Do you have a similar pipeline/pattern that Ingests from SQL Server to Snowflake?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-21-2019 10:08 PM

we can able to create it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-21-2019 03:57 PM

I’ll looking to see if we have any in the queue to upload, but I don’t think there is one yet.

You may be able to combine part of this pipeline with a part of Move data from AWS S3 to Snowflake Data Warehouse to get you close to what you want.

Diane Miller

Community Manager

- Using JWT to Authenticate to Box from SnapLogic in Designing and Running Pipelines

- Ingest data from NoSQL Database (MongoDB) into AWS Cloud Storage (S3) in Patterns

- Ingest data from Salesforce application into AWS Cloud Storage (S3) in Patterns

- Ingest data from File Server (FTP/sFTP) into AWS Cloud Storage (S3) in Patterns