- SnapLogic - Integration Nation

- Designing and Running Pipelines

Reading and parsing multiple files from s3 bucket

01-07-2022 09:39 AM

Hey All,

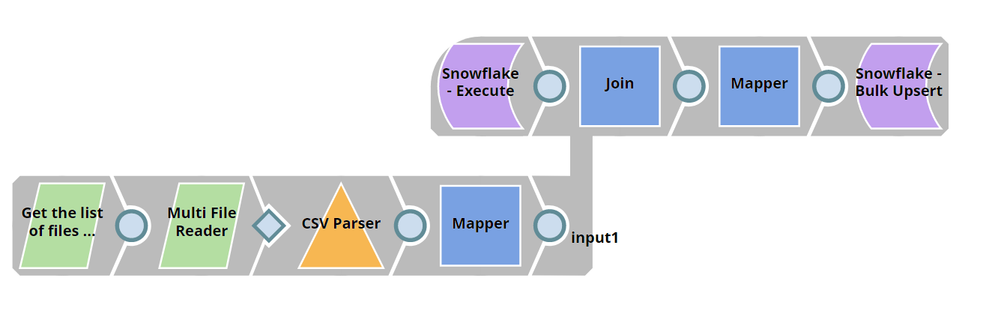

So far I’ve been able to read a single CSV file from my S3 bucket, parse it with the CSV Parser, map it, and load it into my Snowflake DB with some additional join logic. What I need to do now is repeat this for multiple files in the S3 bucket.

For example, if the S3 bucket has 3 files, each file should go through this same process.

I’ve tried using the Multi File Reader, but I’m not sure how to make it go through all the files, or whether it does that automatically. It works when there is only a single file in my S3 bucket; I just need it to work for all the files now. I’m also not sure whether the parsers/mappers will automatically run for multiple files.

My current pipeline setup is shown in the screenshot above.

01-07-2022 10:07 AM

Hey @amubeen,

I think you’re on the right track here. I would start with a Directory Browser to get a list of the files you want to process, then use a File Reader to actually read them. The File Reader will be called once for each file in that directory.

In your case, I think it’s safe to keep everything at the same level, in one pipeline. In other cases, where you need to write out separate files that mirror the way you read them, you should use Pipeline Execute.
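The Directory Browser → File Reader flow can be mimicked outside SnapLogic to show why no explicit loop is needed. The Python below is a minimal sketch under stated assumptions, not SnapLogic code: the in-memory `FAKE_BUCKET` dict stands in for the S3 bucket, and `directory_browser`, `file_reader`, `csv_parser`, `run_pipeline` and the `path` field are hypothetical names chosen to echo the Snaps. Each document the browser emits triggers exactly one read and one parse, and all parsed rows continue down the same stream, which is the single-pipeline case.

```python
import csv
import io
from typing import Dict, Iterator, List

# Hypothetical stand-in for the S3 bucket: object key -> file contents.
FAKE_BUCKET: Dict[str, str] = {
    "input/file1.csv": "id,name\n1,alice\n2,bob\n",
    "input/file2.csv": "id,name\n3,carol\n",
    "input/file3.csv": "id,name\n4,dave\n5,erin\n",
}


def directory_browser(bucket: Dict[str, str], prefix: str) -> Iterator[dict]:
    """Emit one document per file under the prefix (imitates a Directory Browser).

    The 'path' field name is a hypothetical choice for this sketch.
    """
    for key in sorted(bucket):
        if key.startswith(prefix):
            yield {"path": key}


def file_reader(bucket: Dict[str, str], doc: dict) -> str:
    """Read the single file named by one input document (imitates a File Reader)."""
    return bucket[doc["path"]]


def csv_parser(content: str) -> List[dict]:
    """Parse one file's CSV text into one dict per row (imitates a CSV Parser)."""
    return list(csv.DictReader(io.StringIO(content)))


def run_pipeline(bucket: Dict[str, str], prefix: str) -> List[dict]:
    """Read and parse every file under the prefix into one combined row stream."""
    rows: List[dict] = []
    # The reader runs once per document the browser emits, i.e. once per file.
    for doc in directory_browser(bucket, prefix):
        for row in csv_parser(file_reader(bucket, doc)):
            row["source_file"] = doc["path"]  # remember which file the row came from
            rows.append(row)
    return rows


if __name__ == "__main__":
    for row in run_pipeline(FAKE_BUCKET, "input/"):
        print(row)
```

Because every row ends up in one list, this corresponds to loading everything into a single Snowflake table; writing one output per input file is the situation where the reply suggests Pipeline Execute instead.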

Let us know if you need more help,

Regards,

Bojan

01-07-2022 10:20 AM

Would I use a single File Reader in that case, or a Multi File Reader? I wasn’t sure what the difference was.

01-07-2022 10:22 AM

A single File Reader. Because the Directory Browser produces multiple documents, the File Reader will be called once for each input document.

01-10-2022 01:40 AM

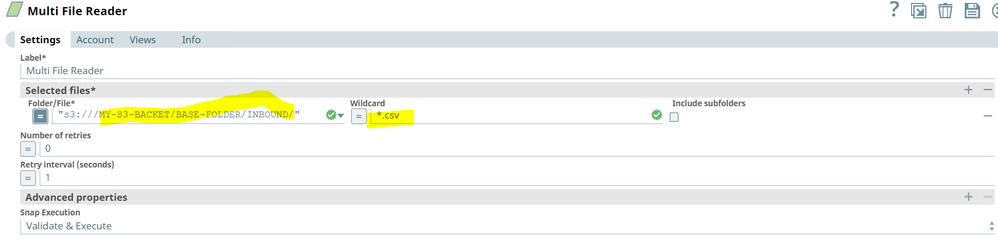

@amubeen hi… if you want to use the Multi File Reader, you can configure it as in the screenshot. But make sure all of your files follow the same format (the same set of headers); otherwise records can be mismatched. You can use a filename convention in the wildcard setting.
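The wildcard and same-header advice can be illustrated with a small Python sketch. It is only an analogy for the Multi File Reader configuration, not how the Snap is implemented: `read_matching_csvs` is a hypothetical helper, a dict again stands in for the bucket, and `fnmatch.fnmatchcase` plays the role of the wildcard. It reads every matching file and refuses to combine files whose header rows differ, since mismatched headers are what would put values under the wrong columns.

```python
import csv
import fnmatch
import io
from typing import Dict, List, Optional


def read_matching_csvs(bucket: Dict[str, str], pattern: str) -> List[dict]:
    """Read every file whose key matches the wildcard, requiring identical headers.

    Hypothetical helper: 'bucket' maps object keys to CSV text.
    """
    # fnmatchcase keeps matching case-sensitive on every OS.
    keys = sorted(k for k in bucket if fnmatch.fnmatchcase(k, pattern))
    expected_header: Optional[List[str]] = None
    rows: List[dict] = []
    for key in keys:
        reader = csv.reader(io.StringIO(bucket[key]))
        header = next(reader, [])
        if expected_header is None:
            expected_header = header
        elif header != expected_header:
            # Differing headers would shift values into the wrong columns downstream.
            raise ValueError(f"{key}: header {header} != {expected_header}")
        # Skip blank lines, which csv.reader returns as empty lists.
        rows.extend(dict(zip(header, values)) for values in reader if values)
    return rows


if __name__ == "__main__":
    # Hypothetical bucket contents; only the sales_*.csv files match the wildcard.
    demo_bucket = {
        "input/sales_jan.csv": "id,amount\n1,10\n2,20\n",
        "input/sales_feb.csv": "id,amount\n3,30\n",
        "input/notes.txt": "not a csv\n",
    }
    for row in read_matching_csvs(demo_bucket, "input/sales_*.csv"):
        print(row)
```

Failing loudly on a header mismatch is a design choice for the sketch; in a real pipeline you might instead route such files to an error view.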
