- SnapLogic - Integration Nation

- Designing and Running Pipelines

Snowflake Bulk Load

01-24-2020 11:04 PM

Hi,

I am trying to use the Snowflake Bulk Load Snap to read data from an external S3 location and load it into a Snowflake table.

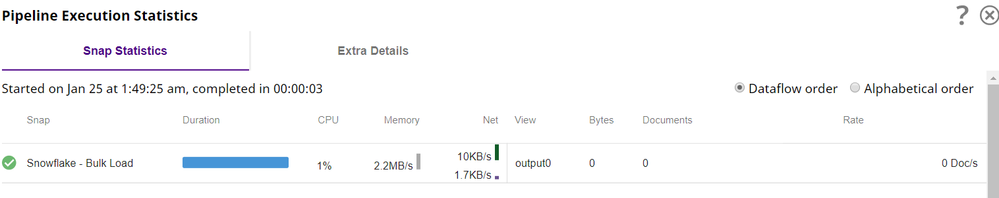

Here is the challenge: in the Snowflake account, I have specified the external bucket name and provided the keys, but when I run the pipeline, I see that 0 records are loaded.

Note: Snowflake runs in one AWS account, and the data is in a different AWS account. If I use the S3 File Reader Snap, I am able to see the data.
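Because Snowflake and the data sit in different AWS accounts, it may help to keep in mind that a bulk load presumably has Snowflake itself read the bucket through a `COPY INTO` statement, whereas the S3 File Reader reads it from SnapLogic. The sketch below assembles such a statement for an external S3 location with key-based credentials. The `build_copy_into` helper, table name, URL, and keys are hypothetical placeholders, and the statement the Snap actually generates may differ.

```python
def quote_literal(value: str) -> str:
    """Wrap a value in single quotes, doubling any embedded quotes (SQL string-literal escaping)."""
    return "'" + value.replace("'", "''") + "'"


def build_copy_into(table: str, s3_url: str, key_id: str, secret_key: str,
                    skip_header: int = 1) -> str:
    """Hypothetical helper: build a COPY INTO that reads CSV files straight from an
    external S3 location using key-based credentials (not necessarily what the Snap emits)."""
    return (
        f"COPY INTO {table}\n"
        f"  FROM {quote_literal(s3_url)}\n"
        f"  CREDENTIALS = (AWS_KEY_ID = {quote_literal(key_id)} "
        f"AWS_SECRET_KEY = {quote_literal(secret_key)})\n"
        f"  FILE_FORMAT = (TYPE = CSV SKIP_HEADER = {skip_header})"
    )


if __name__ == "__main__":
    # Placeholder values only. Since Snowflake performs the read, these keys
    # must grant read access to the bucket in the other AWS account.
    print(build_copy_into("PUBLIC.ORDERS", "s3://example-bucket/orders/",
                          "EXAMPLE_KEY_ID", "EXAMPLE_SECRET"))
```

Running a statement like this by hand in a Snowflake worksheet can help narrow down whether the problem lies in the credentials and files or in the Snap configuration.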

Can anyone help me with this?

Error:

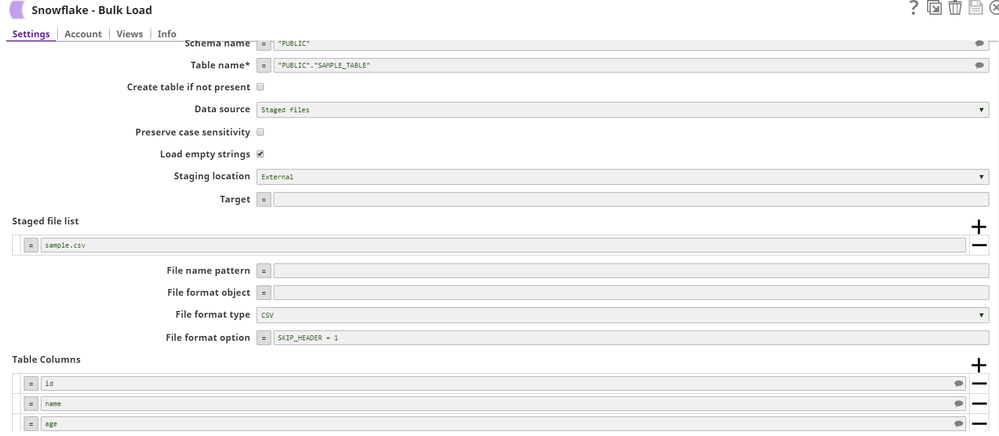

Bulk Load Snap settings:

02-04-2020 03:00 PM

@venkat

I would be interested to see whether any commands are executed in the Snowflake History console after the Bulk Load Snap runs. I understand that 0 records are loaded but, judging from your screenshot, the pipeline did not fail; let me know if that is the case.

I would also be interested to see whether you can use the S3 File Reader as an upstream Snap to the Snowflake Bulk Load Snap, with Input view as the Data Source. This second option will be very slow, but it will be good to know whether data issues are involved.

02-04-2020 03:19 PM

Yes, the pipeline ran successfully, but the data is not loaded. I am able to load data into the table using the S3 File Reader followed by the Snowflake Insert Snap, which I am trying to avoid.

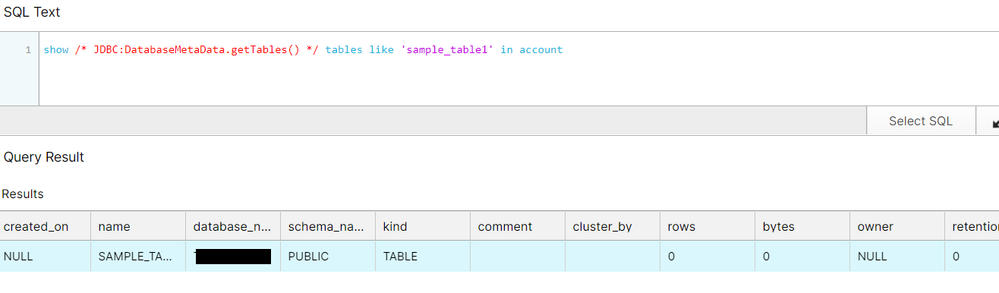

As per the Snowflake History:

02-04-2020 03:57 PM

After reading the data with the S3 File Reader, you can use the Snowflake Bulk Load Snap instead of the Snowflake Insert Snap and select Input view as the Data Source. I would like to know whether the Bulk Load Snap is able to process that data.

It seems the History doesn't contain any bulk API executions ("COPY INTO"). Can you attach your pipelines after removing the sensitive data?
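To check for those executions yourself, one option is to pull recent queries from Snowflake's `INFORMATION_SCHEMA.QUERY_HISTORY` table function and keep only the `COPY INTO` statements. The sketch below only does the filtering step; it assumes the rows arrive as dictionaries keyed by upper-case column names (for example from a dict-style cursor), and the sample rows and `copy_into_statements` helper are hypothetical.

```python
import re

# Query to run in Snowflake (or through any client) to fetch recent statements.
HISTORY_SQL = (
    "SELECT query_text, start_time "
    "FROM TABLE(INFORMATION_SCHEMA.QUERY_HISTORY()) "
    "ORDER BY start_time DESC"
)

# Matches statements that begin with COPY INTO, ignoring case and leading whitespace.
COPY_INTO = re.compile(r"^\s*copy\s+into\b", re.IGNORECASE)


def copy_into_statements(history_rows):
    """Return the QUERY_TEXT of every history row that is a COPY INTO statement."""
    return [
        row["QUERY_TEXT"]
        for row in history_rows
        if COPY_INTO.match(row.get("QUERY_TEXT") or "")  # None-safe
    ]


if __name__ == "__main__":
    sample = [  # made-up rows for illustration
        {"QUERY_TEXT": "select current_warehouse()"},
        {"QUERY_TEXT": "  copy into ORDERS from 's3://example-bucket/orders/'"},
        {"QUERY_TEXT": None},
    ]
    print(copy_into_statements(sample))
```

An empty result right after the Snap runs would be consistent with the History screenshot: no bulk load statement was issued under the role being inspected.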

02-04-2020 04:08 PM

I took a look at the pipeline. The root cause of the issue seems to be the case sensitivity of the column headers. Can you sync up with the support contact, Eric, on this?
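Snowflake stores unquoted identifiers in upper case, so a file header such as `order_id` is not equal to a column named `ORDER_ID` when names are compared exactly. Assuming the load maps file headers to table columns by exact name (the reply above only says case sensitivity seems to be the root cause), a quick check like the sketch below flags headers that match a column only when case is ignored. The `header_mismatches` helper and the sample data are hypothetical.

```python
import csv
import io


def header_mismatches(csv_text, table_columns):
    """Compare a CSV header row with table column names.

    Returns {header: column} for headers that match a column only when case is
    ignored, and {header: None} for headers with no counterpart at all.
    Exact, case-sensitive matches are omitted.
    """
    header = next(csv.reader(io.StringIO(csv_text)))
    exact = set(table_columns)
    by_upper = {col.upper(): col for col in table_columns}
    report = {}
    for name in header:
        if name in exact:
            continue  # exact match: nothing to report
        report[name] = by_upper.get(name.upper())  # None when no column matches
    return report


if __name__ == "__main__":
    data = "order_id,Amount,NOTE_TEXT,extra\n1,9.99,hello,x\n"
    print(header_mismatches(data, ["ORDER_ID", "AMOUNT", "NOTE_TEXT"]))
```

If this reports case-only matches, upper-casing the header row, or defining the table columns as quoted identifiers with the same case as the file, would be two ways to line them up.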
