Kaplan: Centralizing Data in a Redshift Data Warehouse

10-25-2022 05:13 PM

What were the underlying reasons or business implications for the need to automate business processes?

Kaplan’s businesses provide individuals, educational institutions, and businesses a broad array of services, supporting our students and partners to meet their diverse and evolving needs throughout their educational and professional journeys. Our Kaplan culture empowers people to achieve their goals. Committed to fostering a culture of learning, Kaplan is changing the face of education.

To support Kaplan’s changing business data needs, we were looking for a platform that could help us ingest data into our Redshift Data Warehouse as quickly as possible. These were the challenges that drove the need to automate:

- Staff wrote custom scripts to pull data from source systems, and each script followed a different style depending on the developer who wrote it. There were no standards.

- Debugging and understanding the code was difficult. Staff hours were spent mostly on understanding the code rather than on development.

- The business had to wait at least 3 weeks to see the data it wanted.

- Users also wanted control over when data pipelines run, and we had no way to give them an interface for calling the pipelines.

- Data update requirements ranged from hourly to daily, weekly, and monthly schedules, and had to be met as quickly as possible.

- No documentation of the process.

- Delays in time to completion.

- Each team duplicated the process of ingesting the same data, leading to erroneous results.

Describe your automation use case(s) and business process(es).

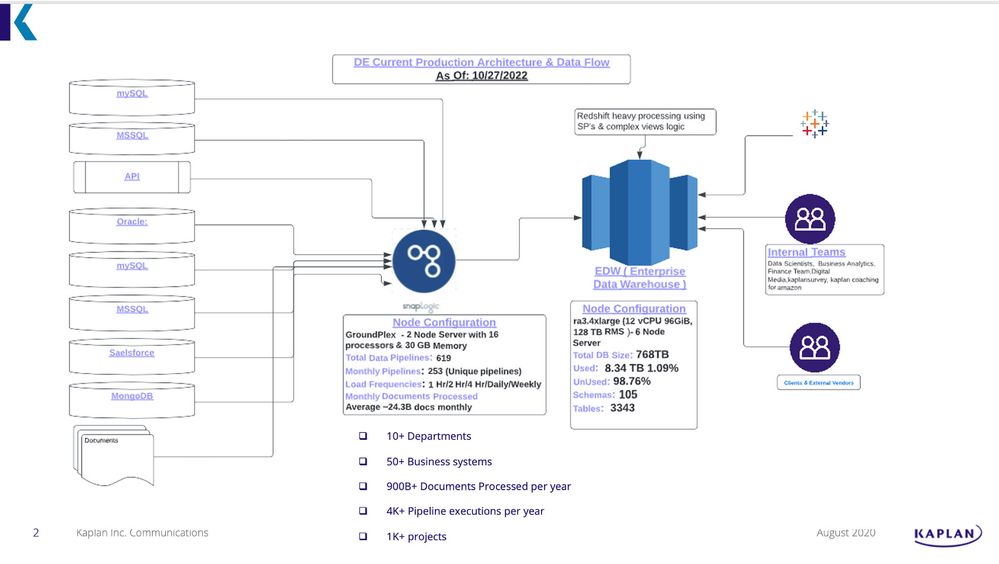

Kaplan wanted all of its data centralized in a Redshift data warehouse (DWH) so that teams would not each need to develop separate pipelines to get the data, and so that the data would be fully governed and people could fully trust it. To achieve this objective, Kaplan selected the SnapLogic Integration Platform after reviewing a few other ETL tools. Using SnapLogic, Kaplan achieved its data integration objective of centralizing all enterprise data within 6 months of initially licensing the product.

With the help of SnapLogic, we were able to apply standards to our data pipelines, and staff learned it quickly thanks to its GUI and intuitive ease of use. The learning curve, even for new team members, dropped dramatically from 5-6 weeks to 1 week.

SnapLogic has reduced our development time tremendously. We can now complete development in 2-3 days and ingest the data that users want. SnapLogic also provides an API that users can call to trigger a pipeline.
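As a rough illustration of what a user-initiated trigger could look like, here is a minimal Python sketch that builds an authenticated HTTP request for a pipeline task. The URL, the token, and the `load_date` parameter are hypothetical placeholders, not values from our environment, and passing pipeline parameters as query-string values is an assumption. Take the real task URL and authentication method from your own SnapLogic task configuration.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_trigger_request(task_url, token, params):
    """Build (but do not send) an HTTP request that would trigger a pipeline task."""
    # Pipeline parameters are appended as a query string (an assumption in this sketch).
    url = f"{task_url}?{urlencode(params)}" if params else task_url
    return Request(url, headers={"Authorization": f"Bearer {token}"}, method="GET")


# Hypothetical placeholder values, for illustration only.
req = build_trigger_request(
    "https://example.invalid/triggered-task/daily_orders_load",
    "PLACEHOLDER_TOKEN",
    {"load_date": "2022-10-25"},
)
print(req.full_url)

# To actually fire the task, send the request:
# with urlopen(req, timeout=30) as resp:
#     print(resp.status)
```

Keeping request construction separate from sending makes it easy to check the URL and headers before any pipeline is actually started.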

Describe how you implemented your automations and processes.

All our data pipelines follow the same standard logic: a parent pipeline calls a child pipeline. We store all parameter values in a job parameter table. When the snaps run, the first step is to read the values from the parameter table, and then only the required or changed data for that particular day is processed. This has reduced processing time tremendously. We use most of the snaps that are available. In addition, we had a request to get Zuora data, and we used the Zuora snap for that. A few of the snaps we use regularly are PostgreSQL, Google BigQuery, DynamoDB, MongoDB, Google Spreadsheet, Redshift, SFDC, Oracle, SQL, etc.
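The parent/child pattern driven by a parameter table can be sketched outside SnapLogic as well. The Python example below is only an illustration of the logic, not our actual pipelines: it uses an in-memory SQLite database, and the table names (`job_parameters`, `src_orders`, `dwh_orders`), the `last_loaded` watermark column, and both function names are hypothetical.

```python
import sqlite3


def child_pipeline(conn, source_table, target_table, last_loaded):
    """Copy only rows changed after the stored watermark; return the new watermark."""
    # Table names come from the trusted parameter table, not from user input.
    rows = conn.execute(
        f"SELECT id, payload, updated_at FROM {source_table} "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_loaded,),
    ).fetchall()
    conn.executemany(
        f"INSERT OR REPLACE INTO {target_table} (id, payload, updated_at) VALUES (?, ?, ?)",
        rows,
    )
    return rows[-1][2] if rows else last_loaded


def parent_pipeline(conn):
    """Read each job's parameters, call the child, then advance the watermark."""
    jobs = conn.execute(
        "SELECT job_name, source_table, target_table, last_loaded FROM job_parameters"
    ).fetchall()
    watermarks = {}
    for job_name, source, target, last_loaded in jobs:
        new_mark = child_pipeline(conn, source, target, last_loaded)
        conn.execute(
            "UPDATE job_parameters SET last_loaded = ? WHERE job_name = ?",
            (new_mark, job_name),
        )
        watermarks[job_name] = new_mark
    conn.commit()
    return watermarks


# Hypothetical demo data: one job, one unchanged row, one changed row.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE job_parameters (job_name TEXT PRIMARY KEY, source_table TEXT,
                             target_table TEXT, last_loaded TEXT);
CREATE TABLE src_orders (id INTEGER PRIMARY KEY, payload TEXT, updated_at TEXT);
CREATE TABLE dwh_orders (id INTEGER PRIMARY KEY, payload TEXT, updated_at TEXT);
INSERT INTO job_parameters VALUES ('orders', 'src_orders', 'dwh_orders', '2022-10-24');
INSERT INTO src_orders VALUES (1, 'old', '2022-10-23'), (2, 'changed', '2022-10-25');
""")
result = parent_pipeline(conn)
copied = conn.execute("SELECT id FROM dwh_orders").fetchall()
print(result, copied)
```

Because the watermark is stored alongside the job's other parameters, each run picks up only rows changed since the previous run, which is where the processing-time savings come from.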

What were the business results after executing the strategy?

The business is extremely happy with the speed of data ingestion and data availability. Productivity improvement: 90+%; speed improvement: 20+0%; reduction in manual inefficiencies: 50%.

Who was involved in building out the solution, and how?

Data engineers built the solution. A team of 5 data engineers created 610 data pipelines quickly and easily.

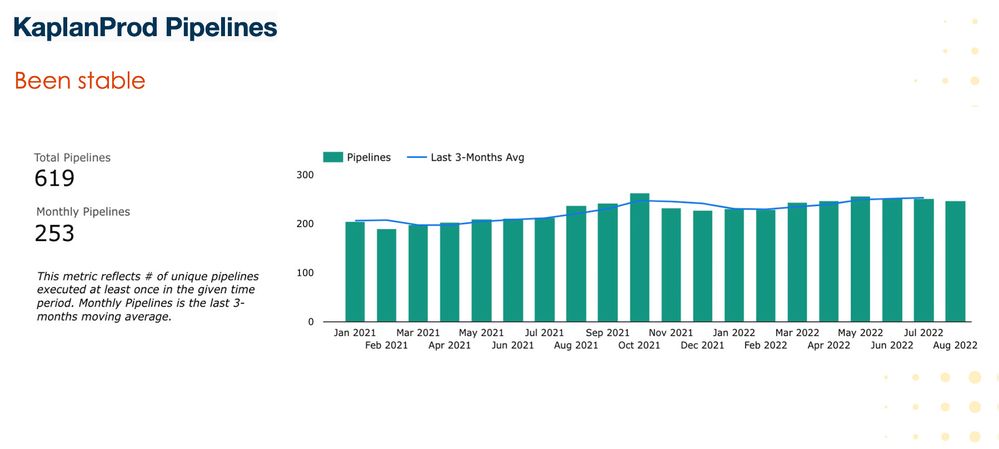

Key metrics: total pipelines: 610; monthly pipeline runs: 253

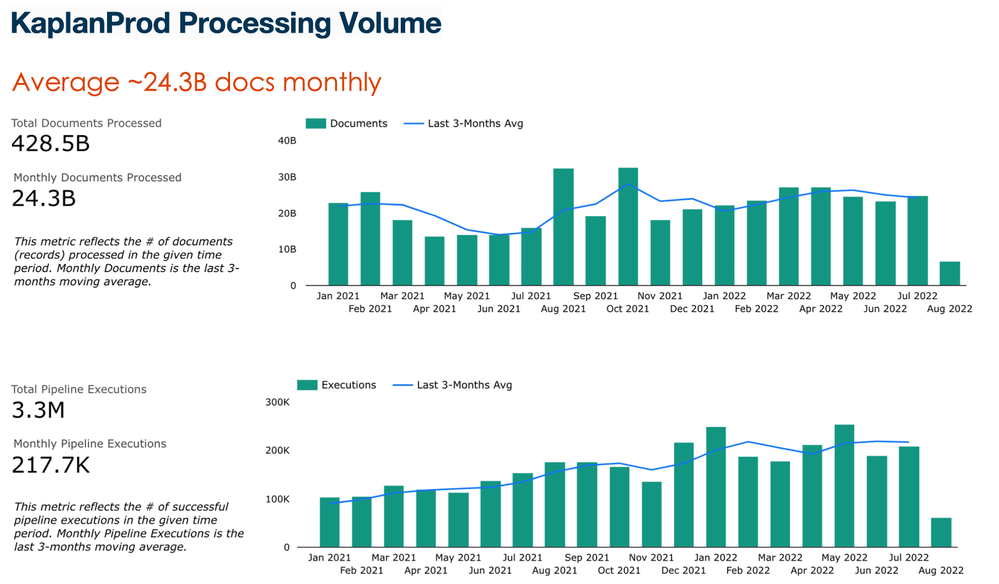

Total documents processed: 450 billion; on average, ~25 billion documents are processed monthly

Total pipeline executions: 3.3 M; monthly pipeline executions: 220K

Anything else you would like to add?

Diane Miller