- SnapLogic - Integration Nation

- SnapLogic Technical Blog

- Oracle to Redshift Migration Using Generative Inte...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

The question was, "can SnapLogic move 30 terabytes of data from Oracle to Redshift and how long would it take?" This question came from a current customer who asked for some performance numbers. To fulfill this request we needed to spin up an Enterprise Edition of Oracle RDS to use as the source; we have a pre-sales instance of Redshift we used as the target.

My teammate Matt Sager had just completed a similar request for one of his accounts. Unfortunately the target for his customer was different. We got together for a Zoom meeting to do the setup and in a couple of hours we had this done. Most of the time was setting up the new RDS instance and making sure all the account permissions worked. We dropped in the Redshift Snap and had a test running in minutes. And since each run took less than 7 minutes we were able to optimize it quickly.

SnapLogic has a demo instance of the standard edition of Oracle RDS, but for this test we wanted to use Enterprise Edition. The default configuration is larger and we wanted the additional capacity for the tests.

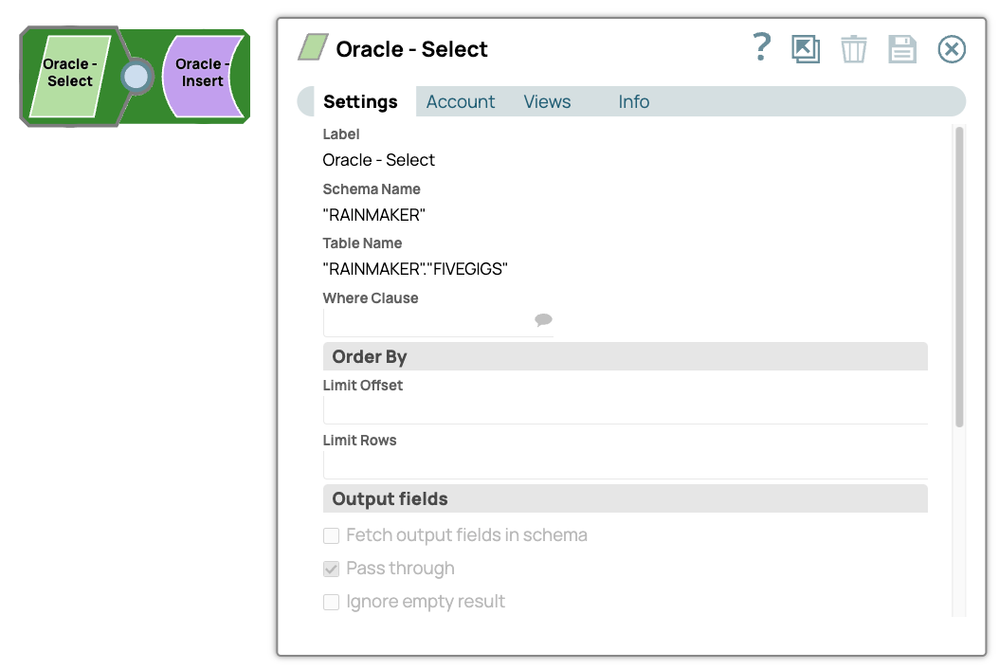

We loaded it with 5.8gig of data using a simple pipeline.

A single instance of the pipeline was able to transfer 5.8GB of data from Oracle SE RDS to Oracle EE RDS with a sustained throughput of 5.7k rows per second.

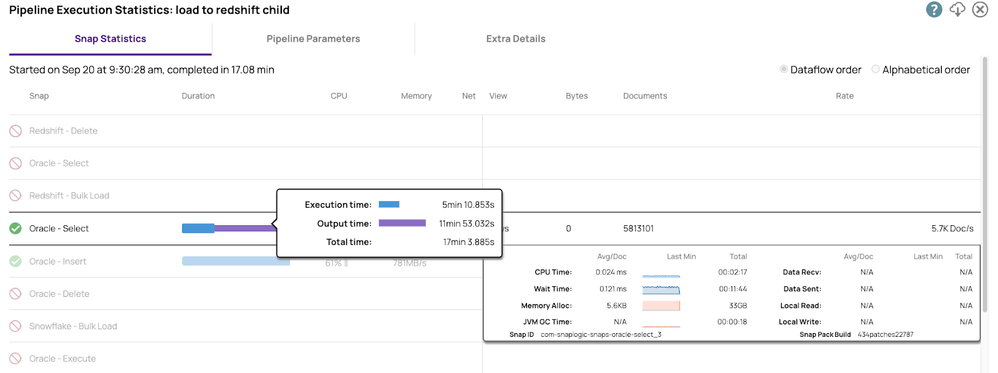

Here are performance numbers from the Oracle Select. We have detailed information about every relevant operational metric. This information is required for performance tuning.

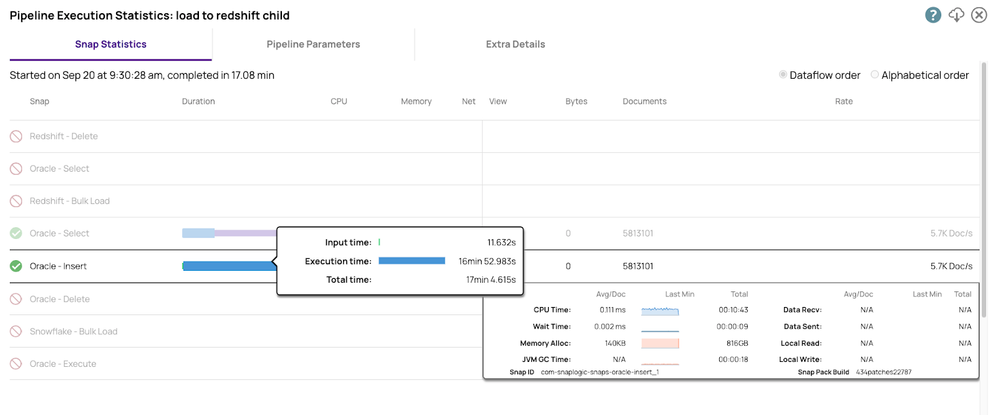

Here are numbers for the Insert into Enterprise Edition

Both instances of Oracle, the S3 buckets, and Redshift were all in the same AWS region to remove as much network latency as possible. While these numbers are interesting, the real test was between Oracle and Redshift.

The Cloudplex we used is a six-node plex made up of 4vCPUs, 16GB RAM nodes.

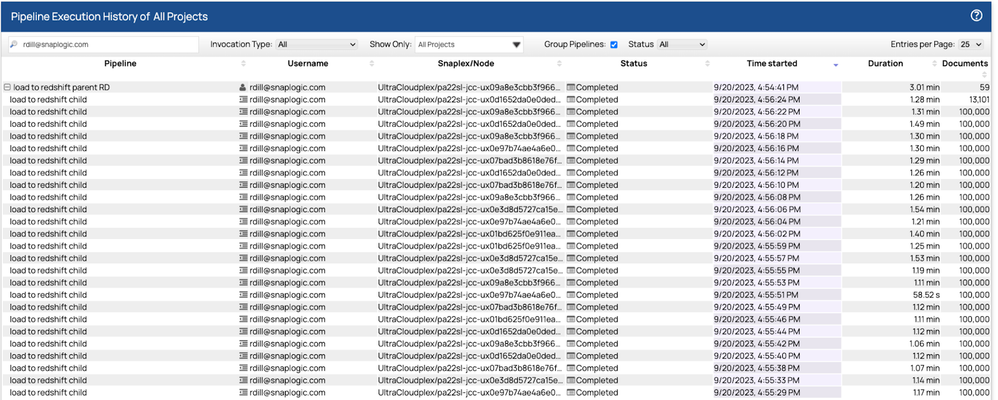

Once we had the data in Oracle, we took Matt’s pipelines and were able to load the data into Redshift in parallel using a pool of 32 child pipelines. The first run took a little over seven minutes. We tweaked the fetch and batch sizes, over three more runs the final time was, 5,813,101rows of data in 3.01 seconds.

Here are final results from the dashboard.

Each of the child pipelines read and wrote 100,000 rows in a range of time between 1.07 seconds to 1.31. The control plane load balanced the 32 child pipelines across the six nodes using a round robin, least used algorithm. The nodes would accept and run as many as they could. When they reached capacity, the new pipelines were staged and only started when the node had the capacity to run it. From start to finish was 3.01 seconds to run the parent pipeline and the 59 child instances.

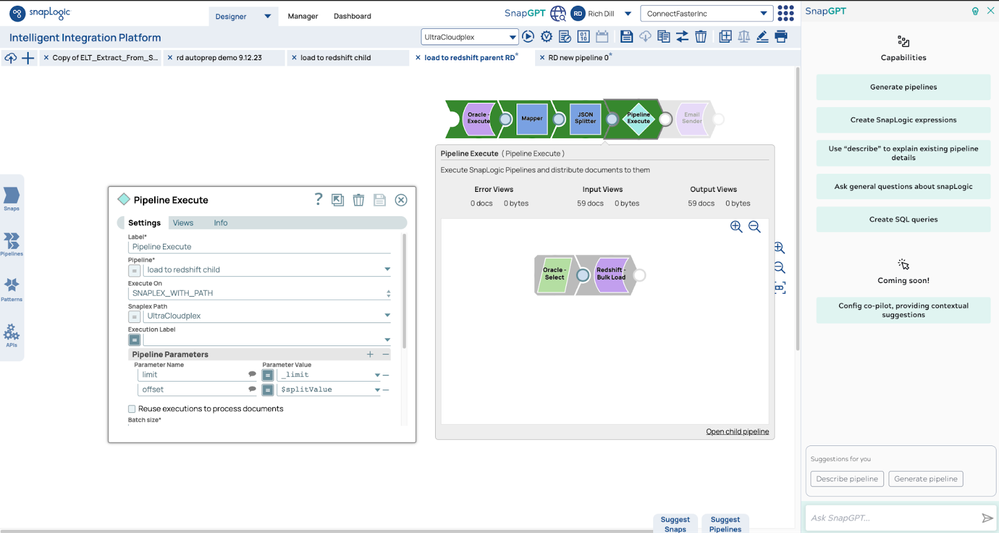

Here are the pipelines. The parent pipeline uses the count function to get the number of rows. We use that number to determine the number of child pipelines, in this case 5.9 million rows will be read by 59 pipelines, each pipeline will read and write 100,000 rows.

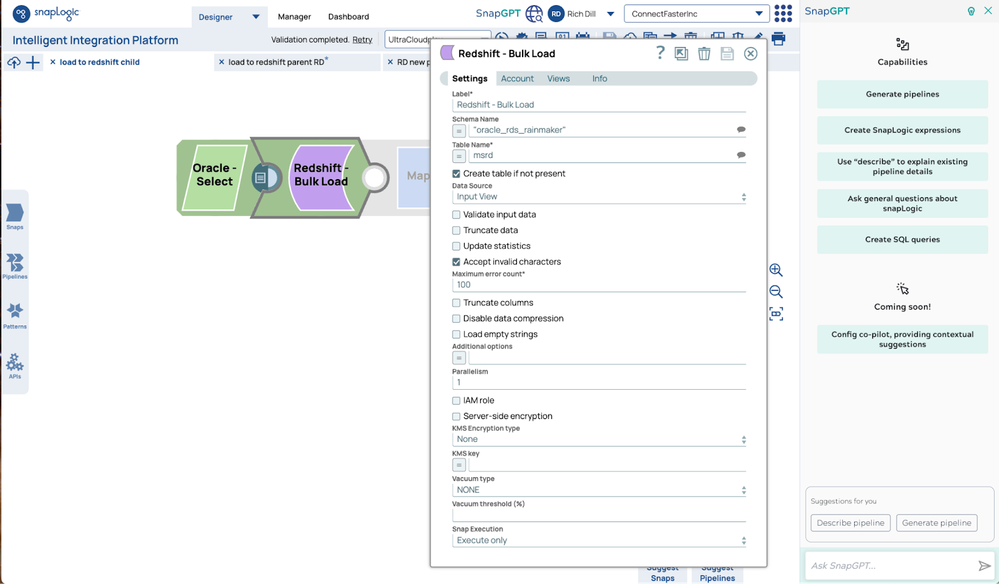

Here is the Redshift Bulk Load Snap configuration, standard settings are all that is needed to perform at scale.

We used a common pattern to answer a customer question in a couple of hours. We took an existing pipeline that Matt had, made a copy of it, and swapped out the old database load for the Redshift Bulk Load Snap and had tests running in minutes.

The test was done using gigabytes of data and six large nodes to model moving terabytes. To scale to the next level is easily done by simply increasing the number and size of nodes. The upper limit of the capacity of this pattern is based on three limits, the read and write speeds of the source and target, and network bandwidth. We have customers moving terabytes and petabytes everyday using patterns like this. It is a quick and easy task to move data at scale without writing complex SQL statements or code. Select the read and write Snaps you need, give them the right parameters and tune as much as you need to, and in minutes your task is done.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

- Giving Thanks with Our Latest November Product Release! in Release Notes and Announcements

- Multimodal Processing in LLM in SnapLogic Technical Blog

- Guide for Advanced GenAI App Patterns in SnapLogic Technical Blog

- August 2024 Release: Turbocharge Your GenAI Applications and Data Automation! in Release Notes and Announcements

- Join us for the May 2024 Product Webinar! in Release Notes and Announcements