
- JSON data size fix


05-05-2022 08:20 PM

My source data has more than 1M records in CSV format. All of those records had errors, so they were routed to the error view. Now all of those records need to be logged in an S3 folder. I also send an email to the team that contains the file name and location.

The data is written to S3 in JSON format, which is fine, but the JSON file takes a long time to open (which is expected). Can we do this more efficiently? Sometimes the log file does not load at all ☹️

The data must go to an S3 folder, but how we store it is open for discussion: the records can be written in CSV, TXT, or JSON format.

I had the idea of splitting the records and saving them as 2-3 JSON files, but I am not sure whether that is a good approach.

Any ideas?


05-06-2022 11:22 AM

I assume you are using the JSON Formatter to write the file, yes? But how are you formatting it? And what application are you using to try to open the file?

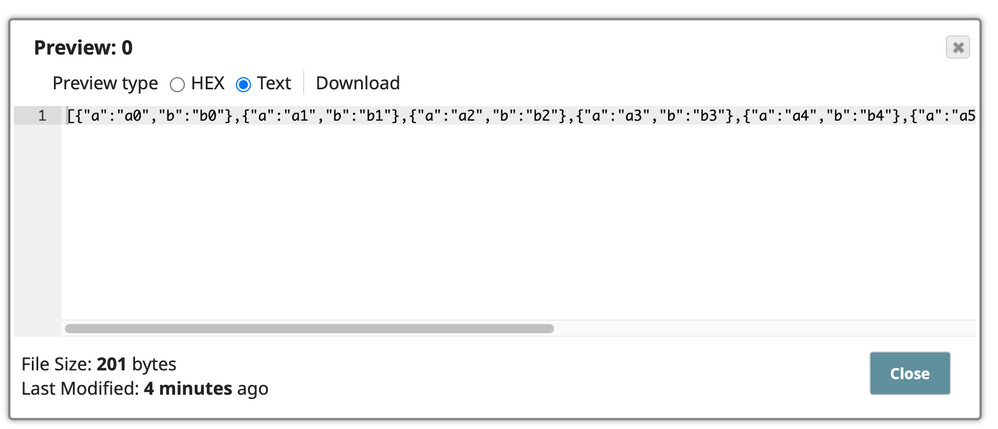

By default, the JSON Formatter will use a very compressed format with no line breaks. Some editors don’t deal well with a file where all the data is on one very long line.

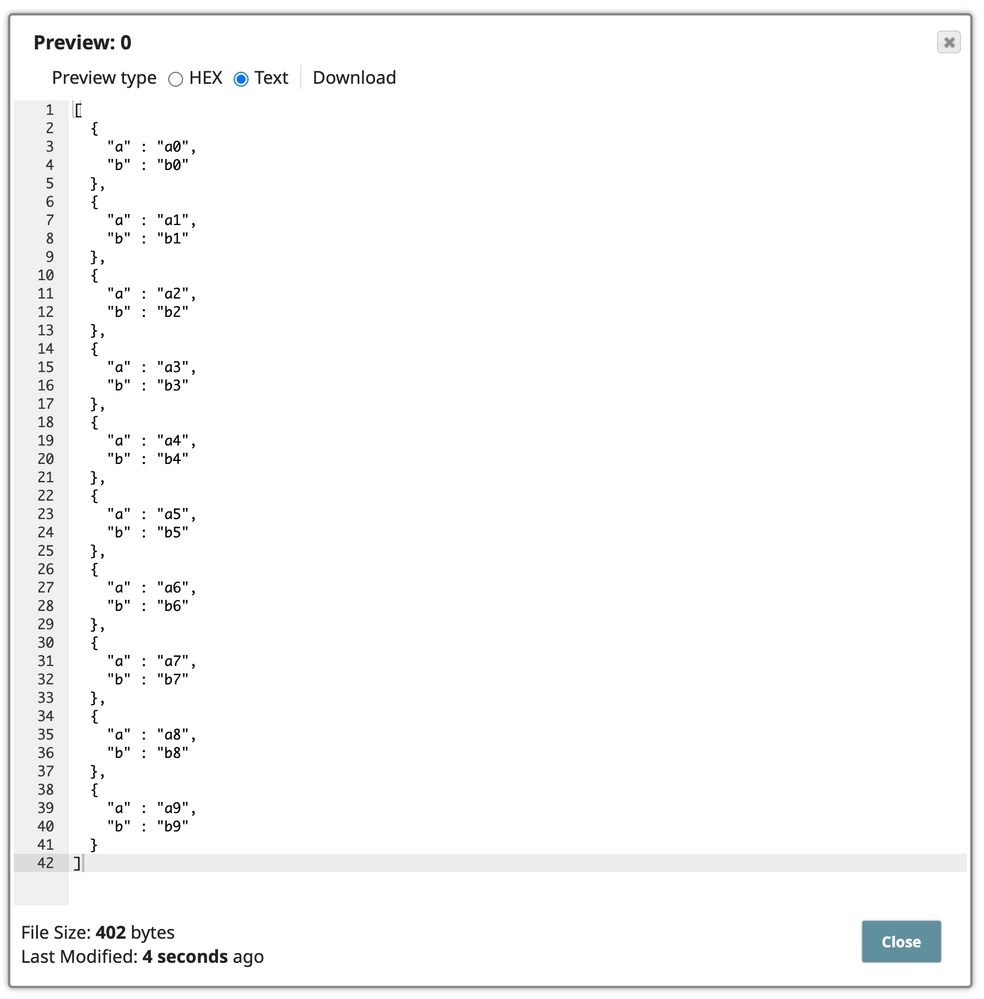

You could enable “Pretty Print” on the formatter, which produces a much more readable and verbose format like this:

But this format can also be a challenge for some JSON-capable applications since all of the data is inside a single JSON array.

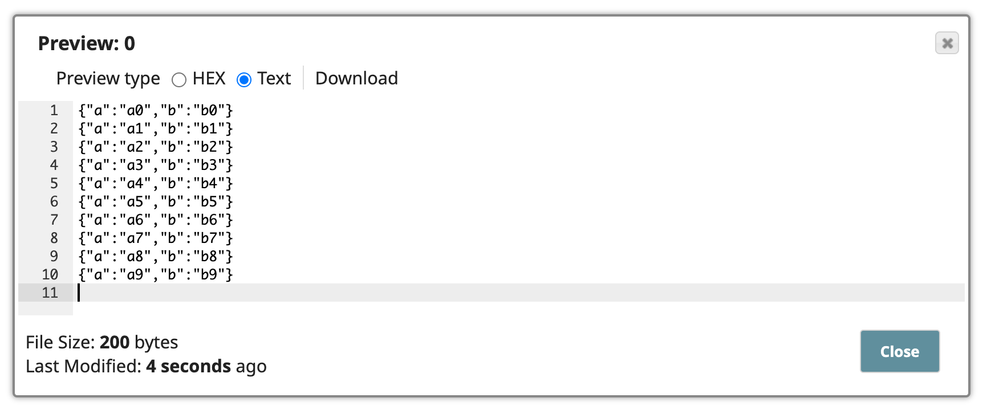

You might want to consider the “JSON Lines” format, where each line is a compactly formatted JSON object representing one document:

This is often the best choice when dealing with a “log”. Each JSON line corresponds to a line of your CSV file. See https://jsonlines.org/
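To make the difference between the three layouts concrete, here is a minimal Python sketch that runs outside SnapLogic. The sample records and the `to_json_lines` helper are hypothetical, and this is not how the JSON Formatter is implemented; it only illustrates the shape of each output.

```python
import json


def to_json_lines(records):
    """Serialize an iterable of dicts as JSON Lines: one compact object per line."""
    return "".join(json.dumps(rec, separators=(",", ":")) + "\n" for rec in records)


# Hypothetical error records standing in for rows routed to the error view.
records = [
    {"id": 1, "error": "Invalid date"},
    {"id": 2, "error": "Missing field"},
]

# Compact: the whole array on one very long line.
compact = json.dumps(records, separators=(",", ":"))

# Pretty printed: readable, but still a single JSON array spread over many lines.
pretty = json.dumps(records, indent=2)

# JSON Lines: each line is an independent JSON document.
lines = to_json_lines(records)

# A reader can process JSON Lines one record at a time
# instead of parsing the whole file up front.
for line in lines.splitlines():
    record = json.loads(line)
    print(record["id"], record["error"])
```

Because each line parses on its own, a viewer or script can stream through a large log without loading the entire array into memory.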


05-05-2022 09:56 PM

Do you compress the files before writing to S3? The Compress Snap with standard GZIP is what you need.


05-08-2022 09:22 PM

I had issues when trying to zip the file. I could see a zipped file being created, but when I opened it, I could not see any file inside…

Do you have any samples that I could try, please?
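As a rough sample of the GZIP approach suggested above, here is a minimal Python sketch that runs outside SnapLogic. It is not a pipeline export; the sample records and the `gzip_json_lines` helper are hypothetical, and it only shows what a GZIP-compressed log contains and how to check it.

```python
import gzip
import json


def gzip_json_lines(records):
    """Encode records as JSON Lines and compress the bytes with GZIP."""
    payload = "".join(json.dumps(rec) + "\n" for rec in records).encode("utf-8")
    return gzip.compress(payload)


# Hypothetical error records; real data would come from the error view.
records = [{"id": i, "error": "Invalid date format"} for i in range(1000)]

raw_size = len("".join(json.dumps(rec) + "\n" for rec in records).encode("utf-8"))
blob = gzip_json_lines(records)

# Verify the compressed bytes by decompressing them and reading the lines back.
restored = gzip.decompress(blob).decode("utf-8").splitlines()

print("raw bytes:", raw_size)
print("gzip bytes:", len(blob))
print("records restored:", len(restored))
```

Note that GZIP compresses a single stream of bytes rather than building an archive of named files the way ZIP does, so the result is normally saved with a `.gz` extension and opened with a GZIP-aware tool. Whether that explains the empty archive described here is an assumption, since the thread does not say which tool was used to open the file.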


05-08-2022 09:24 PM

I am using the JSON Formatter and opening the file in Notepad++. I will try the Pretty Print format and update you. Thank you.
