- SnapLogic - Integration Nation

- Designing and Running Pipelines

- Re: Convert Base64 to HEX

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Convert Base64 to HEX

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-18-2021 02:03 PM

I am working on a Snaplogic pipeline to copy data from Oracle to Snowflake. A few tables at source have BLOB column which needs to be copied. Snaplogic sees them as “Base64 encoded data” but Snowflake needs the data in HEX format. I would like to know if its possible to convert the data as required and load into Snowflake tables. I’ve attached a sample pipeline.

TEST_2021_06_18.slp (7.1 KB)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-23-2021 02:08 PM

Hi Kory,

Thank you for responding. Somehow the compression doesn’t seem to work or doesn’t compress enough. I still get this error from Snaplogic/Snowflake:

Reason:

Snap errors: {ruuid=2fd41aae-8fe9-4d66-88ed-7ea0892d0fc3, label=Snowflake - Bulk Upsert, failure=Error creating or writing input data to the temp table “BWDOCS”.MERGE_TEMP_TABLE_8b8d9379_6356_4a04_bd1f_8352839a952d, reason=Max LOB size (16777216) exceeded, actual size of parsed column is 111438132

File ‘@~/INPUTVIEW/f39bd9bb-a877-4583-b9a0-5ea6cb9aa5f7/snowflakebuklload_data_31dd64d1-8f88-45f2-863e-68563a3eb9cb.tmp.gz’, line 1, character 111438138

Row 1, column “MERGE_TEMP_TABLE_8B8D9379_6356_4A04_BD1F_8352839A952D”[“$2”:2]

Note the “Max LOB size” displayed in the error above. That is how I got the impression that 16 MB is the max size. Maybe, Snaplogic or Snowflake is displaying incorrect information.

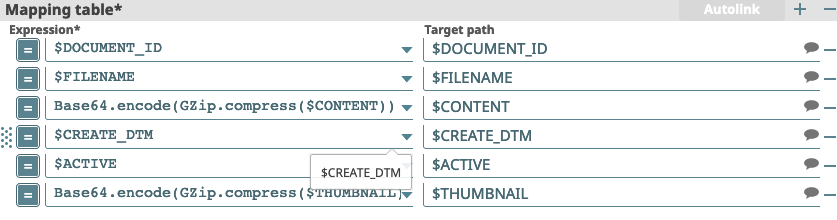

Here is the mapper setting as you suggested:

Also, I tested Oracle’s internal compression utility (utl_compress) on a few rows and the compression ratio isn’t that impressive. Maybe, because binary data is already compressed enough or for whatever reason. This is my test result:

select dbms_lob.getlength(content) orig_blob_size, dbms_lob.getlength(utl_compress.lz_compress(content)) compress_blob_size

from <<table_name>>

where dbms_lob.getlength(content) > (1010241024)

and rownum < 11

ORIG_BLOB_SIZE COMPRESS_BLOB_SIZE

83685170 83554366

10541235 10379628

17928157 17849033

220556537 220265485

15064844 10929200

96704516 80316259

15255342 15241613

15322232 15302694

17142022 17096265

20777303 20667900

As you can see, compressed size is almost the original size.

Thanks

Anand

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-24-2021 07:20 AM

I should probably have asked if you know what type of data is stored in that BLOB structure. When you try to compress data that is already compressed (e.g. image/audio/video), the compression won’t gain much and sometimes gets a bit bigger. So in this case, it appears that the data is likely already compressed.

You may need to find another storage model for this data outside Snowflake if it doesn’t fit in the max size field. As @ptaylor recommended, you should probably consult the Snowflake community for suggestions there.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-24-2021 09:12 AM

Thanks Kory. We are exploring various other options with Snowflake cust support.

- Converting a string to JSON doesn't work in Designing and Running Pipelines

- Convert array with objects into object with key/value pairs in Designing and Running Pipelines

- CSV parser cannot parse data in Designing and Running Pipelines

- Parsing XML using a Python script returns an encoding error message in Designing and Running Pipelines

- Change all Keys in JSON structure to lowercase in Designing and Running Pipelines