Pipeline Execute parallel + sequential execution

09-05-2017 11:13 AM

The documentation says the following:

Execute a pipeline for every input document. If the target pipeline has an unlinked input view, the input document to this Snap will be passed into the unlinked input of the child execution. Similarly, if the target pipeline has an unlinked output view, the output document from the child execution will be used as the output document from the Pipeline Execute Snap.

Execute one or more pipelines and reuse them to process multiple input documents to this Snap. The child pipeline must have one unlinked input view and one unlinked output view. As documents are received by this Snap, they will be passed to the child executions for processing. Any output documents from the child executions will be used as the output of this Snap.

As I understand it, when the reuse option is selected and a child pipeline execution is already running, any further input documents are handed to that same execution, which processes them sequentially in the order they arrive.

When a different pipeline needs to be triggered, a new execution of that pipeline is started in parallel, without disturbing the execution that is already running.

Please correct me if I am missing anything. In our use case we have 2 pipelines with 5 documents each. We want the two pipelines to run in parallel, while the documents for each pipeline are fed in sequence to its already running execution.

Will this feature help us achieve that?

09-07-2017 09:50 AM

The result I want to achieve is this: as shown in the output view of the Sort Snap, the documents are sorted by pipelinename and timestamp. This output is sent to Pipeline Execute, and we want 3 instances, one per unique pipeline, with each instance processing the payload field of its documents one by one.

For example, suppose I send 5 input documents, each carrying the name of the pipeline to execute: 2 documents name p1-pipeline and 3 name p2-pipeline. I would like p1 and p2 to start running in parallel, with each one processing its payloads in sequence.

09-07-2017 09:56 AM

Can you run it without the reuse checkbox and see if that is what you want to achieve?

09-07-2017 03:31 PM

Yes, the pool size governs all executions, regardless of the type of pipeline being executed.

As mentioned in another reply, if you enable reuse, the child pipeline to execute cannot change from one document to the next. Instead, you might try collecting all of the documents for a given child into a single document using the Group By Fields snap. The output of that snap can be sent to a PipeExec with reuse disabled and the pipeline to execute as an expression. Then, in the child pipeline, the first snap can be a JSON Splitter that breaks up the group into separate documents. This design should work in a fashion similar to what you are thinking.
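The flow of that design can be sketched outside SnapLogic in plain Python. This is only an illustration of the pattern, not SnapLogic code: `group_by_pipeline` stands in for the Group By Fields Snap, `run_child` for one child execution that starts with a JSON Splitter, and the thread pool's `max_workers` for the pool size. All function names and the `group` key are hypothetical; the `pipelinename`, `timestamp`, and `payload` fields are assumed from the description earlier in the thread.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def group_by_pipeline(documents):
    """Stand-in for Group By Fields: one grouped document per pipeline name.

    Input order is preserved inside each group, so documents that arrive
    sorted by timestamp stay sorted.
    """
    groups = defaultdict(list)
    for doc in documents:
        groups[doc["pipelinename"]].append(doc)
    return [{"pipelinename": name, "group": docs} for name, docs in groups.items()]


def run_child(grouped_doc):
    """Stand-in for one child execution.

    The loop plays the role of the JSON Splitter: the group is broken back
    into single documents, which are handled strictly one after another.
    """
    processed = []
    for doc in grouped_doc["group"]:
        processed.append((grouped_doc["pipelinename"], doc["payload"]))
    return processed


def run_all(documents, pool_size=2):
    """Run one child per group; up to pool_size children run concurrently."""
    grouped = group_by_pipeline(documents)
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        # map() returns results in the order of the grouped documents.
        return list(pool.map(run_child, grouped))


# Hypothetical sample input: 2 documents for p1-pipeline, 3 for p2-pipeline.
docs = [
    {"pipelinename": "p1-pipeline", "timestamp": 1, "payload": "a"},
    {"pipelinename": "p1-pipeline", "timestamp": 2, "payload": "b"},
    {"pipelinename": "p2-pipeline", "timestamp": 1, "payload": "c"},
    {"pipelinename": "p2-pipeline", "timestamp": 2, "payload": "d"},
    {"pipelinename": "p2-pipeline", "timestamp": 3, "payload": "e"},
]
results = run_all(docs)
```

With this input, `results` holds two lists, one per pipeline, each containing that pipeline's payloads in their original order. Only one child runs per pipeline name, which is the "one instance of each pipeline" behavior the thread asks for.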

09-08-2017 05:49 AM

Thank you, it worked 🙂

09-07-2017 10:19 AM

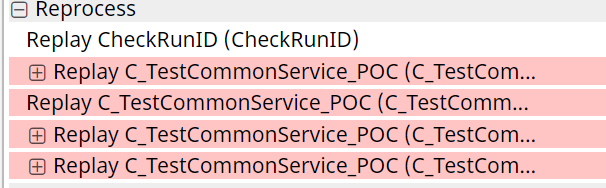

Without reuse enabled, I get the result in the screenshot, whereas I want only one instance of each pipeline.
