- SnapLogic - Integration Nation

- Patterns

- Re: Reference Implementation - Integration Pattern...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Reference Implementation - Integration Pattern - On-Prem Database to Cloud Datawarehouse

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-25-2018 09:33 AM

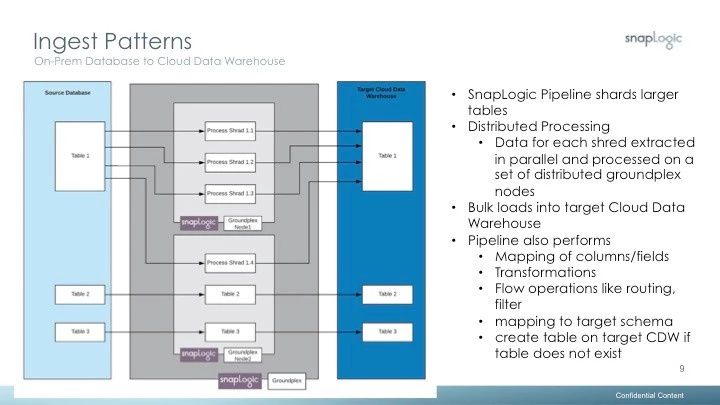

This is a common integration patterns that customers tend to use as they are moving from on-prem to cloud.

The following videos describe this integration pattern and the attached reference implementation pipelines help you get a head start on the implementation.

Part 1

Part 2

Pipelines Attached

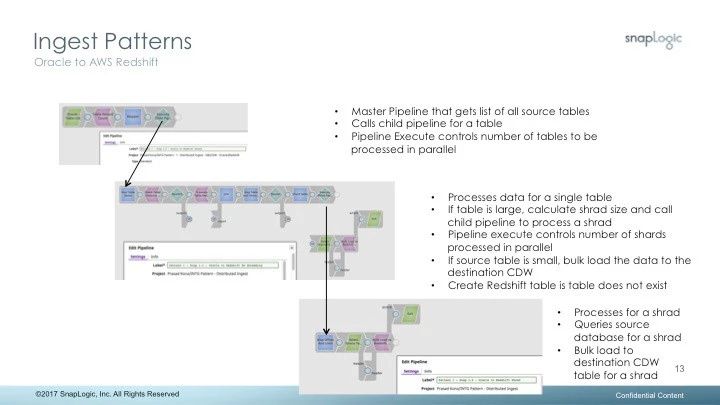

The pipeline moves all the tables from an Oracle database to Redshift. The pipelines are designed to use our best practices like parameterization of all aspects of the pipeline (eg. account names , db names, table names). All are dynamic and controlled via pipeline parameters.

Source: Oracle Tables

Target: Redshift Tables

Snaps used:

- Step 1: Oracle - Table List, Filter, Oracle - Execute, Mapper, Pipeline Execute

- Step 2: Mapper, Redshift - Execute, Router, Join, Oracle - Select, Shard Offsets*, Redshift - Bulk Load, Pipeline Execute, Exit, Union

- Step 3: Mapper, Oracle - Select, Redshift - Bulk Load, Exit

*This is a Snap developed by the SnapLogic Professional Services team.

Downloads

Pattern 1 - Step 1.0 - Oracle to Redshift Parent_2018_06_25.slp (9.4 KB)

Pattern 1 - Step 1.1 - Oracle to Redshift No Shredding_2018_06_25.slp (20.9 KB)

Pattern 1 - Step 1.2 - Oracle to Redshift Shred_2018_06_25.slp (7.7 KB)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-10-2018 10:34 PM

Like your pattern for the sharding, I have had to implement something similar. Your example pipelines calls the shard table snap. Non existent in my environment - wondering if this is generally available or is it custom?

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-11-2018 08:31 AM

Shard offsets was created by our Professional Services team. Talk with you account manager for information about availability.

Diane Miller

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-11-2018 11:49 AM

I’m trying to make this pattern work for MSSQL to Snowflake. Do you have a working implementation to Snowflake. I cannot seem to get the bulk load to work from the MSSQL->Select snap to the Snowflake Bulk Loader. Is there functionality in the Oracle bulk loader that this needed for this pattern?

Lastly, I can make this work if I have a specific mapper and bulk loader for each table but that seems like a lot of redundant work (100+ tables worth).

Let me know.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-12-2018 01:21 PM

The oracle extract uses the limit offset to help with shrading the data. If you plan to ignore shrading the data then you can easily convert the existing pattern to support MSSQL and target as Snowflake… Set shrading as no in the pipeline parameters on the master pipeline.

Here is an example of a pattern that might also help that gets files on s3 and insert into snowflake .

- Multiple idocs to be sent at a time in Designing and Running Pipelines

- Move data from AWS S3 to Snowflake Data Warehouse in Patterns

- Reference Implementation - Integration Pattern - Error Handling and Retry Pattern in Patterns

- Reference Implementation - Integration Pattern - On-Prem Database to Cloud Datawarehouse in Patterns

- Workday Account Creation in Snap Packs