Guide for Advanced GenAI App Patterns

7 hours ago

In the rapidly evolving field of Generative AI (GenAI), foundational knowledge can take you far, but it's the mastery of advanced patterns that truly empowers you to build sophisticated, scalable, and efficient applications. As the complexity of AI-driven tasks grows, so does the need for robust strategies that can handle diverse scenarios—from maintaining context in multi-turn conversations to dynamically generating content based on user inputs.

This guide delves into these advanced patterns, offering a deep dive into the strategies that can elevate your GenAI applications. Whether you're an admin seeking to optimize your AI systems or a developer aiming to push the boundaries of what's possible, understanding and implementing these patterns will enable you to manage and solve complex challenges with confidence.

1. Advanced Prompt Engineering

1.1 Comprehensive Control of Response Format

In GenAI applications, controlling the output format is crucial for ensuring that responses align with specific user requirements. Advanced prompt engineering allows you to craft prompts that provide precise instructions on how the AI should structure its output. This approach not only improves the consistency of responses but also makes them more aligned with the desired objectives.

For instance, you can design prompts with a detailed structure that includes multiple elements such as Context, Objective, Style, Audience, and desired Response Length. This method allows for granular control over the output. A sample prompt might look like this:

- Context: Provide background information on the topic to set the stage.

- Objective: Clearly define the purpose of the response.

- Style: Specify whether the response should be formal, informal, technical, or creative.

- Audience: Identify the target audience, which influences the language and depth of explanation.

- Response format: Instruct the AI to generate a response that takes approximately 3 minutes to read, ensuring depth and comprehensiveness, typically spanning 4-5 paragraphs.

This level of detail in prompt engineering ensures that the AI-generated content meets specific needs, making it suitable for various use cases, such as generating educational material, detailed reports, or customer communications.
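As a minimal sketch of the structure described above, the five elements can be held in a small data class and rendered into a single prompt string. The names `PromptSpec` and `build_prompt`, and the sample values, are illustrative assumptions and are not part of any SnapLogic API.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """The five prompt elements listed above (hypothetical container)."""
    context: str
    objective: str
    style: str
    audience: str
    response_format: str


def build_prompt(spec: PromptSpec) -> str:
    """Render each element as a labeled line so the model sees explicit sections."""
    sections = [
        ("Context", spec.context),
        ("Objective", spec.objective),
        ("Style", spec.style),
        ("Audience", spec.audience),
        ("Response format", spec.response_format),
    ]
    return "\n".join(f"{label}: {text}" for label, text in sections)


spec = PromptSpec(
    context="Our team is moving nightly batch jobs to event-driven pipelines.",
    objective="Explain the main trade-offs of the migration.",
    style="Formal and technical.",
    audience="Integration architects who know batch ETL well.",
    response_format="About a 3-minute read, 4-5 paragraphs.",
)
prompt = build_prompt(spec)
print(prompt)
```

Keeping the elements as separate fields makes it easy to vary one of them (for example, the audience) while holding the rest of the prompt constant.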

1.2 Few-Shot Learning

Few-shot learning is an advanced technique where the AI model is provided with a small number of examples (often just a few) within the prompt to guide its output. This method is particularly powerful when dealing with tasks that require the model to understand and replicate complex patterns or formats with minimal input.

By incorporating examples directly into the prompt, you can steer the model toward more accurate and contextually appropriate responses even when large amounts of training data are not available. The examples only shape the current request; the model itself is not retrained. This approach is valuable for customizing the AI's behavior in niche applications where generalized training data may not suffice.

Example Prompt:

"Generate a product description for the following items. The description should be concise, highlight key features, and appeal to a target audience interested in quality and innovation.

Example 1:

- Product Name: SmartHome WiFi Thermostat

- Description: "The SmartHome WiFi Thermostat is your ultimate solution for energy-efficient temperature control. With seamless integration into your smart home ecosystem, it offers intuitive controls, customizable settings, and real-time energy usage reports. Designed for modern living, it ensures comfort while helping you save on energy bills."

Example 2:

- Product Name: EcoBrew Stainless Steel Coffee Maker

- Description: "The EcoBrew Stainless Steel Coffee Maker combines sustainability with top-tier performance. Made from durable stainless steel, this eco-friendly coffee maker brews the perfect cup every time, with minimal environmental impact. Ideal for the conscious coffee lover, it offers precision brewing and a sleek design that complements any kitchen."

New Item:

- Product Name: UltraLight Travel Backpack

- Description:

AI-Generated Output:

"UltraLight Travel Backpack: The UltraLight Travel Backpack is engineered for the modern adventurer. Weighing just under a pound, it offers unmatched portability without sacrificing storage capacity. Featuring water-resistant fabric, multiple compartments, and ergonomic shoulder straps, this backpack is designed for those who need durability and convenience on the go. Perfect for day hikes, weekend getaways, or everyday use, the UltraLight Travel Backpack is your reliable companion on any journey."

Explanation:

In this example, the AI was given two product descriptions that demonstrate the desired style, tone, and structure of the output. When asked to generate a new description for the "UltraLight Travel Backpack," the AI used the patterns from the provided examples to create a similar, contextually appropriate product description. Despite only being shown two examples, the model effectively captured the key elements needed to generate a new, high-quality output.

This approach is highly beneficial when you need the AI to produce consistent results across similar tasks, especially in scenarios where creating extensive training data is impractical. By providing just a few examples, you guide the AI's understanding, allowing it to apply the learned pattern to new, but related, tasks.
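The prompt above can also be assembled programmatically so that the examples and the new item stay in one consistent layout. The following is a rough sketch; `build_few_shot_prompt` is a hypothetical helper, and the shortened descriptions are placeholders rather than the full text from the example.

```python
def build_few_shot_prompt(instruction, examples, new_item):
    """Lay out the instruction, numbered examples, and the open slot for the new item."""
    parts = [instruction, ""]
    for i, (name, description) in enumerate(examples, start=1):
        parts += [
            f"Example {i}:",
            f"- Product Name: {name}",
            f'- Description: "{description}"',
            "",
        ]
    # The final "Description:" is left empty for the model to complete.
    parts += ["New Item:", f"- Product Name: {new_item}", "- Description:"]
    return "\n".join(parts)


examples = [
    ("SmartHome WiFi Thermostat", "Energy-efficient temperature control for the smart home."),
    ("EcoBrew Stainless Steel Coffee Maker", "Sustainable brewing with top-tier performance."),
]
few_shot_prompt = build_few_shot_prompt(
    "Generate a concise product description that highlights key features.",
    examples,
    "UltraLight Travel Backpack",
)
print(few_shot_prompt)
```

Ending the prompt on the empty description slot mirrors the examples' layout, which is what nudges the model to continue in the same format.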

1.3 Chain of Thought

The chain-of-thought pattern encourages the AI to generate responses that follow a logical sequence, mirroring human reasoning. This technique is particularly useful in complex scenarios where the AI needs to make decisions, solve problems, or explain concepts step by step.

By structuring prompts that lead the AI through a series of reasoning steps, you can guide it to produce more coherent and rational outputs. This is especially effective in applications requiring detailed explanations, such as scientific reasoning, technical problem-solving, or any situation where the AI needs to justify its conclusions.

For instance, a prompt might instruct the AI to break down a complex problem into smaller, manageable parts and tackle each one sequentially. The AI would first identify the key components of the problem, then work through each one, explaining its reasoning at each step. This method not only enhances the clarity of the response but also improves the accuracy and relevance of the AI’s conclusions.
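One possible way to express this is a reusable template that asks for the decomposition explicitly and for a clearly marked final answer that downstream steps can parse. The template wording, the `Answer:` convention, and both helper names are assumptions made for illustration.

```python
from typing import Optional

COT_TEMPLATE = (
    "Solve the problem below.\n"
    "1. List the key components of the problem.\n"
    "2. Work through each component in order, explaining your reasoning.\n"
    "3. State the final answer on its own line, prefixed with 'Answer:'.\n\n"
    "Problem: {problem}"
)


def build_cot_prompt(problem: str) -> str:
    """Wrap a problem statement in step-by-step reasoning instructions."""
    return COT_TEMPLATE.format(problem=problem.strip())


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(response.splitlines()):
        if line.strip().startswith("Answer:"):
            return line.split("Answer:", 1)[1].strip()
    return None


cot_prompt = build_cot_prompt("A pipeline runs 3 jobs of 20 minutes each in sequence. How long does it take?")
print(cot_prompt)

# A hand-written reply standing in for a model response.
sample_reply = "Components: 3 jobs, 20 minutes each.\nSequential, so 3 * 20 = 60.\nAnswer: 60 minutes"
print(extract_answer(sample_reply))
```

Separating the reasoning from a marked answer line keeps the explanation available for review while still giving the pipeline a single value to pass on.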

2. Multi-modal Processing

Multi-modal processing in Generative AI is a cutting-edge approach that allows AI systems to integrate and process multiple types of data—such as text, images, audio, and video—simultaneously. This capability is crucial for applications that require a deep understanding of content across different modalities, leading to more accurate and contextually enriched outputs.

For instance, in a scenario where an AI is tasked with generating a description of a scene from a video, multi-modal processing enables it to analyze both the visual elements and the accompanying audio to produce a description that reflects not just what is seen but also the context provided by sound. Similarly, when processing text and images together, such as in a captioning task, the AI can better understand the relationship between the words and the visual content, leading to more precise and relevant captions.

This advanced pattern is particularly beneficial in complex environments where understanding the nuances across different data types is key to delivering high-quality outputs. For example, in medical diagnostics, AI systems using multi-modal processing can analyze medical images alongside patient records and spoken notes to offer more accurate diagnoses. In customer service, AI can interpret and respond to customer queries by simultaneously analyzing text and voice tone, improving the quality of interactions.

Moreover, multi-modal processing enhances the AI's ability to learn from varied data sources, allowing it to build more robust models that generalize better across different tasks. This makes it an essential tool in the development of AI applications that need to operate in real-world scenarios where data is rarely homogeneous.

By leveraging multi-modal processing, AI systems can generate responses that are not only more comprehensive but also tailored to the specific needs of the task at hand, making them highly effective in a wide range of applications. As this technology continues to evolve, it promises to unlock new possibilities in fields as diverse as entertainment, education, healthcare, and beyond.

Example

In many situations, data may include both images and text that need to be analyzed together to gain comprehensive insights. To effectively process and integrate these different data types, you can utilize a multi-modal processing pipeline in SnapLogic. This approach allows the Generative AI model to simultaneously analyze data from both sources, maintaining the integrity of each modality.

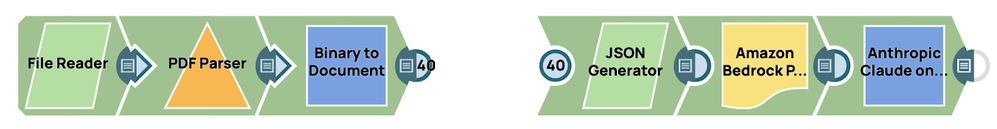

This pipeline is composed of two distinct stages. The first stage focuses on extracting images from the source data and converting them into base64 format. The second stage involves generating a prompt using advanced prompt engineering techniques, which is then fed into the Large Language Model (LLM). The visual representation of this process is divided into two parts, as shown in the picture above.

Extract the image from the source

- Add the File Reader Snap: Drag and drop the “File Reader” Snap onto the designer.

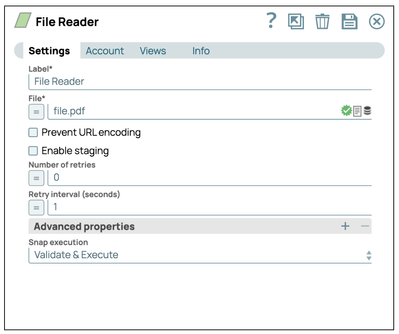

- Configure the File Reader Snap: Click on the “File Reader” Snap to access its settings panel. Then, select a file that contains images. In this case, we select a PDF file.

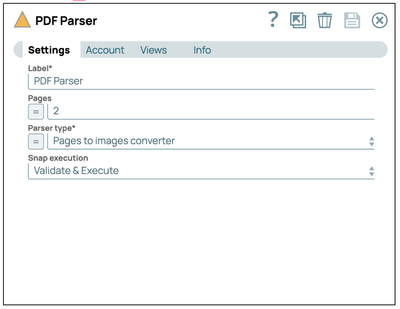

- Add the PDF Parser Snap: Drag and drop the “PDF Parser” Snap onto the designer and set the parser type to “Pages to images converter”.

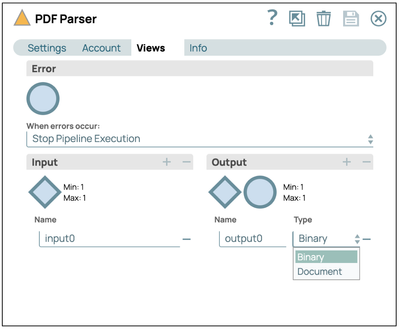

- Configure views: Click on the “Views” tab and then select the output to be “Binary”.

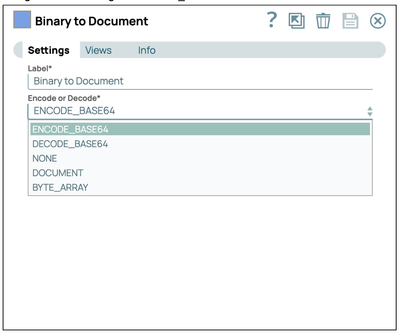

- Convert to Base64: Add a “Binary to Document” Snap and connect it to the PDF Parser Snap. Then, set the encoding to ENCODE_BASE64.

Construct the prompt and send it to the GenAI

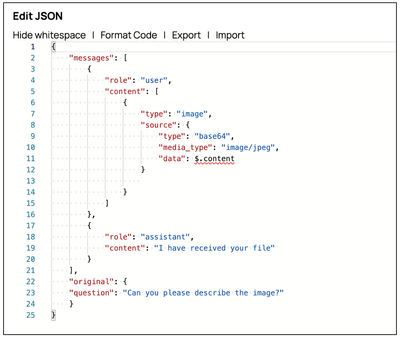

- Add a JSON Generator Snap: Drag the JSON Generator Snap and connect it to the preceding Mapper Snap. Then, click “Edit JSON” to modify the JSON string in the JSON editor mode. Claude on AWS accepts images in a message when you set the source attribute inside a content entry. You can construct the image prompt as demonstrated in the screenshot.

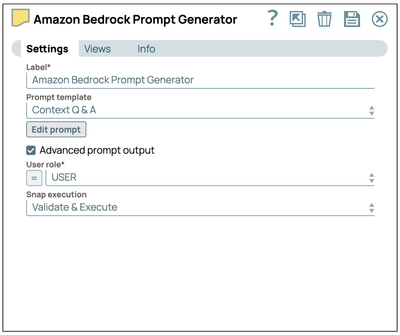

- Provide instructions with the Prompt Generator: Add the Prompt Generator Snap and connect it to the JSON Generator Snap. Next, select the “Advanced Prompt Output” checkbox to enable the advanced prompt payload. Finally, click “Edit Prompt” to enter your specific instructions.

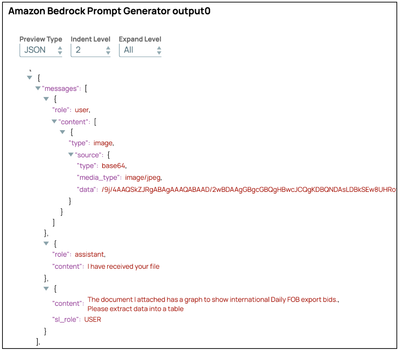

The advanced prompt output will be structured as an array of messages, as illustrated in the screenshot below.

- Send to GenAI: Add the Anthropic Claude on AWS Messages Snap and enter your credentials for the Amazon Bedrock service. Ensure that the “Use Message Payload” checkbox is selected, and then configure the message payload using $messages, which is the output from the previous Snap.

After completing these steps, you can process the image using the LLM independently. This approach allows the LLM to focus on extracting detailed information from the image. Once the image has been processed, you can then combine this data with other sources, such as text or structured data, to generate a more comprehensive and accurate analysis. This multi-modal integration ensures that the insights derived from different data types are effectively synthesized, leading to richer and more precise results.
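For readers who want to see the shape of the payload outside the designer, here is a rough Python equivalent of the Base64 and message-construction steps. The JSON layout follows the image content block of the Anthropic Messages API; the exact JSON in the author's screenshot is not visible here, so treat the field layout, the `build_image_message` helper, and the placeholder bytes as assumptions.

```python
import base64
import json


def build_image_message(image_bytes: bytes, media_type: str, instruction: str) -> dict:
    """Build one user message holding a Base64 image followed by a text instruction."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {
                "type": "image",
                "source": {"type": "base64", "media_type": media_type, "data": encoded},
            },
            {"type": "text", "text": instruction},
        ],
    }


# Placeholder bytes standing in for a page image produced by the PDF Parser step.
fake_page = b"\x89PNG\r\n\x1a\n"
message = build_image_message(fake_page, "image/png", "Describe the chart on this page.")

# The message array plays the role of $messages in the pipeline.
payload = {"messages": [message]}
print(json.dumps(payload, indent=2))
```

The image entry comes before the text entry so that the instruction refers to content the model has already seen in the same message.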

3. Semantic Caching

To optimize both the cost and response time associated with using Large Language Models (LLMs), implementing a semantic caching mechanism is a highly effective strategy. Semantic caching involves storing responses generated by the model and reusing them when the system encounters queries with the same or similar meanings. This approach not only enhances the overall efficiency of the system but also significantly reduces the operational costs tied to model usage.

The fundamental principle behind semantic caching is that many user queries are often semantically similar, even if they are phrased differently. By identifying and caching the responses to these semantically equivalent queries, the system can bypass the need to repeatedly invoke the LLM, which is resource-intensive. Instead, the system can quickly retrieve and return the cached response, leading to faster response times and a more seamless user experience.

From a cost perspective, semantic caching directly translates into savings. Each time the system serves a response from the cache rather than querying the LLM, it avoids the computational expense associated with generating a new response. This reduction in the number of LLM invocations directly correlates with lower service costs, making the solution more economically viable, particularly in environments with high query volumes.

Additionally, semantic caching contributes to system scalability. As the demand on the LLM grows, the caching mechanism helps manage the load more effectively, ensuring that response times remain consistent even as the system scales. This is crucial for maintaining the quality of service, especially in real-time applications where latency is a critical factor.

Implementing semantic caching as part of the LLM deployment strategy offers a dual benefit: optimizing response times for end-users and minimizing the operational costs of model usage. This approach not only enhances the performance and scalability of AI-driven systems but also ensures that they remain cost-effective and responsive as user demand increases.

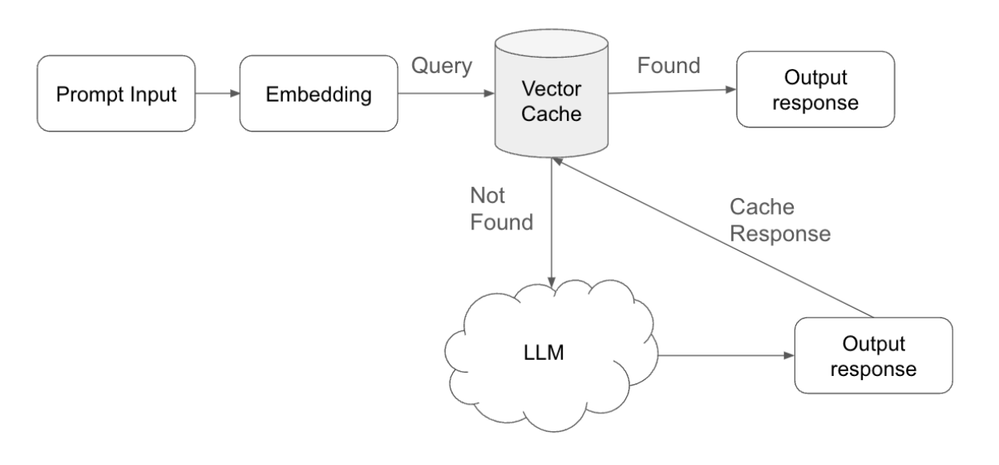

Implementation Concept for Semantic Caching

Semantic caching is a strategic approach designed to optimize both response time and computational efficiency in AI-driven systems. The implementation of semantic caching involves the following key steps:

- Query Submission and Vectorization: When a user submits a query, the system first processes this input by converting it into an embedding—a vectorized representation of the query. This embedding captures the semantic meaning of the query, enabling efficient comparison with previously stored data.

- Cache Lookup and Matching: The system then performs a lookup in the vector cache, which contains embeddings of previous queries along with their corresponding responses. During this lookup, the system searches for an existing embedding that closely matches the new query's embedding.

- Matching Threshold: A critical component of this process is the match threshold, which can be adjusted to control the sensitivity of the matching algorithm. This threshold determines how closely the new query needs to align with a stored embedding for the cache to consider it a match.

- Cache Hit and Response Retrieval: If the system identifies a match within the defined threshold, it retrieves the corresponding response from the cache. This "cache hit" allows the system to deliver the response to the user rapidly, bypassing the need for further processing. By serving responses directly from the cache, the system conserves computational resources and reduces response times.

- Cache Miss and LLM Processing: In cases where no suitable match is found in the cache—a "cache miss"—the system forwards the query to the Large Language Model (LLM). The LLM processes the query and generates a new response, ensuring that the user receives a relevant and accurate answer even for novel queries.

- Response Storage and Cache Management: After the LLM generates a new response, the system not only delivers this response to the user but also stores the response along with its associated query embedding back into the vector cache. This step ensures that if a similar query is submitted in the future, the system can serve the response directly from the cache, further optimizing the system’s efficiency.

- Time-to-Live (TTL) Adjustment: To maintain the relevance and accuracy of cached responses, the system can adjust the Time-to-Live (TTL) for each entry in the cache. The TTL determines how long a response remains valid in the cache before it is considered outdated and automatically removed. By fine-tuning the TTL settings, the system ensures that only up-to-date and contextually appropriate responses are served, thereby preventing the use of stale or irrelevant data.
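The steps above can be sketched in a few dozen lines of Python. This is a toy illustration under stated assumptions: `embed` uses bag-of-words counts instead of a real embedding model, the cache is an in-memory list rather than a vector database, and `fake_llm` stands in for the model call. The names `SemanticCache` and `answer` are hypothetical.

```python
import math
import time
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.8, ttl_seconds=3600.0, clock=time.monotonic):
        self.threshold = threshold      # minimum similarity that counts as a match
        self.ttl_seconds = ttl_seconds  # how long an entry stays valid
        self.clock = clock
        self.entries = []               # (embedding, response, stored_at)

    def lookup(self, query):
        now = self.clock()
        # Drop expired entries before matching.
        self.entries = [e for e in self.entries if now - e[2] < self.ttl_seconds]
        q = embed(query)
        best = max(self.entries, key=lambda e: cosine(q, e[0]), default=None)
        if best is not None and cosine(q, best[0]) >= self.threshold:
            return best[1]
        return None

    def store(self, query, response):
        self.entries.append((embed(query), response, self.clock()))


def answer(query, cache, llm):
    """Serve from the cache on a hit; otherwise call the LLM and store the result."""
    cached = cache.lookup(query)
    if cached is not None:
        return cached, "hit"
    response = llm(query)
    cache.store(query, response)
    return response, "miss"


calls = []

def fake_llm(query):
    calls.append(query)
    return f"answer #{len(calls)}"

cache = SemanticCache(threshold=0.7)
first = answer("how do I reset my password", cache, fake_llm)
second = answer("how do I reset my password please", cache, fake_llm)
third = answer("what is the refund policy", cache, fake_llm)
print(first, second, third)
```

Lowering the threshold raises the hit rate but also the risk of returning an answer to a question that only looks similar, so it is worth tuning against real query logs.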

Implement Semantic Caching in SnapLogic

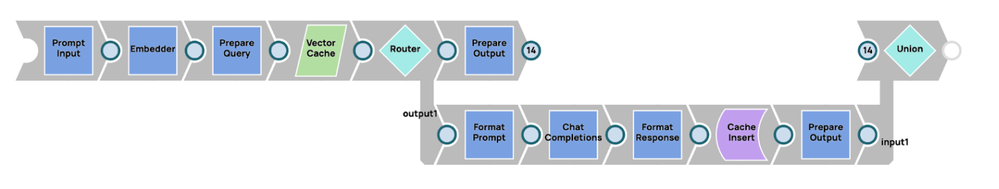

The concept of semantic caching can be effectively implemented within SnapLogic, leveraging its robust pipeline capabilities. Below is an outline of how this implementation can be achieved:

- Embedding the Query: The process begins with the embedding of the user’s query (prompt). Using SnapLogic's capabilities, an embedder, such as the Amazon Titan Embedder, is employed to convert the prompt into a vectorized representation. This embedding captures the semantic meaning of the prompt, making it suitable for comparison with previously stored embeddings.

- Vector Cache Lookup: Once the prompt has been embedded, the system proceeds to search for a matching entry in the vector cache. In this implementation, the Snowflake Vector Database serves as the vector cache, storing embeddings of past queries along with their corresponding responses. This lookup is crucial for determining whether a similar query has been processed before.

- Flow Routing with Router Snap: After the lookup, the system uses a Router Snap to manage the flow based on whether a match (cache hit) is found or not (cache miss). The Router Snap directs the workflow as follows:

  - Cache Hit: If a matching embedding is found in the vector cache, the Router Snap routes the process to immediately return the cached response to the user. This ensures rapid response times by avoiding unnecessary processing.

  - Cache Miss: If no match is found, the Router Snap directs the workflow to request a new response from the Large Language Model (LLM). The LLM processes the prompt and generates a new, relevant response.

- Storing and Responding: In the event of a cache miss, after the LLM generates a new response, the system not only sends this response to the user but also stores the new embedding and response in the Snowflake Vector Database for future use. This step enhances the efficiency of subsequent queries, as similar prompts can be handled directly from the cache.

4. Multiplexing AI Agents

Multiplexing AI agents refers to a strategy where multiple generative AI models, each specialized in a specific task, are used in parallel to address complex queries. This approach is akin to assembling a panel of experts, where each agent contributes its expertise to provide a comprehensive solution. Here are the key features of multiplexing AI agents:

- Specialization: A central advantage of multiplexing AI agents is the specialization of each agent in handling specific tasks or domains. Multiplexing ensures that responses are more relevant and accurate by assigning each AI model to a particular area of expertise. For example, one agent might be optimized for natural language understanding, another for technical problem-solving, and a third for summarizing complex data. This allows the system to handle multi-dimensional queries effectively, as each agent focuses on what it does best. This specialization significantly reduces the likelihood of errors or irrelevant responses, as the AI agents are tailored to their specific tasks.

In scenarios where a query spans multiple domains, such as a technical question with a business aspect, the system can route different parts of the query to the appropriate agent. This structured approach allows for extracting more relevant and accurate information, leading to a solution that addresses all facets of the problem.

- Parallel Processing: Multiplexing AI agents take full advantage of parallel processing capabilities. By running multiple agents simultaneously, the system can tackle different aspects of a query at the same time, speeding up the overall response time. This parallel approach enhances both performance and scalability, as the workload is distributed among multiple agents rather than relying on a single model to process the entire task.

For example, in a customer support application, one agent could handle the analysis of a customer’s previous interactions while another agent generates a response to a technical issue, and yet another creates a follow-up action plan. Each agent works on its respective task in parallel, and the system integrates their outputs into a cohesive response. This method not only accelerates problem-solving but also ensures that different dimensions of the problem are addressed simultaneously.

- Dynamic Task Allocation: In a multiplexing system, dynamic task allocation is crucial for efficiently distributing tasks among the specialized agents. A larger, general-purpose model, such as Claude 3 Sonnet on AWS, can act as an orchestrator, assessing the context of the query and determining which parts of the task should be delegated to smaller, more specialized agents. The orchestrator ensures that each task is assigned to the model best equipped to handle it.

For instance, if a user submits a complex query about legal regulations and data security, the general model can break down the query, sending legal-related questions to an AI agent specialized in legal analysis and security-related questions to a security-focused agent (for example, one built on TinyLlama or a similar small model). This dynamic delegation allows the most relevant models to be used at the right time, improving both the efficiency and accuracy of the overall response.

- Integration of Outputs: Once the specialized agents have processed their respective tasks, the system must integrate their outputs to form a cohesive and comprehensive response. This integration is a critical feature of multiplexing, as it ensures that all aspects of a query are addressed without overlap or contradiction. The system combines the insights generated by each agent, creating a final output that reflects the full scope of the user’s request.

In many cases, the integration process also includes filtering or refining the outputs to remove any inconsistencies or redundancies, ensuring that the response is logical and cohesive. This collaborative approach increases the reliability of the system, as it allows different agents to complement one another’s knowledge and expertise.

Additionally, multiplexing reduces the likelihood of hallucinations (incorrect or nonsensical outputs that can sometimes occur with single, large-scale models). By dividing tasks among specialized agents, the system ensures that each part of the problem is handled by an AI that is specifically trained for that domain, minimizing the chance of erroneous or out-of-context responses.

- Improved Accuracy and Contextual Understanding: Multiplexing AI agents contribute to improved overall accuracy by distributing tasks to models that are more finely tuned to specific contexts or subjects. This approach ensures that the AI system can better understand and address the nuances of a query, particularly when the input involves complex or highly specialized information. Each agent’s deep focus on a specific task leads to a higher level of precision, resulting in a more accurate final output.

Furthermore, multiplexing allows the system to build a more detailed contextual understanding. Since different agents are responsible for different elements of a task, the system can synthesize more detailed and context-aware responses. This holistic view is crucial for ensuring that the solution provided is not only accurate but also relevant to the specific situation presented by the user.

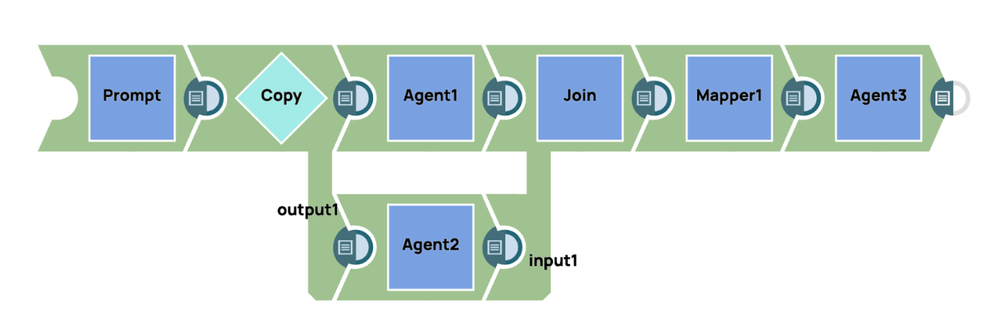

In SnapLogic, we offer comprehensive support for building advanced workflows by integrating our GenAI Builder Snap. This feature allows users to incorporate generative AI capabilities into their workflow automation processes seamlessly. By leveraging the GenAI Builder Snap, users can harness the power of artificial intelligence to automate complex decision-making, data processing, and content generation tasks within their existing workflows. This integration provides a streamlined approach to embedding AI-driven functionalities, enhancing both efficiency and precision across various operational domains. For instance, users can design workflows where the GenAI Builder Snap collaborates with other SnapLogic components, such as data pipelines and transformation processes, to deliver intelligent, context-aware automation tailored to their unique business needs.

In the example pipelines, the system sends a prompt simultaneously to multiple AI agents, each with its specialized area of expertise. These agents independently process the specific aspects of the prompt related to their specialization. Once the agents generate their respective outputs, the results are then joined together to form a cohesive response. To further enhance the clarity and conciseness of the final output, a summarization agent is employed. This summarization agent aggregates and refines the detailed responses from each specialized agent, distilling the information into a concise, unified summary that captures the key points from all the agents, ensuring a coherent and well-structured final response.
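The fan-out, join, and summarize flow described above can be sketched with Python's standard thread pool. The three agent functions and `summarizer_agent` are stubs that stand in for LLM calls; their names and output format are assumptions for illustration, not the pipeline's actual Snaps.

```python
from concurrent.futures import ThreadPoolExecutor


# Stub agents: each would normally call a model specialized for its domain.
def technical_agent(prompt):
    return f"technical notes on: {prompt}"

def business_agent(prompt):
    return f"business impact of: {prompt}"

def legal_agent(prompt):
    return f"legal considerations for: {prompt}"


AGENTS = {
    "technical": technical_agent,
    "business": business_agent,
    "legal": legal_agent,
}


def summarizer_agent(outputs):
    """Stub summarizer: merges the agents' outputs into one ordered summary."""
    lines = [f"- {name}: {text}" for name, text in sorted(outputs.items())]
    return "Summary of agent findings:\n" + "\n".join(lines)


def multiplex(prompt, agents=AGENTS):
    """Send the same prompt to every agent in parallel, then join and summarize."""
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in agents.items()}
        # result() blocks until that agent finishes, so this is the join step.
        outputs = {name: future.result() for name, future in futures.items()}
    return summarizer_agent(outputs)


summary = multiplex("storing customer data in a new cloud region")
print(summary)
```

Threads suit this pattern because each agent call is mostly waiting on a remote model, so the total latency approaches that of the slowest agent rather than the sum of all of them.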

5. Multi-agent Conversation

Multi-agent conversation refers to the interaction and communication between multiple autonomous agents, typically AI systems, working together to achieve a shared goal. This framework is widely used in areas like collaborative problem-solving, multi-user systems, and complex task coordination where multiple perspectives or expertise areas are required. Unlike a single-agent conversation, where one AI handles all inputs and outputs, a multi-agent system divides tasks among several specialized agents, allowing for greater efficiency, deeper contextual understanding, and enhanced problem-solving capabilities. Here are the key features of using multi-agent conversations.

- Specialization and Expertise: Each agent in a multi-agent system is designed with a specific role or domain of expertise. This allows the system to leverage agents with specialized capabilities to handle different aspects of a task. For example, one agent might focus on natural language processing (NLP) to understand input, while another might handle complex calculations or retrieve data from external sources. This division of labor ensures that tasks are processed by the most capable agents, leading to more accurate and efficient results. Specialization reduces the likelihood of errors and allows for a deeper, domain-specific understanding of the problem.

- Collaboration and Coordination: In a multi-agent conversation, agents don’t work in isolation—they collaborate to achieve a shared goal. Each agent contributes its output to the broader conversation, sharing information and coordinating actions to ensure that the overall task is completed successfully. This collaboration is crucial when handling complex problems that require input from multiple domains. Effective coordination ensures that agents do not duplicate work or cause conflicts. Through predefined protocols or negotiation mechanisms, agents are able to work together harmoniously, producing a coherent solution that integrates their various inputs.

- Scalability: Multi-agent systems are inherently scalable, making them ideal for handling increasingly complex tasks. As the system grows in complexity or encounters new challenges, additional agents with specific skills can be introduced without overloading the system. Each agent can work independently, and the system's modular design allows for smooth expansion. Scalability ensures that the system can handle larger datasets, more diverse inputs, or more complex tasks as the environment evolves. This adaptability is essential in dynamic environments where workloads or requirements change over time.

- Distributed Decision-Making: In a multi-agent system, decision-making is often decentralized, meaning each agent has the autonomy to make decisions based on its expertise and the information available to it. This distributed decision-making process allows agents to handle tasks in parallel, without needing constant oversight from a central controller. Since agents can operate independently, decisions are made more quickly, and bottlenecks are avoided. This decentralized approach also enhances the system's resilience, as it avoids over-reliance on a single decision point and enables more adaptive and localized problem-solving.

- Fault Tolerance and Redundancy: Multi-agent systems are naturally resilient to errors and failures. Since each agent operates independently, the failure of one agent does not disrupt the entire system. Other agents can continue their tasks or, if necessary, take over the work of a failed agent. This built-in redundancy ensures the system can continue functioning even when some agents encounter issues. Fault tolerance is particularly valuable in complex systems, as it enhances reliability and minimizes downtime, allowing the system to maintain performance even under adverse conditions.

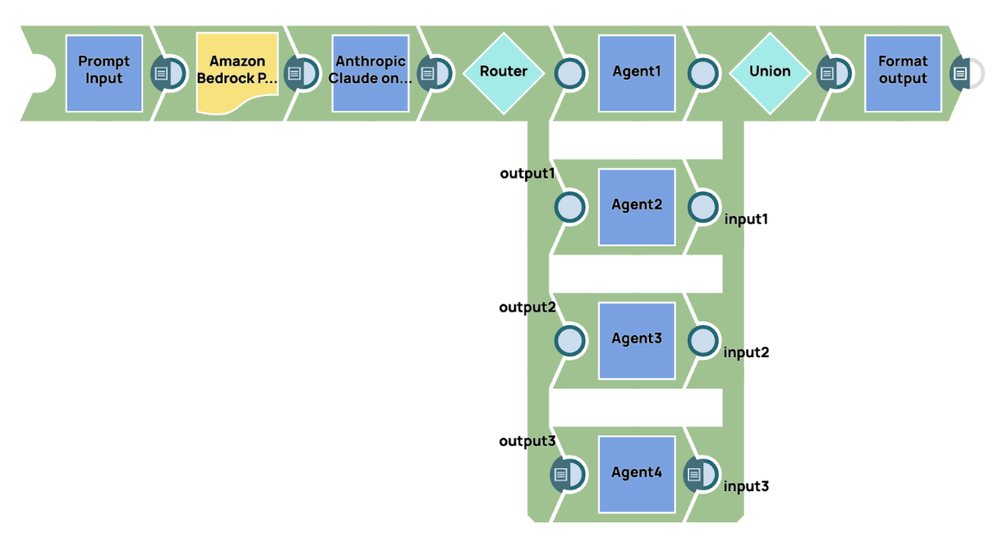

SnapLogic provides robust capabilities for integrating workflow automation with Generative AI (GenAI), allowing users to seamlessly build advanced multi-agent conversation systems by combining the GenAI Snap with other Snaps within their pipeline. This integration enables users to create sophisticated workflows where multiple AI agents, each with their specialization, collaborate to process complex queries and tasks.

In this example, we demonstrate a simple implementation of a multi-agent conversation system, leveraging a manager agent to oversee and control the workflow. The process begins by submitting a prompt to a large foundational model, which, in this case, is Claude 3 Sonnet on AWS. This model acts as the manager agent responsible for interpreting the prompt and determining the appropriate routing for different parts of the task. Based on the content and context of the prompt, the manager agent makes decisions on how to distribute the workload across specialized agents.

After the initial prompt is processed, we utilize the Router Snap to dynamically route the output to the corresponding specialized agents. Each agent is tailored to handle a specific domain or task, such as data analysis, natural language processing, or knowledge retrieval, ensuring that the most relevant and specialized agent addresses each part of the query.

Once the specialized agents have completed their respective tasks, their outputs are gathered and consolidated. The system then sends the final, aggregated result to the output destination. This approach ensures that all aspects of the query are addressed efficiently and accurately, with each agent contributing its expertise to the overall solution.
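A simplified view of the manager, router, and consolidation stages might look like the sketch below. The manager here is a keyword matcher standing in for the foundational model's routing decision, and the agent names, keywords, and `run_conversation` helper are all hypothetical.

```python
# Keywords the stub manager uses to pick agents. A real manager agent would
# be an LLM that returns the route names instead.
ROUTING_KEYWORDS = {
    "data_analysis": ("average", "trend", "statistics"),
    "knowledge_retrieval": ("policy", "documentation", "lookup"),
}


def manager_agent(prompt):
    """Decide which specialized agents should handle the prompt."""
    lowered = prompt.lower()
    routes = [
        name
        for name, keywords in ROUTING_KEYWORDS.items()
        if any(keyword in lowered for keyword in keywords)
    ]
    return routes or ["general"]


# Stub specialized agents keyed by route name.
AGENTS = {
    "data_analysis": lambda p: f"[data_analysis] computed figures for: {p}",
    "knowledge_retrieval": lambda p: f"[knowledge_retrieval] found references for: {p}",
    "general": lambda p: f"[general] answered: {p}",
}


def run_conversation(prompt):
    """Route the prompt (the Router step), run each chosen agent, and consolidate."""
    routes = manager_agent(prompt)
    results = [AGENTS[route](prompt) for route in routes]
    return {"routes": routes, "result": "\n".join(results)}


outcome = run_conversation("What is the average order value and the refund policy?")
print(outcome["routes"])
print(outcome["result"])
```

Having the manager return route names, rather than calling agents itself, keeps the routing decision separate from execution, which is the same split the Router Snap provides in the pipeline.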

The flexibility of SnapLogic’s platform, combined with the integration of GenAI models and Snaps, makes it easy for users to design, scale, and optimize complex multi-agent conversational workflows. By automating task routing and agent collaboration, SnapLogic enables more intelligent, scalable, and context-aware solutions for addressing a wide range of use cases, from customer service automation to advanced data processing.

6. Retrieval-Augmented Generation (RAG)

To enhance the specificity and relevance of responses generated by a Generative AI (GenAI) model, it is crucial to provide the model with sufficient context. Contextual information helps the model understand the nuances of the task at hand, enabling it to generate more accurate and meaningful outputs. However, in many cases, the amount of context needed to fully inform the model exceeds the token limit that the model can process in a single prompt. This is where a technique known as Retrieval-Augmented Generation (RAG) becomes particularly valuable.

RAG is designed to optimize the way context is fed into the GenAI model. Rather than attempting to fit all the necessary information into the limited input space, RAG utilizes a retrieval mechanism that dynamically sources relevant information from an external knowledge base. This approach allows users to overcome the token limit challenge by fetching only the most pertinent information at the time of query generation, ensuring that the context provided to the model remains focused and concise.

The RAG framework can be broken down into two primary phases:

- Embedding Knowledge into a Vector Database: In the initial phase, the relevant content is embedded into a vector space using a machine learning model that transforms textual data into a format conducive to similarity matching. This embedding process effectively converts text into vectors, making it easier to store and retrieve later based on its semantic meaning. Once embedded, the knowledge is stored in a vector database for future access.

In SnapLogic, embedding knowledge into a vector database can be accomplished through a streamlined pipeline designed for efficiency and scalability. The process begins with reading a PDF file using the File Reader Snap, followed by extracting the content with the PDF Parser Snap, which converts the document into a structured text format. Once the text is available, the Chunker Snap is used to intelligently segment the content into smaller, manageable chunks. These chunks are specifically sized to align with the input constraints of the model, ensuring optimal performance during later stages of retrieval.

After chunking the text, each segment is processed and embedded into a vector representation, which is then stored in the vector database. This enables efficient similarity-based retrieval, allowing the system to quickly access relevant pieces of information as needed. By utilizing this pipeline in SnapLogic, users can easily manage and store large volumes of knowledge in a way that supports high-performance, context-driven AI applications.

- Retrieving Context through Similarity Matching: When a query is received, the system performs similarity matching to retrieve the most relevant content from the vector database. By evaluating the similarity between the embedded query and the stored vectors, RAG identifies the most pertinent pieces of information, which are then used to augment the input prompt. This step ensures that the GenAI model receives focused and contextually enriched data, allowing it to generate more insightful and accurate responses.

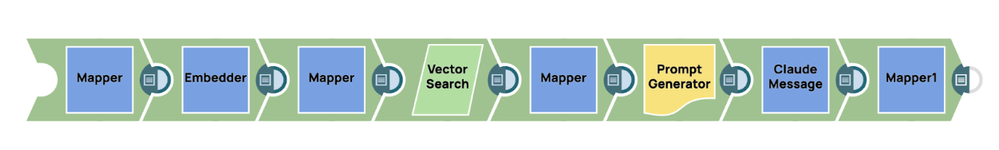

To retrieve relevant context from the vector database in SnapLogic, users can leverage an embedder Snap, such as the AWS Titan Embedder, to transform the incoming prompt into a vector representation. This vector serves as the key for performing a similarity-based search within the vector database where the previously embedded knowledge is stored. The vector search mechanism efficiently identifies the most relevant pieces of information, ensuring that only the most contextually appropriate content is retrieved.

Once the pertinent knowledge is retrieved, it can be integrated into the overall prompt-generation process. This is typically achieved by feeding the retrieved context into a prompt generator Snap, which structures the information in a format optimized for use by the Generative AI model. In this case, the final prompt, enriched with the relevant context, is sent to the GenAI Snap, such as Anthropic Claude within the AWS Messages Snap. This approach ensures that the model receives highly specific and relevant information, ultimately enhancing the accuracy and relevance of its generated responses.
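To make the two phases concrete, here is a minimal, self-contained sketch of chunking, embedding, similarity search, and prompt assembly. Everything in it is an assumption for illustration: `embed` is a toy word-count embedding standing in for a real embedder such as Titan, `VectorStore` is an in-memory list rather than a vector database, and the prompt template in `build_prompt` is made up.

```python
import math
import re
from collections import Counter
from typing import List

def chunk_text(text: str, max_words: int = 12) -> List[str]:
    """Split text into fixed-size word chunks (a stand-in for the Chunker Snap)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class VectorStore:
    """In-memory stand-in for a vector database."""
    def __init__(self) -> None:
        self.rows = []  # list of (chunk, vector) pairs

    def add(self, chunk: str) -> None:
        self.rows.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]

def build_prompt(question: str, context: List[str]) -> str:
    """Augment the question with retrieved context (hypothetical template)."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"

if __name__ == "__main__":
    store = VectorStore()
    for doc in [
        "Refunds are processed within five business days.",
        "Our office is closed on public holidays.",
        "Shipping is free for orders over fifty dollars.",
    ]:
        for chunk in chunk_text(doc):
            store.add(chunk)
    question = "When are refunds processed?"
    print(build_prompt(question, store.search(question, k=1)))
```

Only the top-k chunks reach the prompt, which is how the approach keeps the context within the model's token limit regardless of how large the knowledge base grows.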

By implementing RAG, users can fully harness the potential of GenAI models, even when dealing with complex queries that demand a significant amount of context. This approach not only enhances the accuracy of the model's responses but also ensures that the model remains efficient and scalable, making it a powerful tool for a wide range of real-world applications.

7. Tool Calling and Contextual Instruction

Traditional GenAI models are limited by the data they were trained on. Once trained, these models cannot access new or updated information unless they are retrained. This limitation means that without external input, models can only generate responses based on the static content within their training corpus. However, in a world where data is constantly evolving, relying on static knowledge is often inadequate, especially for tasks that require current or real-time information.

In many real-world applications, Generative AI (GenAI) models need access to real-time data to generate contextually accurate and relevant responses. For example, if a user asks for the current weather in a particular location, the model cannot rely solely on pre-trained knowledge, as this data is dynamic and constantly changing. In such scenarios, traditional prompt engineering techniques are insufficient, as they primarily rely on static information that was available at the time of the model's training. This is where the tool-calling technique becomes invaluable.

Tool calling refers to the ability of a GenAI model to interact with external tools, APIs, or databases to retrieve specific information in real-time. Instead of relying on its internal knowledge, which may be outdated or incomplete, the model can request up-to-date data from external sources and use it to generate a response that is both accurate and contextually relevant. This process significantly expands the capabilities of GenAI, allowing it to move beyond static, pre-trained content and incorporate dynamic, real-world data into its responses.

For instance, when a user asks for live weather updates, stock market prices, or traffic conditions, the GenAI model can trigger a tool call to an external API—such as a weather service, financial data provider, or mapping service—to fetch the necessary data. This fetched data is then integrated into the model’s response, enabling it to provide an accurate and timely answer that would not have been possible using static prompts alone.

Contextual instruction plays a critical role in the tool calling process. Before calling an external tool, the GenAI model must understand the nature of the user’s request and identify when external data is needed. For example, if a user asks, "What is the weather like in Paris right now?" the model recognizes that the question requires real-time weather information and that this cannot be answered based on internal knowledge alone. The model is thus programmed to trigger a tool call to a relevant weather service API, retrieve the live weather data for Paris, and incorporate it into the final response. This ability to understand and differentiate between static knowledge (which can be answered with pre-trained data) and dynamic, real-time information (which requires external tool calling) is essential for GenAI models to operate effectively in complex, real-world environments.

Use Cases for Tool Calling

- Real-Time Data Retrieval: GenAI models can call external APIs to retrieve real-time data such as weather conditions, stock prices, news updates, or live sports scores. These tool calls ensure that the AI provides up-to-date and accurate responses that reflect the latest information.

- Complex Calculations and Specialized Tasks: Tool calling allows AI models to handle tasks that require specific calculations or domain expertise. For instance, an AI model handling a financial query can call an external financial analysis tool to perform complex calculations or retrieve historical stock market data.

- Integration with Enterprise Systems: In business environments, GenAI models can interact with external systems such as CRM platforms, ERP systems, or databases to retrieve or update information in real time. For example, a GenAI-driven customer service bot can pull account information from a CRM system or check order statuses from an external order management tool.

- Access to Specialized Knowledge: Tool calling allows AI models to fetch specialized information from databases or knowledge repositories that fall outside their domain of training. For example, a medical AI assistant could call an external database of medical research papers to provide the most current treatment options for a particular condition.

Implementation of Tool Calling in Generative AI Systems

Tool calling has become an integral feature in many advanced Generative AI (GenAI) models, allowing them to extend their functionality by interacting with external systems and services. For instance, AWS Anthropic Claude supports tool calling via the Messages API, providing developers with a structured way to integrate external data and functionality directly into the model's response workflow. This capability allows the model to enhance its responses by incorporating real-time information, performing specific functions, or utilizing external APIs that provide specialized data beyond the model's training.

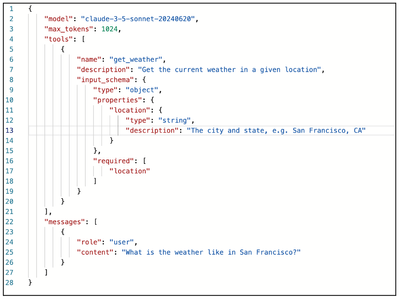

To implement tool calling with AWS Anthropic Claude, users can leverage the Messages API, which allows for integration with external systems. The tool calling mechanism is activated by sending a message with a specific "tools" parameter. This parameter defines how the external tool or API will be called, using a JSON schema to structure the function call. This approach enables the GenAI model to recognize when external input is required and request a tool call based on the instructions provided.

Implementation process

- Defining the Tool Schema: To initiate a tool call, users need to send a request with the "tools" parameter. This parameter is defined in a structured JSON schema, which includes details about the external tool or API that the GenAI model will call. The JSON schema outlines how the tool should be used, including the function name, parameters, and any necessary inputs for making the call.

For example, if the tool is a weather API, the schema might define parameters such as location and time, allowing the model to query the API with these inputs to retrieve current weather data.

- Message Structure and Request Initiation: Once the tool schema is defined, the user can send a message to AWS Anthropic Claude containing the "tools" parameter alongside the prompt or query. The model will then interpret the request and, based on the context of the conversation or task, determine if it needs the external tool specified in the schema. If a tool call is required, the model will respond with a "stop_reason" value of "tool_use". This response indicates that the model has paused its generation and is requesting a call to the external tool, rather than completing the response using only its internal knowledge.

- Tool Call Execution: When the model responds with "stop_reason": "tool_use", it signals that the external API or function should be called with the inputs the model provided. At this point, the calling application triggers the external API (as specified in the JSON schema) to fetch the required data or perform the designated task. For example, if the user asks, "What is the weather in New York right now?", and the JSON schema defines a weather API tool, the model will pause and request a call with the location parameter set to "New York" and the time parameter set to "current."

- Handling the API Response: After the external tool processes the request and returns the result, the user (or system) sends a follow-up message containing the "tool_result". This message includes the output from the tool call, which can then be integrated into the ongoing conversation or task. In practice, this might look like a weather API returning a JSON object with temperature, humidity, and weather conditions. The response is passed back to the GenAI model via a user message, which contains the "tool_result" data.

- Final Response Generation: Once the model receives the "tool_result", it processes the data and completes the response. This allows the GenAI model to provide a final answer that incorporates real-time or specialized information retrieved from the external system. In our weather example, the final response might be, "The current weather in New York is 72°F with clear skies."
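The five steps above can be traced end to end with a small offline sketch. The message and content-block shapes ("tools" with an "input_schema", a "tool_use" block, a "tool_result" block, and the "stop_reason" field) follow the Messages API conventions described in this section. Everything else is an assumption for illustration: `fake_model` is a stub that stands in for the real model call so the loop runs without network access, and `get_weather` returns hardcoded sample data rather than calling a real weather service.

```python
import json

# Step 1: tool schema sent in the "tools" parameter.
TOOLS = [{
    "name": "get_weather",
    "description": "Get the current weather for a location.",
    "input_schema": {
        "type": "object",
        "properties": {"location": {"type": "string"}},
        "required": ["location"],
    },
}]

def get_weather(location: str) -> dict:
    """Hypothetical tool implementation with hardcoded sample data."""
    return {"location": location, "temperature_f": 72, "conditions": "clear skies"}

TOOL_IMPLS = {"get_weather": get_weather}

def fake_model(messages: list, tools: list) -> dict:
    """Stub standing in for the model call; mimics the response shape only."""
    last = messages[-1]["content"]
    if isinstance(last, list) and last and last[0].get("type") == "tool_result":
        data = json.loads(last[0]["content"])
        text = (f"The current weather in {data['location']} is "
                f"{data['temperature_f']}°F with {data['conditions']}.")
        return {"stop_reason": "end_turn", "content": [{"type": "text", "text": text}]}
    return {"stop_reason": "tool_use", "content": [{
        "type": "tool_use", "id": "toolu_demo_1",
        "name": "get_weather", "input": {"location": "New York"},
    }]}

def run_conversation(question: str) -> str:
    messages = [{"role": "user", "content": question}]
    response = fake_model(messages, TOOLS)            # step 2: request with tools
    while response["stop_reason"] == "tool_use":      # step 3: model asks for a tool
        messages.append({"role": "assistant", "content": response["content"]})
        results = []
        for block in response["content"]:
            if block["type"] == "tool_use":
                output = TOOL_IMPLS[block["name"]](**block["input"])
                results.append({
                    "type": "tool_result",            # step 4: result goes back as a user message
                    "tool_use_id": block["id"],
                    "content": json.dumps(output),
                })
        messages.append({"role": "user", "content": results})
        response = fake_model(messages, TOOLS)        # step 5: model completes the answer
    return "".join(b["text"] for b in response["content"] if b["type"] == "text")

if __name__ == "__main__":
    print(run_conversation("What is the weather in New York right now?"))
```

Note that the application, not the model, executes the tool: the model only emits the tool name and inputs, and the loop continues until the stop reason is no longer "tool_use".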

SnapLogic does not yet provide native support for tool calling within the GenAI Snap Pack. However, we recognize the immense potential and value this feature can bring to users, enabling integration with external systems and services for real-time data and advanced functionalities. We are actively working on incorporating tool calling capabilities into future updates of the platform. This enhancement will further empower users to build more dynamic and intelligent workflows, expanding the possibilities of automation and AI-driven solutions. We are excited about the potential it holds and look forward to sharing these innovations soon.

8. Memory Cognition for LLMs

Most large language models (LLMs) operate within a context window limitation, meaning they can only process and analyze a finite number of tokens (words, phrases, or symbols) at any given time. This limitation poses significant challenges, particularly when dealing with complex tasks, extended dialogues, or interactions that require long-term contextual understanding. For example, if a conversation or task extends beyond the token limit, the model loses awareness of earlier portions of the interaction, leading to responses that may become disconnected, repetitive, or contextually irrelevant.

This limitation becomes especially problematic in applications where maintaining continuity and coherence across long interactions is crucial. In customer service scenarios, project management tools, or educational applications, it is often necessary to remember detailed information from earlier exchanges or to track progress over time. However, traditional models constrained by a fixed token window struggle to maintain relevance in such situations, as they are unable to "remember" or access earlier parts of the conversation once the context window is exceeded.

To address these limitations and enable LLMs to handle longer and more complex interactions, we employ a technique known as memory cognition. This technique extends the capabilities of LLMs by introducing mechanisms that allow the model to retain, recall, and dynamically integrate past interactions or information, even when those interactions fall outside the immediate context window.

Memory Cognition Components in Generative AI Applications

To successfully implement memory cognition in Generative AI (GenAI) applications, a comprehensive and structured approach is required. This involves integrating various memory components that work together to enable the AI system to retain, retrieve, and utilize relevant information across different interactions. Memory cognition enables the AI model to go beyond stateless, short-term processing, creating a more context-aware, adaptive, and intelligent system capable of long-term interaction and decision-making. Here are the key components of memory cognition that must be considered when developing a GenAI application:

- Short-Term Memory (Session Memory)

Short-term memory, commonly referred to as session memory, encompasses the model's capability to retain context and information during a single interaction or session. This component is vital for maintaining coherence in multi-turn conversations and short-term tasks. It enables the model to sustain continuity in its responses by referencing earlier parts of the conversation, thereby preventing the user from repeating previously provided information.

Typically, short-term memory is restricted to the duration of the interaction. Once the session concludes or a new session begins, the memory is either reset or gradually decayed. This ensures the model can recall relevant details from earlier in the same session, creating a more seamless and fluid conversational experience. For example, in a customer service chatbot, short-term memory allows the AI to remember a customer’s issue throughout the conversation, ensuring that the problem is consistently addressed without needing the user to restate it multiple times.

However, in large language models, short-term memory is often limited by the model's context window, which is constrained by the maximum number of tokens it can process in a single prompt. As new input is added during the conversation, older dialogue parts may be discarded or forgotten, depending on the token limit. This necessitates careful management of short-term memory to ensure that critical information is retained throughout the session.

- Long-Term Memory

Long-term memory significantly enhances the model's capability by allowing it to retain information beyond the scope of a single session. Unlike short-term memory, which is confined to a single interaction, long-term memory persists across multiple interactions, enabling the AI to recall important information about users, their preferences, past conversations, or task-specific details, regardless of the time elapsed between sessions. This type of memory is typically stored in an external database or knowledge repository, ensuring it remains accessible over time and does not expire when a session ends.

Long-term memory is especially valuable in applications that require the retention of critical or personalized information, such as user preferences, history, or recurring tasks. It allows for highly personalized interactions, as the AI can reference stored information to tailor its responses based on the user's previous interactions. For example, in virtual assistant applications, long-term memory enables the AI to remember a user's preferences—such as their favorite music or regular appointment times—and use this information to provide customized responses and recommendations.

In enterprise environments, such as customer support systems, long-term memory enables the AI to reference previous issues or inquiries from the same user, allowing it to offer more informed and tailored assistance. This capability enhances the user experience by reducing the need for repetition and improving the overall efficiency and effectiveness of the interaction. Long-term memory, therefore, plays a crucial role in enabling AI systems to deliver consistent, contextually aware, and personalized responses across multiple sessions.

- Memory Management

Dynamic memory management refers to the AI model’s ability to intelligently manage and prioritize stored information, continuously adjusting what is retained, discarded, or retrieved based on its relevance to the task at hand. This capability is crucial for optimizing both short-term and long-term memory usage, ensuring that the model remains responsive and efficient without being burdened by irrelevant or outdated information. Effective dynamic memory management allows the AI system to adapt its memory allocation in real-time, based on the immediate requirements of the conversation or task.

In practical terms, dynamic memory management enables the AI to prioritize important information, such as key facts, user preferences, or contextually critical data, while discarding or de-prioritizing trivial or outdated details. For example, during an ongoing conversation, the system may focus on retaining essential pieces of information that are frequently referenced or highly relevant to the user’s current query, while allowing less pertinent information to decay or be removed. This process ensures that the AI can maintain a clear focus on what matters most, enhancing both accuracy and efficiency.

To facilitate this, the system often employs relevance scoring mechanisms to evaluate and rank the importance of stored memories. Each piece of memory can be assigned a priority score based on factors such as how frequently it is referenced or its importance to the current task. Higher-priority memories are retained for longer periods, while lower-priority or outdated entries may be marked for removal. This scoring system helps prevent memory overload by ensuring that only the most pertinent information is retained over time.

Dynamic memory management also includes memory decay mechanisms, wherein older or less relevant information gradually "fades" or is automatically removed from storage, preventing memory bloat. This ensures that the AI retains only the most critical data, avoiding inefficiencies and ensuring optimal performance, especially in large-scale applications that involve substantial amounts of data or memory-intensive operations.

To further optimize resource usage, automated processes can be implemented to "forget" memory entries that have not been referenced for a significant amount of time or are no longer relevant to ongoing tasks. These processes ensure that memory resources, such as storage and processing power, are allocated efficiently, particularly in environments with large-scale memory requirements. By dynamically managing memory, the AI can continue to provide contextually accurate and timely responses while maintaining a balanced and efficient memory system.
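The relevance scoring and decay ideas above can be combined in a few lines. The sketch below is one possible formulation, not a prescribed one: the score is the reference count multiplied by an exponential half-life decay on the time since last use, and the `MemoryEntry` fields, the `half_life` default, and the `min_score` threshold are all assumed values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class MemoryEntry:
    text: str
    last_used: float  # timestamp in seconds of the most recent reference
    hits: int = 1     # how many times this memory has been referenced

def priority(entry: MemoryEntry, now: float, half_life: float = 3600.0) -> float:
    """Score = reference count x decay, where the decay halves every `half_life` seconds."""
    age = max(0.0, now - entry.last_used)
    return entry.hits * 0.5 ** (age / half_life)

def prune(entries: List[MemoryEntry], now: float,
          min_score: float = 0.5, half_life: float = 3600.0) -> List[MemoryEntry]:
    """Drop entries whose score fell below `min_score`; return the rest, highest priority first."""
    kept = [e for e in entries if priority(e, now, half_life) >= min_score]
    return sorted(kept, key=lambda e: priority(e, now, half_life), reverse=True)

if __name__ == "__main__":
    now = 7200.0
    memories = [
        MemoryEntry("user prefers email contact", last_used=7200.0, hits=1),
        MemoryEntry("asked about holiday hours", last_used=0.0, hits=1),
        MemoryEntry("order number is 1042", last_used=3600.0, hits=4),
    ]
    for m in prune(memories, now):
        print(round(priority(m, now), 2), m.text)
```

A frequently referenced memory survives longer than a rarely used one of the same age, which matches the behavior described above where higher-priority memories are retained for longer periods.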

Implementation of Memory Cognition in SnapLogic

SnapLogic provides robust capabilities for integrating with databases and storage systems, making it an ideal platform for creating workflows to manage memory cognition in AI applications. In the following example, we demonstrate a basic memory cognition pattern using SnapLogic to handle both short-term and long-term memory.

Overview of the Workflow

The workflow begins by embedding the prompt into a vector representation. This vector is then used to retrieve relevant memories from long-term memory storage. Long-term memory can be stored in a vector database, which is well-suited for similarity-based retrieval, or in a traditional database or key-value store, depending on the application requirements. Similarly, short-term memory can be stored in a regular database or a key-value store to keep track of recent interactions.

- Retrieving Memories

Once the prompt is embedded, we retrieve relevant information from both short-term and long-term memory systems. The retrieval process is based on similarity scoring, where the similarity score indicates the relevance of the stored memory to the current prompt. For long-term memory, this typically involves querying a vector database, while short-term memory may be retrieved from a traditional relational database or key-value store. After retrieving the relevant memories from both systems, the data is fed into a memory management module. In this example, we implement a simple memory management mechanism using a script within SnapLogic.

- Memory Management

The memory management module employs a sliding window technique, which is a straightforward yet effective way to manage memory. As new memory is added, older memories gradually fade out until they are removed from the memory stack. This ensures that the AI retains the most recent and relevant information while discarding outdated or less useful memories. The sliding window mechanism prioritizes newer or more relevant memories, placing them at the top of the memory stack, while older memories are pushed out over time.

- Generating the Final Prompt and Interacting with the LLM

Once the memory management module has constructed the full context by combining short-term and long-term memory, the system generates the final prompt. This prompt is then sent to the language model for processing. In this case, we use AWS Claude through the Messages API as the large language model (LLM) to generate a response based on the provided context.

- Updating Memory

Upon receiving a response from the LLM, the workflow proceeds to update both short-term and long-term memory systems to ensure continuity and relevance in future interactions:

- Long-Term Memory: The long-term memory is refreshed by associating the original prompt with the LLM's response. In this context, the query key corresponds to the initial prompt, while the value is the response generated by the model. This update enables the system to store pertinent knowledge that can be accessed during future interactions, allowing for more informed and contextually aware responses over time.

- Short-Term Memory: The short-term memory is updated by appending the LLM's response to the most recent memory stack. This process ensures that the immediate context of the current conversation is maintained, allowing for seamless transitions and consistency in subsequent interactions within the session.
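The sliding window and the memory update step can be sketched as follows. This is an illustrative approximation of the script-based module, not its actual code: `SlidingWindowMemory`, `build_prompt`, and `update_memories` are hypothetical names, long-term memory is a plain dictionary keyed by the prompt text instead of a vector database, and the sketch appends both the prompt and the response to short-term memory so the window holds complete turns.

```python
from collections import deque
from typing import Dict, List

class SlidingWindowMemory:
    """Short-term memory that keeps only the most recent `max_turns` entries."""
    def __init__(self, max_turns: int = 4) -> None:
        # A deque with maxlen drops the oldest entry automatically when full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def render(self) -> str:
        return "\n".join(f"{role}: {text}" for role, text in self.turns)

def build_prompt(short_term: SlidingWindowMemory,
                 long_term_hits: List[str], prompt: str) -> str:
    """Combine retrieved long-term memories, the recent window, and the new prompt."""
    recalled = "\n".join(f"- {hit}" for hit in long_term_hits)
    return (f"Relevant memories:\n{recalled}\n\n"
            f"Recent conversation:\n{short_term.render()}\n\n"
            f"user: {prompt}")

def update_memories(short_term: SlidingWindowMemory,
                    long_term: Dict[str, str], prompt: str, response: str) -> None:
    """Long-term: prompt is the key, response is the value. Short-term: append the turn."""
    long_term[prompt] = response
    short_term.add("user", prompt)
    short_term.add("assistant", response)

if __name__ == "__main__":
    short_term = SlidingWindowMemory(max_turns=2)
    long_term: Dict[str, str] = {}
    update_memories(short_term, long_term, "My order is late.", "Sorry, let me check.")
    update_memories(short_term, long_term, "It is order 1042.", "Order 1042 ships today.")
    print(build_prompt(short_term, list(long_term.values()), "Can I get a refund?"))
```

With `max_turns=2`, the first exchange has already slid out of the short-term window by the time the third prompt arrives, but it remains available through long-term memory.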

This example demonstrates how SnapLogic can be effectively used to manage memory cognition in AI applications. By integrating with databases and leveraging SnapLogic’s powerful workflow automation, we can create an intelligent memory management system that handles both short-term and long-term memory. The sliding window mechanism ensures that the AI remains contextually aware while avoiding memory overload, and AWS Claude provides the processing power to generate responses based on rich contextual understanding. This approach offers a scalable and flexible solution for managing memory cognition in AI-driven workflows.
