File Splitter

02-07-2019 05:57 AM

Team,

I have a requirement to read a file and split it dynamically, based on its size, into N files written to AWS S3. Example: sales.txt → sales1.txt, sales2.txt, sales3.txt, etc., based on the total number of records in the source file. Is there an easy way to do this?

02-07-2019 12:33 PM

This seems like a fun one to experiment with. You state “based on the total records”: does this mean you need to count the records and split them evenly among a known number of files, or do you have a maximum number of records per file and will write that many per file to an unknown number of files? I was thinking of adding a Mapper that provides a row number, then a Router where rows <= the max row count go down one output to a file and the rest go out another output that calls the pipeline again. The process would then start over with fewer records and continue until no records remain. A file counter would also be passed along and incremented on each call, so you would get file1, file2, etc. I have never tried it, but I think it's worth a shot.
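To make the idea concrete, here is a rough sketch of that logic in plain Python rather than as an actual pipeline. It is untested against SnapLogic itself; the function name `split_by_max_rows` and the `sales`/`.txt` naming are assumptions for illustration only. Each loop pass stands in for one pipeline call: the first `max_rows` rows go to the current file, and the rest are handed to the next call with the file counter incremented.

```python
from typing import List, Tuple


def split_by_max_rows(
    records: List[str],
    max_rows: int,
    base: str = "sales",   # hypothetical base file name
    ext: str = ".txt",     # hypothetical extension
) -> List[Tuple[str, List[str]]]:
    """Peel off up to max_rows records per pass until none remain."""
    if max_rows < 1:
        raise ValueError("max_rows must be at least 1")

    files: List[Tuple[str, List[str]]] = []
    file_counter = 1
    remaining = records
    while remaining:
        # Rows whose row number is <= max_rows go to the current file.
        head = remaining[:max_rows]
        # Everything else is what the "next call" would receive.
        remaining = remaining[max_rows:]
        files.append((f"{base}{file_counter}{ext}", head))
        file_counter += 1
    return files
```

One caveat with this approach: every pass handles the leftover records again, so a large file gets touched many times.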

02-07-2019 12:59 PM

Thank you. My objective is to read all the records and split them into multiple files dynamically, without re-reading the data, by passing a per-file record count (like 1000) as a variable. I need to think through the logic: how can I read a 50K-record file and get a counter of 5K to pass to the dynamic file name at run time? I was thinking along similar lines, but thought I'd check whether there is an easy way or setting to achieve this.
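The arithmetic I have in mind, sketched in plain Python (not SnapLogic expression syntax): given a 1-based row number and the per-file record count, the file index can be derived directly, so the data only needs one pass. The names `file_name_for_row`, `file_count`, and the `sales`/`.txt` pattern are made up for illustration.

```python
def file_name_for_row(
    row_number: int,
    records_per_file: int,
    base: str = "sales",   # hypothetical base file name
    ext: str = ".txt",     # hypothetical extension
) -> str:
    """Map a 1-based row number to the file it belongs in."""
    if row_number < 1 or records_per_file < 1:
        raise ValueError("row_number and records_per_file must be >= 1")
    # Rows 1..N land in file 1, rows N+1..2N in file 2, and so on.
    file_index = (row_number - 1) // records_per_file + 1
    return f"{base}{file_index}{ext}"


def file_count(total_records: int, records_per_file: int) -> int:
    """Number of files needed; the last one may be only partly filled."""
    if records_per_file < 1:
        raise ValueError("records_per_file must be >= 1")
    # Integer ceiling division without floating point.
    return (total_records + records_per_file - 1) // records_per_file
```

With a per-file count of 1000, row 1000 still belongs to `sales1.txt` and row 1001 starts `sales2.txt`.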

02-08-2019 03:04 AM

Hi Srini,

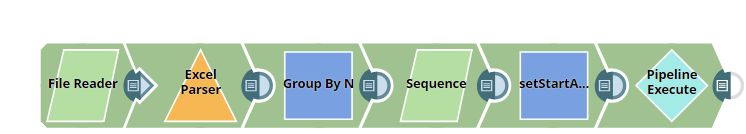

I think we can split the records using the Group By N Snap.

The group size should equal the number of records per file.

I'm attaching pipelines that might help you achieve this.

testFileSpliter_2019_02_08 (1).slp (12.5 KB)

writeFile_2019_02_08.slp (8.1 KB)
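For anyone who cannot open the attachments, the grouping idea can be sketched in plain Python. This is only an illustration of batching records into groups of n and naming one file per group; it is not the contents of the attached pipelines, and `group_by_n`, `named_batches`, and the `sales`/`.txt` naming are assumed names.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Tuple


def group_by_n(records: Iterable[str], n: int) -> Iterator[List[str]]:
    """Yield consecutive groups of up to n records, reading the input once."""
    if n < 1:
        raise ValueError("n must be at least 1")
    it = iter(records)
    while True:
        batch = list(islice(it, n))
        if not batch:
            return
        yield batch


def named_batches(
    records: Iterable[str],
    n: int,
    base: str = "sales",   # hypothetical base file name
    ext: str = ".txt",     # hypothetical extension
) -> Iterator[Tuple[str, List[str]]]:
    """Pair each group with a numbered file name: sales1.txt, sales2.txt, ..."""
    for index, batch in enumerate(group_by_n(records, n), start=1):
        yield f"{base}{index}{ext}", batch
```

Each yielded pair is what a child pipeline would receive: a file name and the records to write to it. The last group may hold fewer than n records.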

02-08-2019 05:48 AM

Thank you, Ajay. I will take a look, but it seems like it will address my issue.

-Srini
