- SnapLogic - Integration Nation

- Designing and Running Pipelines


How to pass a variable along the pipeline?


07-17-2019 01:00 PM

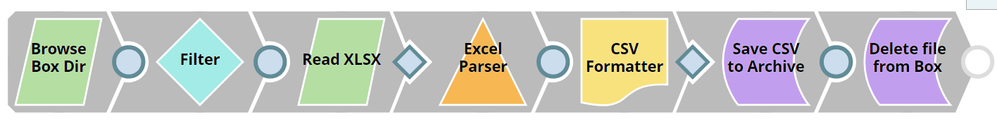

I have a pipeline that looks like this:

The Snaps are:

- List all files in a given Box directory

- Filter the files according to a mask

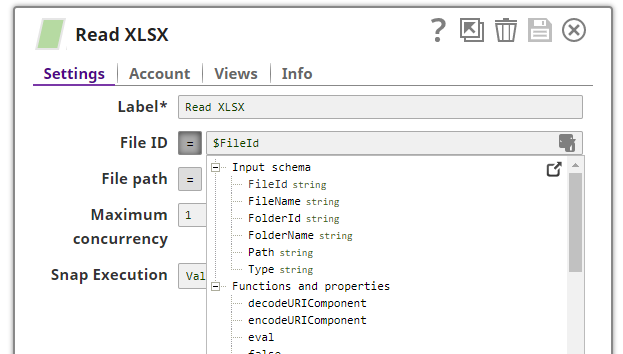

- Read the matching Excel file into the pipeline

- Convert the first Worksheet to CSV

- Write a CSV file to an archive folder in Box

- Delete the original Excel file

Everything works up to step 5, where I need to access the output fields of step 2 again: the FileName is needed to build the matching file name in the archive folder (this time with a .csv extension), and the FileId is needed in step 6 to delete the Excel file.
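Outside SnapLogic, the name mapping that step 5 needs is just an extension swap. A minimal Python sketch, assuming the step 2 output carries a `FileName` field as described above; the helper `archive_csv_name` and the sample name `20190717_Sales.xlsx` are hypothetical:

```python
from pathlib import PurePosixPath


def archive_csv_name(excel_name: str) -> str:
    """Return the archive file name: same stem, .csv extension (hypothetical helper)."""
    # with_suffix replaces only the final extension, e.g. .xlsx -> .csv
    return str(PurePosixPath(excel_name).with_suffix(".csv"))


print(archive_csv_name("20190717_Sales.xlsx"))  # 20190717_Sales.csv
```

Because `with_suffix` touches only the last extension, any dots earlier in the name (such as in a date prefix) are left unchanged.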

The business reason behind this is that the file arriving in the Box folder will have a date prefix. There is no set frequency for this file; it arrives at effectively random intervals. Therefore, I cannot hard-code the actual file name into the pipeline and must check for a new file dynamically every day.
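The date-prefix requirement can be expressed as a pattern rather than a fixed name. This Python sketch assumes a hypothetical convention of an 8-digit date followed by `_Sales.xlsx`, and uses the `FileName`/`FileId` field names from the question; the real mask used in the Filter Snap is not shown in this thread:

```python
import re

# Hypothetical mask: an 8-digit date prefix, then a fixed "_Sales.xlsx" name.
MASK = re.compile(r"\d{8}_Sales\.xlsx")


def matching_files(entries):
    """Keep only directory entries whose whole FileName fits the mask."""
    return [e for e in entries if MASK.fullmatch(e["FileName"])]


listing = [
    {"FileName": "20190717_Sales.xlsx", "FileId": "111"},
    {"FileName": "Sales.xlsx", "FileId": "222"},
    {"FileName": "notes.txt", "FileId": "333"},
]
print(matching_files(listing))
# [{'FileName': '20190717_Sales.xlsx', 'FileId': '111'}]
```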

How can I store the output fields from step 2 somewhere in memory so that the final two Snaps in the pipeline can access them?


07-17-2019 02:27 PM

Note that you are combining ALL of the Excel files found in the directory into a single CSV file. Is that really your intention here?

You’ll need to move part of the pipeline into a child pipeline and pass the file name as a parameter to the child. I’m going to guess you probably don’t want to combine all of the Excel files into one file, but rather have one CSV file per input file. Using a child pipeline will fix that issue as well.

So, I would move the “Read XLSX” snap and the snaps after it into the child pipeline and add a filename parameter. Then you can place a PipelineExecute snap after the Filter to kick off the child and pass down the file name from the document output by the Box Directory Browser.
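A plain-Python analogy of this parent/child split (not SnapLogic code): the parent loops over the filtered documents and starts one child run per document, handing it that document's fields as parameters. The function names, the `archive/` folder, and the sample values are hypothetical, and the child only logs what the real Snaps would do:

```python
from pathlib import PurePosixPath


def child_pipeline(file_name: str, file_id: str, log: list) -> None:
    """Stand-in for the child pipeline: read, convert, write, delete ONE file."""
    csv_name = str(PurePosixPath(file_name).with_suffix(".csv"))
    log.append(f"read {file_name}")
    log.append(f"write archive/{csv_name}")
    log.append(f"delete id {file_id}")


def parent_pipeline(filtered_docs, log: list) -> None:
    """Stand-in for Filter -> Pipeline Execute: one child run per document."""
    for doc in filtered_docs:
        # Each run receives its own parameter values, so nothing is shared.
        child_pipeline(doc["FileName"], doc["FileId"], log)


log = []
parent_pipeline([{"FileName": "20190717_Sales.xlsx", "FileId": "111"}], log)
print("\n".join(log))
```

Because each child run gets its own parameter values, a second matching file simply produces a second child run with its own CSV instead of being merged into the first.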


07-17-2019 02:40 PM

Thanks for the quick reply.

No, the mask applied picks up only one file. I understand that I might need to handle multiple files in future, but that’s unrelated to my question here.

Just to clarify what you’re saying: the only way to accomplish this is with a Child Pipeline and a PipelineExecute Snap, and there is no way to pass the variable “up” into the Pipeline’s “session”.

Is that correct?


07-17-2019 02:45 PM

Correct, there is no way to pass a variable “up”. To elaborate on that a bit, the snaps all run in parallel, so there would be a race between the snap that is passing the variable “up” and the snap that is trying to read the variable. For example, if there were two files coming in, the Write snap might see the first file name in one execution and the second file name in another execution.
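The race can be made concrete with a deterministic Python model. It is not how SnapLogic executes snaps; it just fixes one interleaving that concurrently running snaps could produce, where the Filter has already handled both documents before the Write stage reads a shared value. The `session` dict stands in for the hypothetical pipeline-wide variable:

```python
# Deterministic model of one possible interleaving (not SnapLogic internals).
session = {}  # hypothetical pipeline-wide "session" variable

docs = [{"FileName": "a.xlsx"}, {"FileName": "b.xlsx"}]

# Filter stage: passes documents downstream and records the name "up".
passed = []
for doc in docs:
    session["FileName"] = doc["FileName"]
    passed.append(doc)

# Write stage, shared-variable version: every document sees the last name.
from_session = [session["FileName"] for _ in passed]

# Write stage, per-document version: each document carries its own name.
from_document = [doc["FileName"] for doc in passed]

print(from_session)   # ['b.xlsx', 'b.xlsx']
print(from_document)  # ['a.xlsx', 'b.xlsx']
```

Keeping the value inside each document (or passing it as a child-pipeline parameter) ties it to the file it describes, whatever order the stages happen to run in.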


07-17-2019 02:53 PM

I can see how that would happen, but could that not be controlled with a ForEach Snap that serializes the processing?

The use case you are thinking of would require a child pipeline because of the race condition; however, it is not a requirement for the pipeline I am developing, since (a) there will only be one file, and (b) it is acceptable to stop processing and throw an error if there is more than one file.
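The constraint in (a) and (b) can be sketched as a guard that runs before the later steps use the file fields. A minimal Python version, assuming the filtered documents carry `FileName` and `FileId`; treating zero matches as "nothing to do" rather than an error is an assumption, since the file does not arrive every day:

```python
from typing import List, Optional, Tuple


def single_file(matches: List[dict]) -> Optional[Tuple[str, str]]:
    """Return (FileName, FileId) of the only matching file.

    Returns None when nothing matched (assumed: no new file today) and
    raises when more than one file matched, so processing stops.
    """
    if not matches:
        return None
    if len(matches) > 1:
        raise ValueError(f"expected one matching file, found {len(matches)}")
    doc = matches[0]
    return doc["FileName"], doc["FileId"]


print(single_file([{"FileName": "20190717_Sales.xlsx", "FileId": "111"}]))
# ('20190717_Sales.xlsx', '111')
```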
