I have run into a problem. Is there a way to find out what is causing this?

01-28-2018 01:46 PM

CSV Parser

8% 5.8MB/s

input0 1190478026 1 35MB/s 0.0 Doc/s

output0 0 5774472 8.0K Doc/s

NEW CSV CONVERTER

55% 484MB/s

input0 0 5774472 12K Doc/s

output0 0 5774471 8.1K Doc/s

SORT_OLD_INPUT

8% 18MB/s

input0 0 5774471 7.2K Doc/s

output0 0 3906382 1.7K Doc/s

Pipeline is no longer running

Reason:

Lost contact with Snaplex node while the pipeline was running

It LOOKS like the sort is running VERY slowly, and the pipeline eventually loses contact with the node and dies, but I have no way to be sure.

01-29-2018 09:52 AM

Sounds like the pipeline is consuming a lot of memory and overloading the node. You might want to contact support to get extra assistance.

01-29-2018 11:29 AM

So it does the whole sort in memory? Yeah, that process is going to consume a lot of memory if it does the whole sort in memory. If it were 100% efficient, it would take about 4GB, but sorts aren’t 100% efficient, so it could be more like 6GB, or even more, just for the sort. That is probably about 9% of the space on most ETL systems I have used. I figured it would offload stuff to temporary files or something similar.

The ZIP files it is reading from are another 0.5GB.

Ironically, I did that to save time on later processing for tests. But the customer wanted me to end up with full inserts, and that requires reducing one file to almost nothing, so the size is cut in half, which I guess means THAT might work. But that is just for now.

Is there a way to do a sort on the drive, rather than in memory? A lot of systems today sort data in chunks, write each chunk to a file, and then do an ordered merge. It is still pretty fast, and ends up with ordered data, but can work on systems having only a small amount of memory. That used to be a BIG concern. It isn’t so big now, but on a shared system, or one managed by someone else, it could be a nuisance. Otherwise, maybe I will have to check if we can add a database into the mix.

01-30-2018 12:35 PM

The Sort Snap uses an external merge sort algorithm like the one you described. It sorts a chunk of documents in memory, writes the sorted chunk to disk, and finally merges all of the chunks. The size of a chunk is controlled by the Sort Snap “Maximum Memory %” property. For example, if the JVM max heap size is 10GB and Maximum Memory % is set to 10%, then documents will be separated into 1GB chunks. Note that 1GB is not the raw input data size: it includes the Java overhead associated with objects, such as pointers.

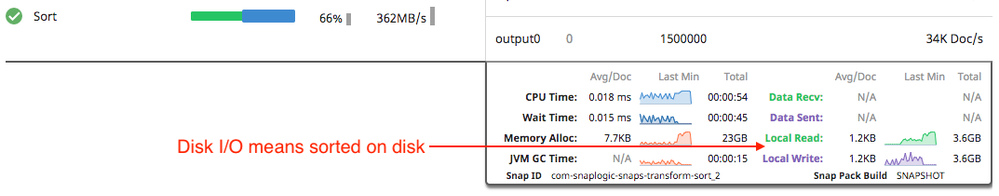
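To make the chunk-then-merge idea concrete, here is a minimal sketch of an external merge sort in Python. It is not the Sort Snap's implementation: the function name `external_sort` and the `chunk_size` parameter are hypothetical, and the chunk budget here counts records rather than a percentage of the JVM heap.

```python
import heapq
import os
import tempfile
from itertools import islice


def _read_lines(f):
    # Stream one sorted chunk file back, without trailing newlines.
    for line in f:
        yield line.rstrip("\n")


def external_sort(records, chunk_size):
    """Yield `records` (strings without newlines) in sorted order.

    `chunk_size` is a hypothetical stand-in for the memory budget:
    it is the number of records sorted in memory at one time.
    """
    records = iter(records)
    chunk_paths = []
    try:
        # Phase 1: sort one chunk in memory, then spill it to a temp file.
        while True:
            chunk = sorted(islice(records, chunk_size))
            if not chunk:
                break
            fd, path = tempfile.mkstemp(suffix=".chunk")
            with os.fdopen(fd, "w") as f:
                f.writelines(rec + "\n" for rec in chunk)
            chunk_paths.append(path)

        # Phase 2: ordered (k-way) merge of the sorted chunk files.
        files = [open(p) for p in chunk_paths]
        try:
            yield from heapq.merge(*(_read_lines(f) for f in files))
        finally:
            for f in files:
                f.close()
    finally:
        # Remove the temporary chunk files once the merge is done.
        for p in chunk_paths:
            os.remove(p)
```

For example, `list(external_sort(["pear", "apple", "fig", "kiwi", "date"], chunk_size=2))` sorts at most two records in memory at a time, writes three chunk files, and merges them into one ordered stream.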

If the Sort Snap “Maximum Memory %” property is set too high, the entire input will be sorted in memory. You can confirm this by looking at the pipeline execution stats: if you do not see significant disk I/O for the Sort Snap, the input was sorted in memory.

If the Sort Snap is the culprit, lowering this property value could help. The trade-off is that a lower value results in more chunks and therefore more intermediate merge steps.
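The arithmetic behind that trade-off can be sketched roughly as follows. The helper `chunk_plan` is hypothetical, and the 6GB in-memory size is only the rough estimate from earlier in the thread, not a measured value. The sketch also assumes the chunk budget is simply heap size times the percentage, as in the 10GB / 10% example above.

```python
import math


def chunk_plan(max_heap_gb, max_memory_pct, in_memory_size_gb):
    """Return (chunk size in GB, approximate number of chunks).

    Assumes chunk budget = JVM max heap * Maximum Memory % / 100.
    """
    chunk_gb = max_heap_gb * max_memory_pct / 100
    chunks = math.ceil(in_memory_size_gb / chunk_gb)
    return chunk_gb, chunks


# 10GB heap, data assumed to occupy about 6GB as Java objects.
for pct in (60, 10, 5):
    chunk_gb, chunks = chunk_plan(10, pct, 6)
    print(f"{pct}% -> {chunk_gb}GB per chunk, about {chunks} chunk(s)")
```

Under these assumptions, 60% keeps everything in a single in-memory chunk, 10% yields about 6 chunks, and 5% yields about 12, so halving the percentage roughly doubles the number of chunks to merge.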
