- SnapLogic - Integration Nation

- Designing and Running Pipelines

Snowflake Bulk Load

01-24-2020 11:04 PM

Hi,

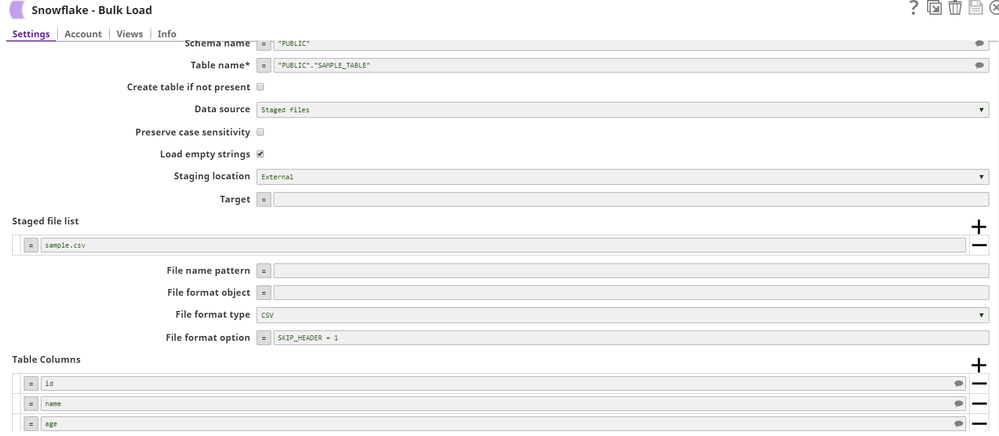

I am trying to use the Snowflake Bulk Load Snap to read data from an external S3 location and load it into a Snowflake table.

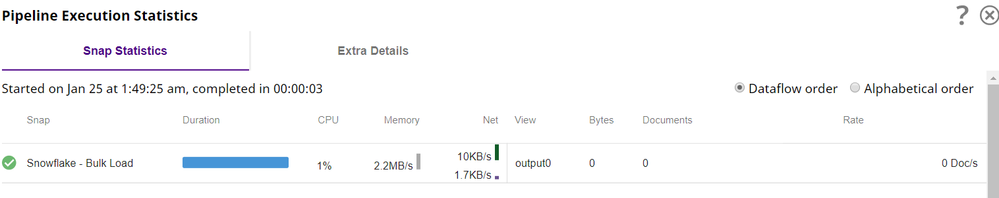

The challenge is this: in the Snowflake account, I have specified the external bucket name and provided the keys, but when I run the pipeline, I see that 0 records are loaded.

Note: Snowflake is on one AWS account and the data is on another AWS account. If I use the S3 File Reader Snap, I am able to see the data.

Can anyone help me with this?

Error:

Bulk Load Snap settings:

02-04-2020 06:02 PM

Thank you.

I was able to fix it. What if I want to use an external staging file (S3) instead of the S3 File Reader?

02-04-2020 06:28 PM

As long as the file contains the column headers in the same case as in the Snowflake database table, it should not be a problem in most cases.

In your case, you said that the staged data and Snowflake are on different AWS accounts. We have to figure out whether there are any gaps there.

In the Snowflake account, did you provide the Amazon S3 credentials for the staged data?
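For context, Snowflake resolves unquoted identifiers to uppercase, so a table created with unquoted column names typically expects headers such as ID and NAME. A minimal Python sketch, assuming a small CSV file with a single header row, that upper-cases only the header and leaves the data rows untouched (the function name `uppercase_csv_header` is hypothetical, not part of SnapLogic or Snowflake):

```python
import csv
import io


def uppercase_csv_header(text: str) -> str:
    """Return the CSV text with only its first (header) row upper-cased.

    Hypothetical helper; assumes the whole file fits in memory.
    """
    rows = list(csv.reader(io.StringIO(text)))
    if rows:
        # Upper-case column names so they match unquoted Snowflake identifiers
        rows[0] = [name.upper() for name in rows[0]]
    out = io.StringIO()
    # Use "\n" so the output line endings match a typical Unix-style input file
    csv.writer(out, lineterminator="\n").writerows(rows)
    return out.getvalue()
```

For example, `uppercase_csv_header("id,name\n1,alice\n")` returns `"ID,NAME\n1,alice\n"`. If the table was instead created with quoted, mixed-case column names, the headers would need to match that exact case rather than being upper-cased.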

02-04-2020 07:41 PM

Yes, I am using the same credentials that work when loading data from S3 into the Snowflake table through the “Input view” of the Bulk Load Snap.

Note: I have changed the headers in the input file to all caps.

02-07-2020 10:38 AM

@venkat

The pipeline appeared successful even though no commands were executed on Snowflake because the input view of the Bulk Load Snap was open but did not receive any input documents. If you add a dummy JSON Generator with some values upstream, the Snap will execute. Unfortunately, the minimum number of input views for the Snowflake Bulk Load Snap is 1, which is why this workaround is needed. There is an internal ticket to make the minimum number of input views 0.

Also, in the Snowflake account, the S3 folder field should contain only the name of the folder, not the full path starting with “http”. For example, if your folder is test, just enter “test” in the folder field.
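If the folder value was copied from a browser or the AWS console, it can be reduced to the bare folder name before being entered in the account. A minimal Python sketch, assuming the value is either already a folder name, an `s3://bucket/folder` URI, or a virtual-hosted-style `https://bucket.s3.amazonaws.com/folder` URL (the helper `s3_folder_name` is hypothetical, not a SnapLogic or AWS function):

```python
from urllib.parse import urlparse


def s3_folder_name(value: str) -> str:
    """Reduce an S3 URI or virtual-hosted-style URL to the folder part only.

    Hypothetical helper. Path-style URLs such as
    https://s3.amazonaws.com/bucket/folder are NOT handled and would
    return "bucket/folder".
    """
    value = value.strip()
    if "://" in value:
        # s3://bucket/folder              -> path "/folder"
        # https://bucket.s3.amazonaws.com/f -> path "/f"
        value = urlparse(value).path
    # Drop leading/trailing slashes so "/test/" becomes "test"
    return value.strip("/")
```

For example, `s3_folder_name("https://my-bucket.s3.amazonaws.com/test/")` and `s3_folder_name("s3://my-bucket/test")` both return `"test"`, while a plain `"test"` is returned unchanged.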

02-07-2020 05:56 PM

It worked, thank you.