- Re: Transformation rules inside mapper snap not ex...

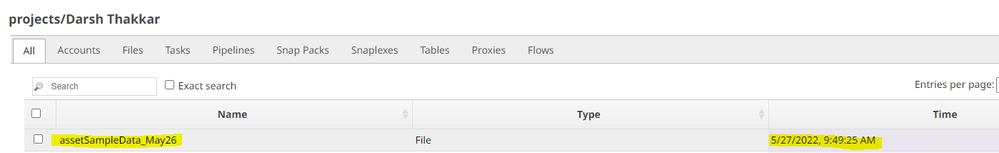

05-27-2022 07:01 AM

Hi Team,

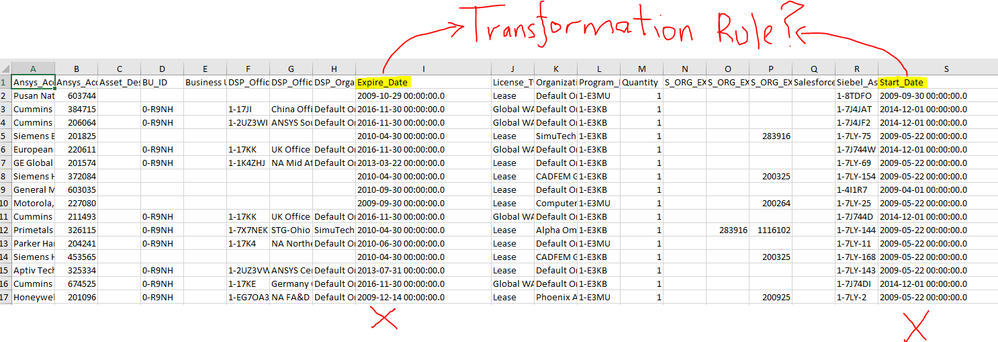

I have found that one of the transformation rules applied to a date field is not reflected in the final output file (an Excel file) when the pipeline is executed.

The output preview on the snap shows the expected data, and pipeline validation also shows the expected data. However, the file generated after pipeline execution does not contain the transformed values.

I have tried the following:

- validated the pipeline via Shift+Validate

- tweaked the transformation rule, saved the pipeline, and checked the output in the preview

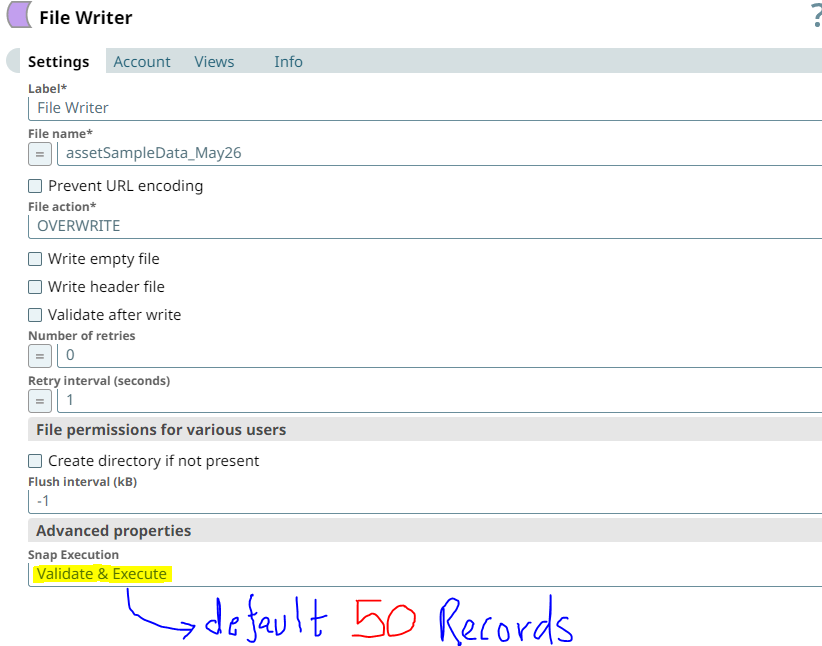

- validated the preview file (with default 50 records)

- deleted the file generated by “Validate & Execute” on the File Writer snap (default 50 records) so that a fresh file would be written

- executed the pipeline multiple times with different file names

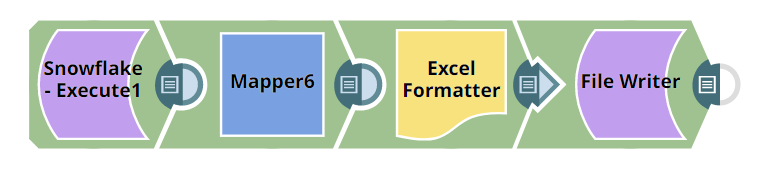

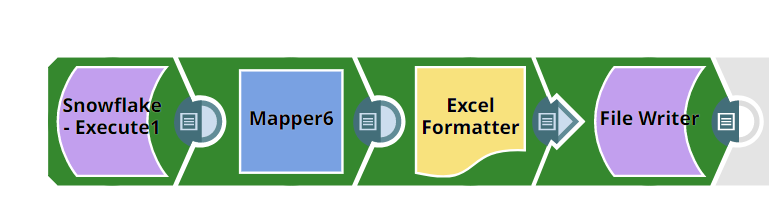

It’s a simple pipeline consisting of

Snowflake Execute --> Mapper --> Excel Formatter --> File Writer

Sharing some screenshots to help explain the issue:

(1) Pipeline with 4 snaps

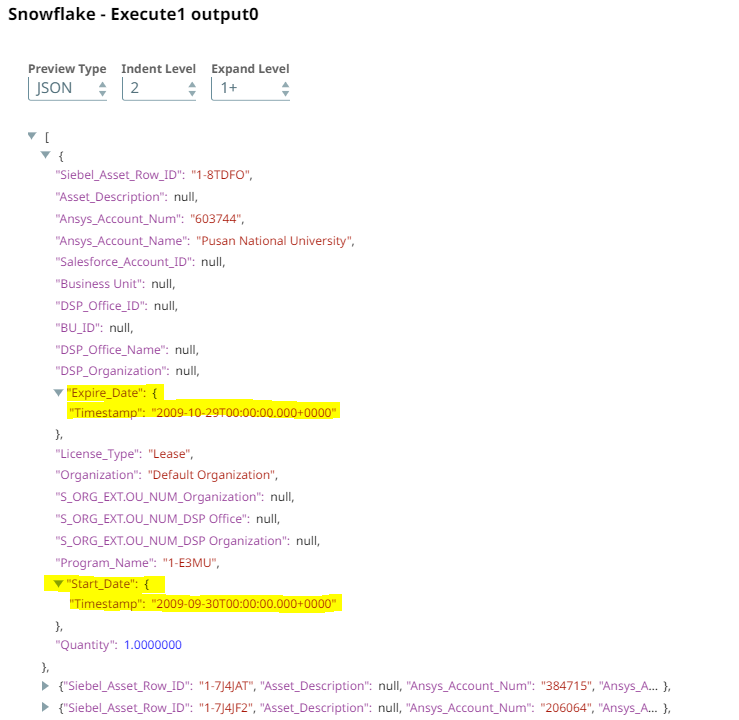

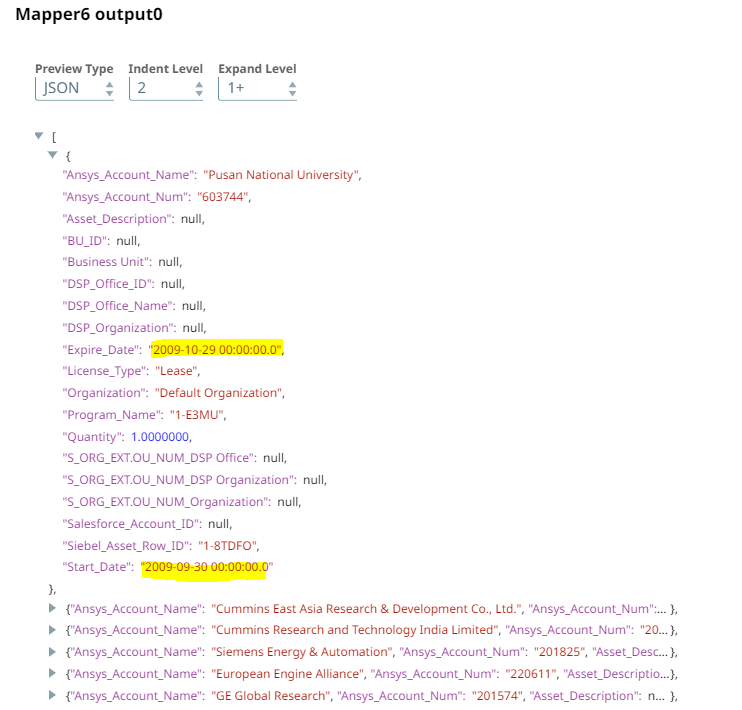

(2) Snowflake Execute output preview in JSON format (the fields highlighted in yellow need transformation)

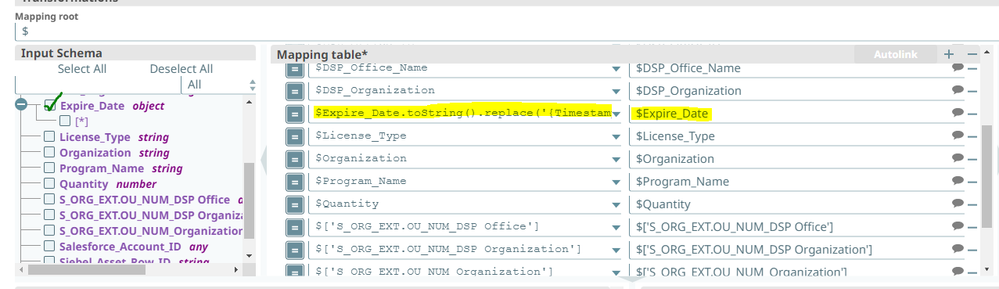

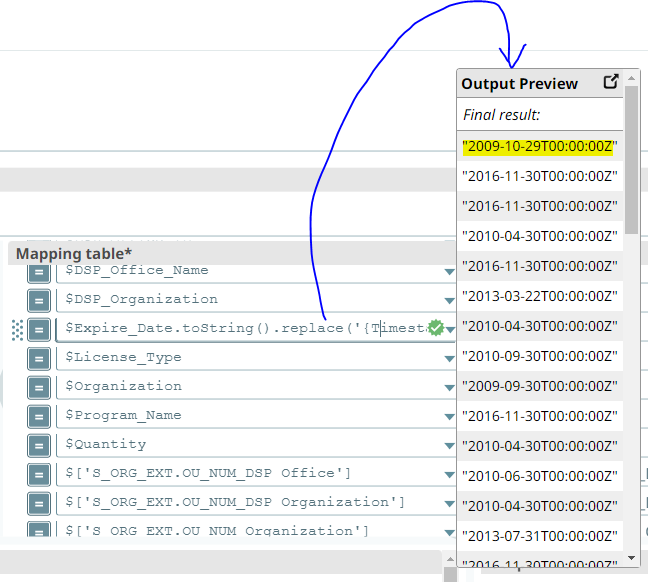

(3) Transformation Rules on Date fields

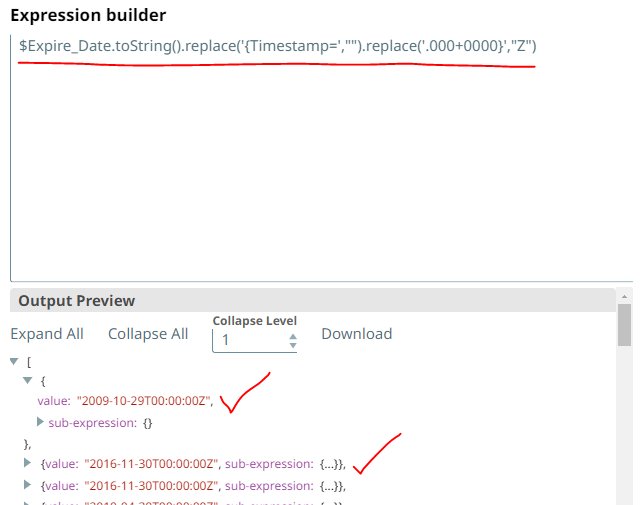

(4) Transformation Rule: $Expire_Date.toString().replace('{Timestamp=',"").replace('.000+0000}',"Z")

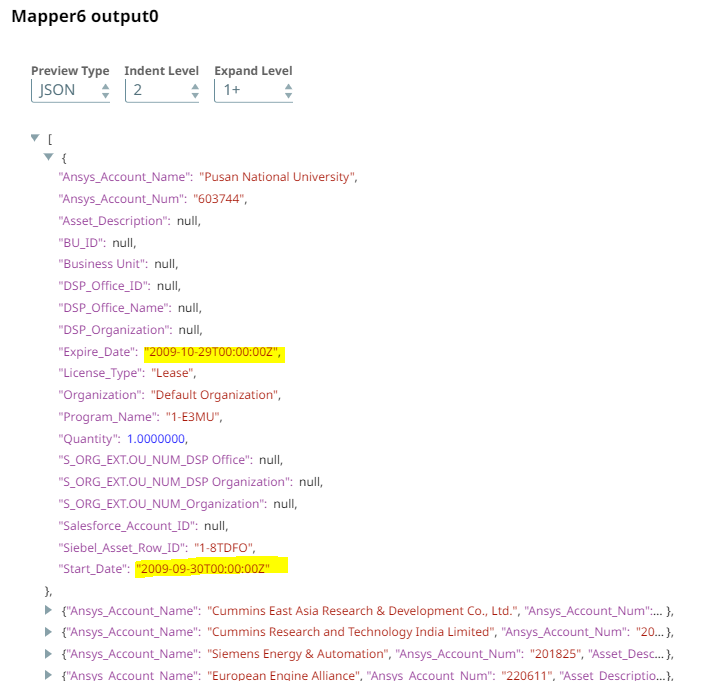

(5) Output preview after transformational rule

(6) Mapper output preview shows different data

→ I’ve observed that the transformation rules sometimes don’t take effect, so I open the Mapper, modify the rule slightly, and then restore the original rule so that I can save it (Save is not enabled unless something has changed, hence this workaround).

(7) As Shift+Validate didn’t work, I had to change the transformation rule and save it; this is the resulting output preview on the Mapper snap:

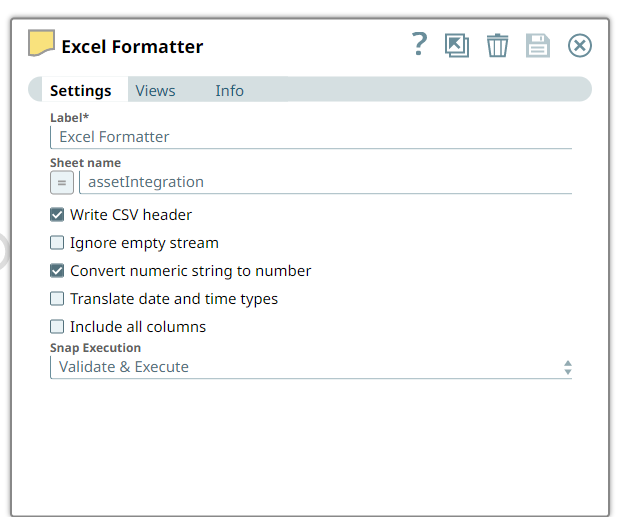

(8) Settings under excel formatter snap for reference:

(9) Settings under File Writer snap for reference:

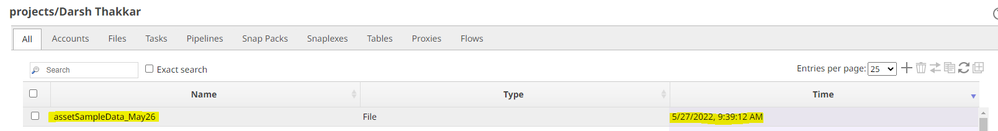

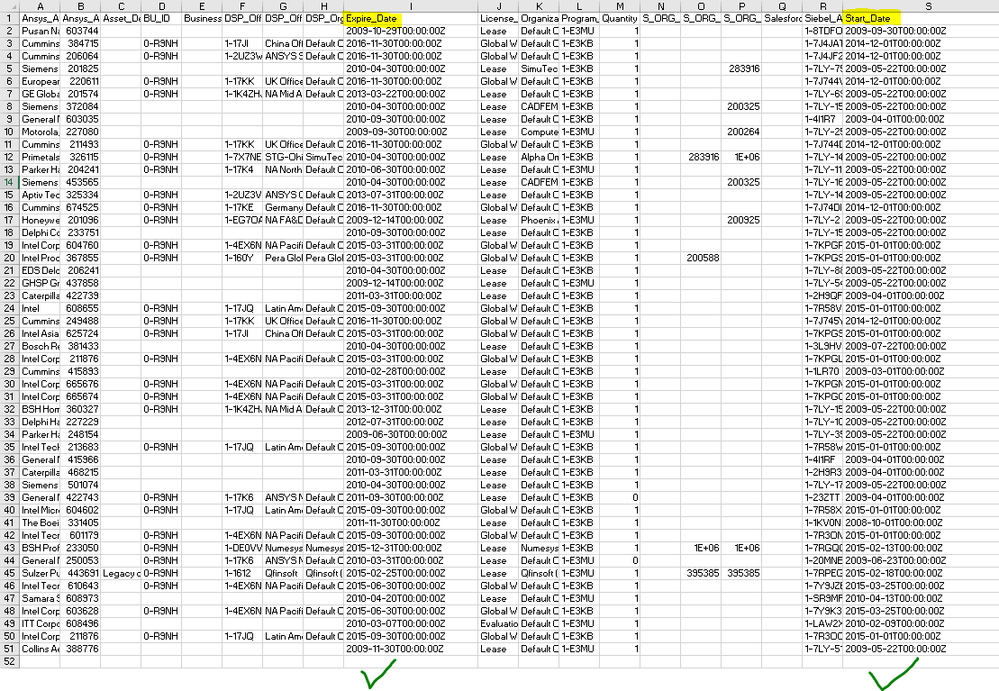

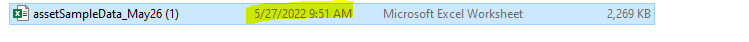

(10) File generated with default 50 records due to validate & execute on File writer snap

(11) Downloaded the file that gets generated in step 10 and below is the output (expected)

(12) Executed Pipeline

(13) Output File of Executed pipeline

I’ve seen this happen numerous times in other pipelines too, particularly with fields containing timestamps. Am I doing something wrong here? Are there any settings that need to be changed in the Mapper, Excel Formatter, or File Writer snap?

Thanks in advance for your time and suggestions on this one.

Best Regards,

DT


05-30-2022 11:42 AM

The Snowflake snaps have a setting called Handle Timestamp and Date Time Data. Unfortunately, its default setting, Default Date Time format in UTC Time Zone, has less-than-ideal behavior. With that setting, the timestamp objects are of type java.sql.Timestamp, which results in the odd inconsistencies you’re seeing between validation and execution, and it’s also not a very usable type in the SnapLogic expression language.

I suggest changing this setting to the other option, SnapLogic Date Time format in Regional Time Zone. This will convert the timestamps to the type org.joda.time.DateTime, the type that the SnapLogic EL functions are designed to deal with.

This option will also produce a DateTime with the default time zone of the plex node, which can complicate things if the node’s default timezone isn’t UTC. But based on what you’ve shown, your node’s default timezone is UTC, so this shouldn’t be a problem for you, fortunately.

After changing that setting, try using an expression like $Expire_Date.toLocaleDateTimeString({format: "yyyy-MM-dd'T'HH:mm:ss'Z'"}).

Hope that helps.


05-31-2022 09:25 AM

Did adding the $ at the beginning of your expression help?

Allow me to explain a bit more about validation vs execution. This will be generally useful in your use of SnapLogic.

When you validate a pipeline, the SnapLogic platform will cache the preview output of each snap. On the next “regular” validation, it considers which snaps have been modified since the last validation, and will reuse the cached output of all snaps up until the first snap that was modified. It does this as an optimization to avoid re-executing potentially costly or time-consuming operations like Snowflake Execute. So when you’re modifying the Mapper and a new validation occurs, it’s operating on the cached preview data from the Snowflake Execute.

Usually, that invisible use of cached data during validation is a good thing. It makes designing pipelines faster by re-running only the modified snaps and the snaps downstream from those. Usually the results are consistent whether you’re validating with cached data or executing.
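The reuse rule described above can be sketched roughly as follows. This is only an illustrative model in Python, not SnapLogic's actual implementation: the `Snap` class, the `validate` function, and the `force` flag are hypothetical names, and the cache here holds Python objects directly rather than serialized JSON.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class Snap:
    name: str
    run: Callable[[Any], Any]   # transforms the upstream output
    modified: bool = False      # edited since the last validation?


def validate(pipeline: List[Snap], cache: Dict[str, Any],
             force: bool = False) -> Tuple[Any, List[str]]:
    """Run one validation pass; return the final output and the snaps that actually ran."""
    executed: List[str] = []
    data: Any = None
    reuse = not force  # a forced pass (like Shift+Validate) ignores the cache
    for snap in pipeline:
        if reuse and not snap.modified and snap.name in cache:
            data = cache[snap.name]   # reuse the cached preview output
            continue
        reuse = False                 # from the first modified snap on, everything re-runs
        data = snap.run(data)
        cache[snap.name] = data
        snap.modified = False
        executed.append(snap.name)
    return data, executed


pipeline = [
    Snap("Snowflake Execute", lambda _: [{"id": 1}]),
    Snap("Mapper", lambda docs: [dict(d, mapped=True) for d in docs]),
    Snap("Excel Formatter", lambda docs: len(docs)),
]
cache: Dict[str, Any] = {}

_, first_run = validate(pipeline, cache)               # nothing cached yet: all snaps run
pipeline[1].modified = True                            # edit the Mapper
_, second_run = validate(pipeline, cache)              # Snowflake Execute comes from cache
_, forced_run = validate(pipeline, cache, force=True)  # everything re-runs
print(first_run, second_run, forced_run, sep="\n")
```

In this model, the second pass runs only the Mapper and the Excel Formatter, so the Mapper sees whatever the cache stored for Snowflake Execute rather than fresh query results.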

However, as you’ve witnessed, there can be subtle issues that arise from the use of cached data. This is because the caching mechanism is based on serializing the preview data to JSON format and then deserializing it when the cached data is used. JSON only has a few basic data types, which don’t include types like dates and timestamps. So if an output document uses objects of types that don’t correspond to native JSON types, SnapLogic will represent those objects using a custom JSON representation. For well-supported types like Joda DateTime, it will use a representation like this where the type key begins with “_snaptype_”:

{"_snaptype_datetime": "2022-03-14T15:24:24.974 -06:00"}

When the platform deserializes the JSON representation of the output document and encounters that object, it’s able to correctly restore a Joda DateTime object identical to the one that was serialized, so the downstream snaps will behave consistently regardless of whether cached data is used.

Unfortunately, some object types like java.sql.Timestamp are not well-supported by the serialization/deserialization of the caching mechanism, resulting in inconsistent behavior. This is the type used for timestamps when the Snowflake Execute’s “Handle…” setting is “Default Date Time Format…”. The serialized representation looks like this:

{"Timestamp": "2022-03-14T21:24:24.974+0000"}

However, the platform does not know how to deserialize this cached representation into a java.sql.Timestamp object identical to the one that was serialized. Instead, it deserializes it as a Map containing a single key/value pair, exactly as shown in the JSON. So your transformations that depended on the presence of “Timestamp=” in the toString() representation or on the .Timestamp sub-object were relying on the mis-deserialized cached preview data and would only work in that context.
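To make the round trip concrete, here is a minimal Python sketch of the same idea. It is an analogy, not the platform's code: `SqlTimestamp` is a hypothetical stand-in for java.sql.Timestamp, `encode` and `decode` are made-up helper names, and the timestamp strings use Python's ISO format rather than SnapLogic's exact format.

```python
import json
from datetime import datetime, timezone


class SqlTimestamp:
    """Hypothetical stand-in for java.sql.Timestamp (a type the cache cannot restore)."""

    def __init__(self, value: datetime):
        self.value = value


def encode(obj):
    # Well-supported type: tagged so that it can be restored on the way back.
    if isinstance(obj, datetime):
        return {"_snaptype_datetime": obj.isoformat()}
    # Poorly supported type: written as a plain one-key object with no type tag.
    if isinstance(obj, SqlTimestamp):
        return {"Timestamp": obj.value.isoformat()}
    raise TypeError(f"cannot serialize {type(obj).__name__}")


def decode(d: dict):
    # Only the tagged representation is recognized and turned back into a datetime.
    if set(d) == {"_snaptype_datetime"}:
        return datetime.fromisoformat(d["_snaptype_datetime"])
    return d  # everything else stays an ordinary map


ts = datetime(2022, 3, 14, 21, 24, 24, 974000, tzinfo=timezone.utc)
doc = {"Supported": ts, "Unsupported": SqlTimestamp(ts)}

restored = json.loads(json.dumps(doc, default=encode), object_hook=decode)
print(type(restored["Supported"]).__name__)    # datetime
print(type(restored["Unsupported"]).__name__)  # dict
```

The tagged value comes back as an equal datetime, while the untagged one comes back as a one-key map. An expression written against that map only works while the cached copy is in use.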

You can force all snaps to re-run during a validation (avoiding any cached data) by either holding Shift as you click the validate icon, or by clicking the Retry link next to “Validation completed” if that link is displayed. But it’s better to avoid the issue by configuring the snap to use the data types that are well-supported for caching.

Hope that helps.


05-31-2022 10:01 AM

This is super helpful, thanks a ton @ptaylor for sharing this information. I did try Shift+Validate, but the results were inconsistent. That was the first thing I mentioned when raising this question.

I agree it’s best to use the data types that are well supported for caching, since the results are consistent with that approach.


05-31-2022 09:53 AM

It worked, thanks a ton @ptaylor for your solution and attention to detail.

A quick question: which rule would you recommend? Both of the rules below work as expected (SnapLogic Date Time format in Regional Time Zone has been selected under the snap’s Settings).

(1) $Expire_Date.toString().replace('{Timestamp=',"").replace('.000Z',"Z")

(2) $Expire_Date.toLocaleDateTimeString({format: "yyyy-MM-dd'T'HH:mm:ss'Z'"})


05-31-2022 10:12 AM

That expression works because of the second replace. You don’t actually need the first replace because the string won’t contain Timestamp= as I explained. So this simplified expression would also work:

$Expire_Date.toString().replace('.000Z',"Z")
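As a rough illustration of how the two styles of rule differ, the Python sketch below mimics both. It assumes that the string form of the timestamp is ISO-like with millisecond precision and a trailing Z, as the `.000Z` replacement implies. The helper names `iso_millis`, `via_replace`, and `via_format` are hypothetical and are not SnapLogic functions.

```python
from datetime import datetime, timezone


def iso_millis(dt: datetime) -> str:
    """Assumed string form: ISO timestamp with milliseconds and a trailing Z."""
    return dt.strftime("%Y-%m-%dT%H:%M:%S.") + f"{dt.microsecond // 1000:03d}Z"


def via_replace(dt: datetime) -> str:
    # Mirrors toString().replace('.000Z', "Z"): strips only an all-zero millisecond part.
    return iso_millis(dt).replace(".000Z", "Z")


def via_format(dt: datetime) -> str:
    # Mirrors an explicit format pattern: always second precision, with a literal Z.
    return dt.strftime("%Y-%m-%dT%H:%M:%SZ")


whole = datetime(2022, 3, 14, 21, 24, 24, tzinfo=timezone.utc)
frac = datetime(2022, 3, 14, 21, 24, 24, 974000, tzinfo=timezone.utc)

print(via_replace(whole), via_format(whole))  # both 2022-03-14T21:24:24Z
print(via_replace(frac), via_format(frac))    # 2022-03-14T21:24:24.974Z vs 2022-03-14T21:24:24Z
```

Under these assumptions the two rules agree only when the millisecond part is zero. An explicit format does not depend on the exact string representation. Note that the literal Z in the pattern is only accurate when the value is actually in UTC.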


05-31-2022 10:42 AM

Correct!

My bad for writing multiple .replace calls. When I looked at the preview inside the Mapper snap for those fields (i.e. $Start_Date and $End_Date), it showed me “{Timestamp=” (I believe this is exactly what you explained in detail regarding caching).

@ptaylor: Would it be best practice to use $Expire_Date.toString().replace('.000Z',"Z") or $Expire_Date.toLocaleDateTimeString({format: "yyyy-MM-dd'T'HH:mm:ss'Z'"}), or is either of them fine?

I’m trying to learn the best practice so that I can become a good SnapLogic developer and share the same knowledge with others!
