- SnapLogic - Integration Nation



09-29-2023 08:00 AM

I am using the Salesforce Read snap to read data and write it to S3 in Parquet format, but I get an error because the 'picklist' data type is not compatible with Parquet. How can I handle data type problems like this?


10-04-2023 01:56 AM

Hi @Roger667

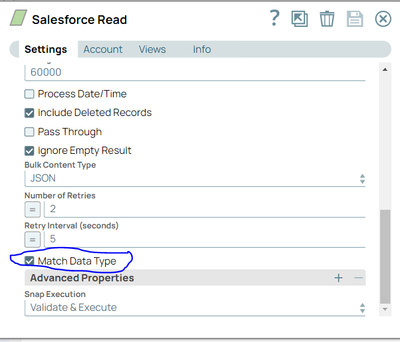

Could you please check your Salesforce Read snap and make sure the 'Match datatype' option is checked?

Thanks!


10-01-2023 06:54 AM - edited 10-01-2023 07:00 AM

Hi @Roger667,

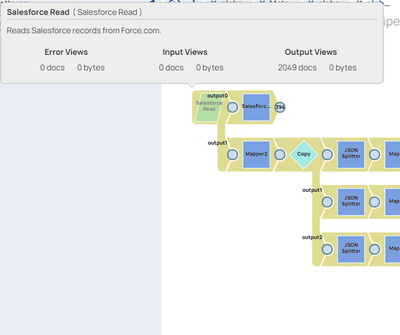

You need to enable the second output view of the Salesforce Read snap (the schema output) to get the metadata of the columns, convert the column types to data types that the Parquet Writer supports, and then pass that metadata to the second input view of the Parquet Writer (enable the second input view, the schema input).

I am attaching a sample pipeline and the file used to convert the schema.
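As a rough illustration of the conversion step, the sketch below maps Salesforce field types to Parquet primitive types and builds a Parquet message-type schema string. The input shape (a list of dicts with `name` and `type` keys), the helper name `to_parquet_schema`, and the type mapping itself are assumptions for illustration only; the actual metadata emitted by the Salesforce Read schema output, and the schema format the Parquet Writer expects on its second input view, may differ, so compare against the sample pipeline.

```python
from typing import Dict, List, Optional, Tuple

# Assumed mapping: Salesforce field type -> (Parquet physical type, logical annotation).
# This table is illustrative, not taken from SnapLogic documentation.
SF_TO_PARQUET: Dict[str, Tuple[str, Optional[str]]] = {
    "boolean": ("boolean", None),
    "int": ("int32", None),
    "double": ("double", None),
    "currency": ("double", None),
    "percent": ("double", None),
    "date": ("int32", "DATE"),
    "datetime": ("int64", "TIMESTAMP_MILLIS"),
}

# Fallback for text-like types such as string, picklist, multipicklist, id, reference.
DEFAULT_TYPE: Tuple[str, Optional[str]] = ("binary", "UTF8")


def to_parquet_schema(fields: List[Dict[str, str]], name: str = "document") -> str:
    """Hypothetical helper: build a Parquet message-type schema from field metadata."""
    lines = [f"message {name} {{"]
    for field in fields:
        physical, annotation = SF_TO_PARQUET.get(field["type"].lower(), DEFAULT_TYPE)
        suffix = f" ({annotation})" if annotation else ""
        # Mark every column optional so null Salesforce values can still be written.
        lines.append(f"  optional {physical} {field['name']}{suffix};")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    sample = [
        {"name": "Id", "type": "id"},
        {"name": "StageName", "type": "picklist"},
        {"name": "Amount", "type": "currency"},
        {"name": "CloseDate", "type": "date"},
    ]
    print(to_parquet_schema(sample))
```

The key point for the original error is the fallback: a 'picklist' value is just a string, so it can be written as a UTF-8 `binary` column.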

Thanks,

Mani Chandana Chalasani


10-02-2023 04:21 AM - edited 10-02-2023 03:36 PM

Thank you for your response. I successfully executed the SFDC_Parquet_Writer pipeline; however, the reader snap stops making progress after processing at most 2049 documents, and the pipeline keeps running indefinitely.

I tried breaking the pipeline down, and this issue only occurs when I add the Parquet Writer at the end.


10-03-2023 01:29 AM

Hi @manichandana_ch , @SpiroTaleski

Is this issue caused by the Salesforce Read snap (for example, an API-related issue) or by the Parquet Writer?


10-03-2023 01:40 AM - edited 10-03-2023 01:40 AM

Hi @Roger667

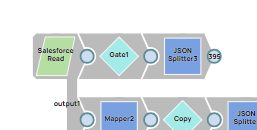

Please add a Gate snap, followed by a JSON Splitter that splits the incoming data from the output of the Gate snap. Remove the Salesforce Read mapper and connect the output of the JSON Splitter to the Parquet Writer's data input. In the JSON Splitter, set the path to `jsonPath($, "input0[*]")`.

Here is a screenshot of the changes. Please connect port 395 to the Parquet Writer's data input.
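To make the Gate + JSON Splitter step concrete: the `jsonPath($, "input0[*]")` expression implies that the Gate snap emits a single document whose `input0` key holds an array of every document it received, and the JSON Splitter turns that array back into one document per record. The Python sketch below only mimics that data shape; `gate` and `split` are hypothetical stand-ins, not SnapLogic APIs.

```python
from typing import Any, Dict, Iterable, List


def gate(documents: Iterable[Dict[str, Any]]) -> Dict[str, List[Dict[str, Any]]]:
    """Hypothetical Gate stand-in: collect every upstream document into one document."""
    return {"input0": list(documents)}


def split(gate_output: Dict[str, List[Dict[str, Any]]]) -> List[Dict[str, Any]]:
    """Hypothetical JSON Splitter stand-in for jsonPath($, "input0[*]")."""
    return list(gate_output["input0"])


if __name__ == "__main__":
    records = [{"Id": "001A"}, {"Id": "001B"}]
    batched = gate(records)
    print(batched)         # a single document holding both records under "input0"
    print(split(batched))  # back to one document per record
```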

The issue is the design of the pipeline. The Salesforce Read snap starts sending data records to the Parquet Writer's data input immediately, but it does not emit the schema on its schema output right away; it seems to process the metadata only after all the data has been read and processed. Meanwhile, the Parquet Writer cannot start writing because it is still waiting for the schema, so the data stops flowing. It is an interlock: each side waits on the other.

When a Gate snap is added to the Salesforce Read data output, it accumulates all input data before passing anything on. The Salesforce Read snap can therefore finish reading, the metadata becomes available on the schema output, and only then does the accumulated data reach the Parquet Writer, which can start writing.
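The interlock described above can be reproduced with a toy simulation. It assumes the link between the reader and the writer can hold only a limited number of records (`buffer_capacity`, a made-up parameter; the thread does not describe SnapLogic's actual buffering), that the reader emits the schema only after its last record, and that the writer consumes nothing until it has the schema. Passing `None` models the Gate snap as an unbounded buffer.

```python
from typing import Optional


def run_pipeline(num_records: int, buffer_capacity: Optional[int]) -> int:
    """Toy model of the reader/writer interlock; returns how many records get written."""
    buffered = 0          # records sitting between the reader and the writer
    next_record = 0       # records the reader has emitted so far
    schema_ready = False  # whether the schema has reached the writer
    written = 0

    while True:
        progressed = False
        # Reader: emit the next record if the link still has room.
        if next_record < num_records and (buffer_capacity is None or buffered < buffer_capacity):
            buffered += 1
            next_record += 1
            progressed = True
        # Reader: the schema is only emitted after every record has been emitted.
        elif next_record == num_records and not schema_ready:
            schema_ready = True
            progressed = True
        # Writer: consume a record only once the schema has arrived.
        if schema_ready and buffered > 0:
            buffered -= 1
            written += 1
            progressed = True
        if not progressed:
            # Either everything finished, or both sides are stuck waiting (deadlock).
            return written


if __name__ == "__main__":
    print(run_pipeline(10_000, 2048))  # 0: the link fills up before the schema is sent
    print(run_pipeline(10_000, None))  # 10000: a Gate-like unbounded buffer avoids the stall
```

The model also suggests why a small test extract can appear to work: if all records fit in the buffer, the reader finishes, the schema is emitted, and the writer proceeds.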

Thanks & Regards,

Mani Chandana Chalasani

- Salesforce Read - Where Clause Syntax in Designing and Running Pipelines

- Slicing Data from JSON in Designing and Running Pipelines

- Issue using mapValues and mapKeys functions in Mapper Snap in Designing and Running Pipelines

- Unable to write salesforce data to s3 in parquet format in Designing and Running Pipelines

- PostgreSQL - Bulk Load with schema provided in Designing and Running Pipelines