2021 Enterprise Automation Award Nomination: CBG: Delivering the pharmacy experience via data automation

11-12-2021 06:07 PM - last edited on 05-13-2024 04:25 PM by dmiller

What were the underlying reasons or business implications for the need to automate business processes? Describe the business and technical challenges the company had.

Our primary business need for data automation was to support data ingress/egress integrations for a new healthcare startup. Our startup vision is to: “build and support myriad healthcare entities through acclaimed clinical, operational and digital expertise and solutions to accelerate proven outcomes.” Our mission is: “to partner with forward-thinking entities who strive for excellence in delivering the pharmacy experience and provide a pathway to PBM ownership.” To deliver on that, we had to go live with our startup on 5/1/2020.

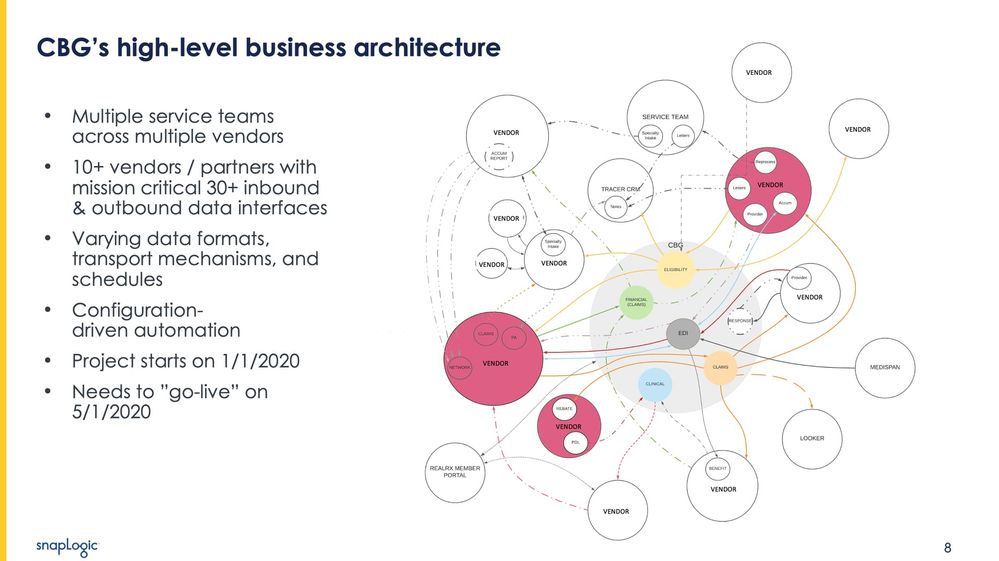

Our primary challenge was our timeline. We were not able to start working on data integration until 1/1/2020, so we had only four months until go-live. Our secondary challenges were numerous:

- Budget constraints required a very lean technologist team

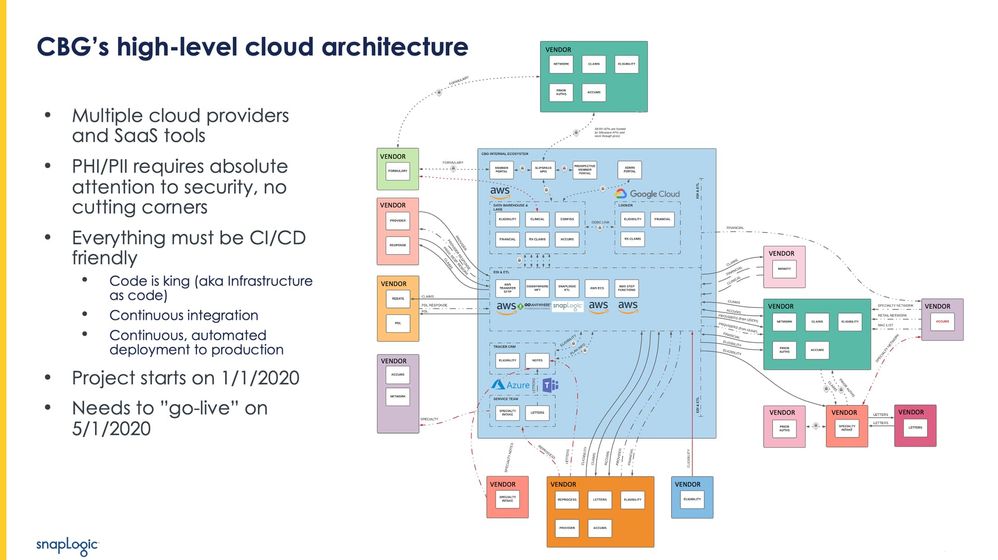

- Connect multiple cloud providers and SaaS tools

- PHI/PII requires absolute attention to security

- Everything must be CI/CD friendly

- Multiple service teams across multiple vendors to integrate

- 10+ vendors / partners with mission-critical data

- 30+ inbound & outbound data interfaces

- Varying data formats, transport mechanisms, and schedules

- Configuration-driven automation

Working through these challenges, we propelled our startup forward with automated business and systems processes across the enterprise.

Describe your automation use case(s) and business process(es).

Our automation use cases were driven by key business processes: we needed to process multiple eligibility, claim, response, and related pharmaceutical files daily, and to scale quickly to exponentially larger volumes as new clients came on. These processes required integrating multiple service teams across multiple vendors, 10+ vendors / partners with mission-critical data, and 30+ inbound & outbound data interfaces. We also needed to accept varying data formats, transport mechanisms, and schedules.

Describe how you implemented your automations and processes. Include details such as what SnapLogic features and Snaps were used to build out the automations and processes.

We didn’t have a lot of experience using SnapLogic, but we did understand data and how data integration works. To implement our solution against our very aggressive timeline, we learned SnapLogic, designed our data architecture, and gathered business requirements in parallel. We built a configuration-driven architecture to support our flexible approach to low-code/no-code delivery. We relied heavily on a parent-child pipeline relationship to minimize the number of tasks that had to be scheduled through the SnapLogic interface. We built all our data integrations in AWS and SnapLogic and accomplished 95% of our goals using the core SnapPacks provided by SnapLogic. We also used the AWS SnapPacks to easily integrate with AWS S3, SQS, Aurora, and other services.
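For readers unfamiliar with the pattern, here is a minimal sketch of the configuration-driven, parent-child idea described above, written in plain Python rather than as SnapLogic pipelines. It is not our actual implementation and does not use any SnapLogic API. The `InterfaceConfig` fields, the interface names, and the child functions are all hypothetical and exist only to show how a single scheduled parent can fan out to children based on configuration rows.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class InterfaceConfig:
    """One hypothetical configuration row describing a data interface."""
    name: str         # made-up interface name, e.g. "eligibility_inbound"
    direction: str    # "inbound" or "outbound"
    data_format: str  # e.g. "csv", "json", "fixed_width"
    transport: str    # e.g. "sftp", "s3", "api"


# A "child" is any callable that processes exactly one interface.
Child = Callable[[InterfaceConfig], str]


def csv_child(cfg: InterfaceConfig) -> str:
    # Placeholder for real CSV parsing and loading.
    return f"{cfg.name}: csv {cfg.direction} via {cfg.transport}"


def json_child(cfg: InterfaceConfig) -> str:
    # Placeholder for real JSON parsing and loading.
    return f"{cfg.name}: json {cfg.direction} via {cfg.transport}"


def run_parent(configs: List[InterfaceConfig],
               children: Dict[str, Child]) -> List[str]:
    """Parent: the only scheduled entry point.

    It loops over configuration rows and hands each one to the child
    registered for that row's data format.
    """
    results = []
    for cfg in configs:
        child = children.get(cfg.data_format)
        if child is None:
            results.append(f"{cfg.name}: skipped, no child for {cfg.data_format}")
            continue
        results.append(child(cfg))
    return results


# Hypothetical configuration; in practice this could live in a table or file.
CONFIGS = [
    InterfaceConfig("eligibility_inbound", "inbound", "csv", "sftp"),
    InterfaceConfig("claims_outbound", "outbound", "json", "s3"),
    InterfaceConfig("legacy_feed", "inbound", "fixed_width", "sftp"),
]

CHILDREN: Dict[str, Child] = {"csv": csv_child, "json": json_child}

if __name__ == "__main__":
    for line in run_parent(CONFIGS, CHILDREN):
        print(line)
```

The point of the design is that onboarding a new interface means adding a configuration row, not scheduling another task: only the parent is ever scheduled.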

Our implementation let us carefully build ETL/ELT capabilities and resulted in our entire solution being Infrastructure as Code. It also meant fewer lines of code to deliver business requirements quickly, the ability to securely connect to anything and everything via simple API mechanisms, and a NoOps model on the Cloudplex, since we did not have to manage any hardware or install software locally.

The flexibility of our current solution leaves room for future solutions to include advanced data features (AI, ML, big data), hyperautomation, and low/no-code citizen development.

What were the business results after executing the strategy? Include any measurable metrics (i.e., productivity improvement, speed improvement, % reduced manual inefficiencies, etc.)

Most importantly, we were able to open our doors to our client as planned on 5/1/2020. In addition, we ended up with some stunning metrics that highlight the volume, resiliency, and flexibility of the data integrations we built:

- 90+ pipelines in production and 30+ integrations with partners & vendors

- 99% successful pipeline executions for ETL/ELT, APIs, and Bots

- More than 2 TB of data & ~575K objects have flowed through SnapLogic in production

- 99.99% Cloudplex platform uptime for core data pipelines & services

- 1000+ ETL, API, and Bot pipeline executions per day with ~3-second round trips

- All pipelines ready by 4/15/2020 with 2 weeks for additional testing

- Data & integration engineer cycle times are ~2 days on average

CBG is a testament to what a small but mighty team can do when they leverage the power of low-code and automation.

Who was involved in building out the solution, and how? (Please include the # of FTEs, any partners or SnapLogic professional services who were involved on the implementation)

We had four key people involved in building out our solution. Bethe Price (FTE) was our COO and primary business analyst for refining requirements. Davis Hansen (FTE) was our single data engineer, who architected and built the data integration solution. Roger Sramkoski (SnapLogic) was our key support contact at SnapLogic, who helped us through onboarding, training, and implementation of the SnapLogic platform & technologies. Last, our cloud architect helped us work through architecture complexities.
