- SnapLogic - Integration Nation

Q and A with Kalyan Venkat: Timely Metrics from Varied Applications While Meeting Audit Requirements

10-25-2022 05:28 PM (last edited a month ago by Scott)

What were the underlying reasons behind your need for data?

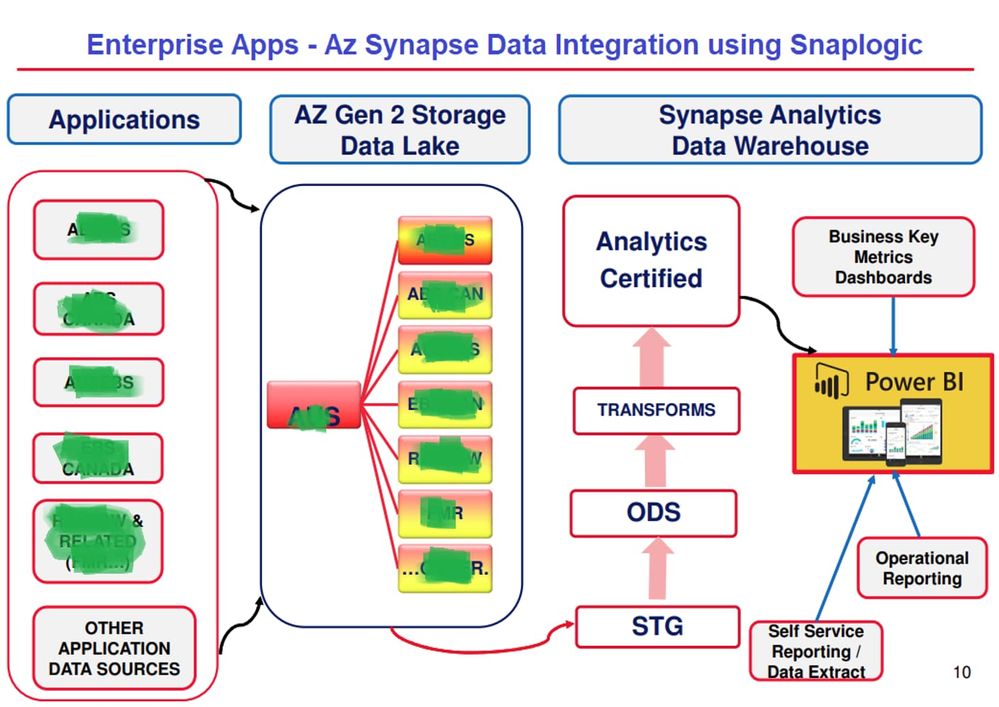

We needed accurate and timely operational and strategic key business metrics from varied applications. Getting them had been challenging because of multiple ETL tools, data refresh schedules, and data integration points.

Describe your data strategy and how you executed the strategy.

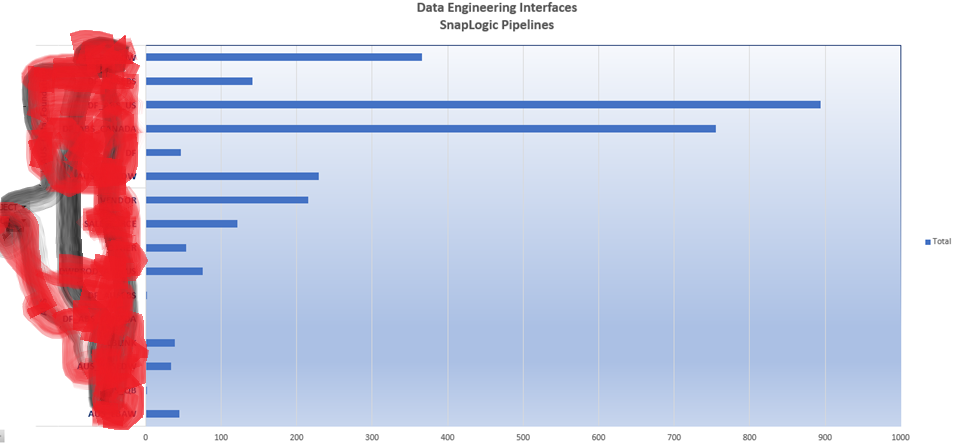

The design focuses on building patterns, so that 80% of data movement between source (application) objects and target objects in Azure Cloud Datalake is handled by executing patterns in SnapLogic. The pattern approach eliminates much of the development and testing effort otherwise required for new and updated data from source systems. Using patterns for data movement also enforces a well-thought-out design in which data movement is triggered only for pattern-certified objects. The data fetch process within a pattern is designed to be codeless: like-to-like attributes between source and target are mapped dynamically in the pipeline. This eliminates the need to write and maintain code for querying data from the source and updating the target system.

For example, one of our enterprise applications has 350+ tables that must be kept up to date in Azure Cloud Datalake. Instead of developing 350+ one-to-one Snap Pipelines, we used a pattern framework, which significantly expedited development and the delivery schedule: in total, just 45 pipelines handle both delta and full-load data refreshes to Azure Cloud Datalake. By comparison, our legacy ETL solution required a one-to-one workflow for each source and target table refresh.
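To make the pattern idea concrete, here is a minimal Python sketch of a metadata-driven pattern, written outside SnapLogic. All names (`TableSpec`, `map_like_to_like`, `select_rows`, `run_pattern`) are hypothetical and are not SnapLogic APIs, and detecting delta rows with a timestamp watermark column is an assumption for illustration. One generic routine serves every certified table listed in a config: it picks a full or delta load and then maps like-to-like attributes by column name.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Any, Dict, Iterable, List, Optional

Row = Dict[str, Any]


@dataclass
class TableSpec:
    """Hypothetical config entry for one pattern-certified source/target pair."""
    source_table: str
    target_table: str
    load_mode: str                          # "full" or "delta"
    watermark_column: Optional[str] = None  # assumed change-tracking column for delta loads


def map_like_to_like(row: Row, target_columns: Iterable[str]) -> Row:
    """Keep only source attributes whose names match a target column (case-insensitive)."""
    lookup = {name.lower(): name for name in target_columns}
    return {lookup[key.lower()]: value for key, value in row.items() if key.lower() in lookup}


def select_rows(spec: TableSpec, rows: List[Row], last_watermark: Optional[datetime]) -> List[Row]:
    """Return all rows for a full load, or only rows changed since the watermark for a delta load."""
    if spec.load_mode not in ("full", "delta"):
        raise ValueError(f"unknown load mode {spec.load_mode!r} for {spec.source_table}")
    if spec.load_mode == "delta" and spec.watermark_column is None:
        raise ValueError(f"delta load for {spec.source_table} needs a watermark column")
    if spec.load_mode == "full" or last_watermark is None:
        return list(rows)  # full load, or the first delta run with no stored watermark yet
    return [row for row in rows if row[spec.watermark_column] > last_watermark]


def run_pattern(spec: TableSpec, source_rows: List[Row], target_columns: List[str],
                last_watermark: Optional[datetime] = None) -> List[Row]:
    """One generic routine for every certified table: no per-table query or mapping code."""
    selected = select_rows(spec, source_rows, last_watermark)
    return [map_like_to_like(row, target_columns) for row in selected]


# Usage: a delta refresh of a hypothetical ORDERS table.
orders = TableSpec("ORDERS", "orders", "delta", watermark_column="UPDATED_AT")
source = [
    {"ORDER_ID": 1, "AMOUNT": 10.0, "UPDATED_AT": datetime(2022, 10, 1), "INTERNAL_FLAG": "x"},
    {"ORDER_ID": 2, "AMOUNT": 25.5, "UPDATED_AT": datetime(2022, 10, 20), "INTERNAL_FLAG": "y"},
]
loaded = run_pattern(orders, source, ["order_id", "amount", "updated_at"],
                     last_watermark=datetime(2022, 10, 15))
print(loaded)
# [{'order_id': 2, 'amount': 25.5, 'updated_at': datetime.datetime(2022, 10, 20, 0, 0)}]
```

In this sketch, bringing another table under the pattern means adding a `TableSpec` entry rather than building and testing another pipeline, and source attributes with no matching target column (here `INTERNAL_FLAG`) are simply dropped.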

Who was involved in building out the solution, and how?

We used two offshore Snap development resources from SnapLogic and one internal FTE to deliver the Azure big data solution.

What were the business results after achieving this data strategy?

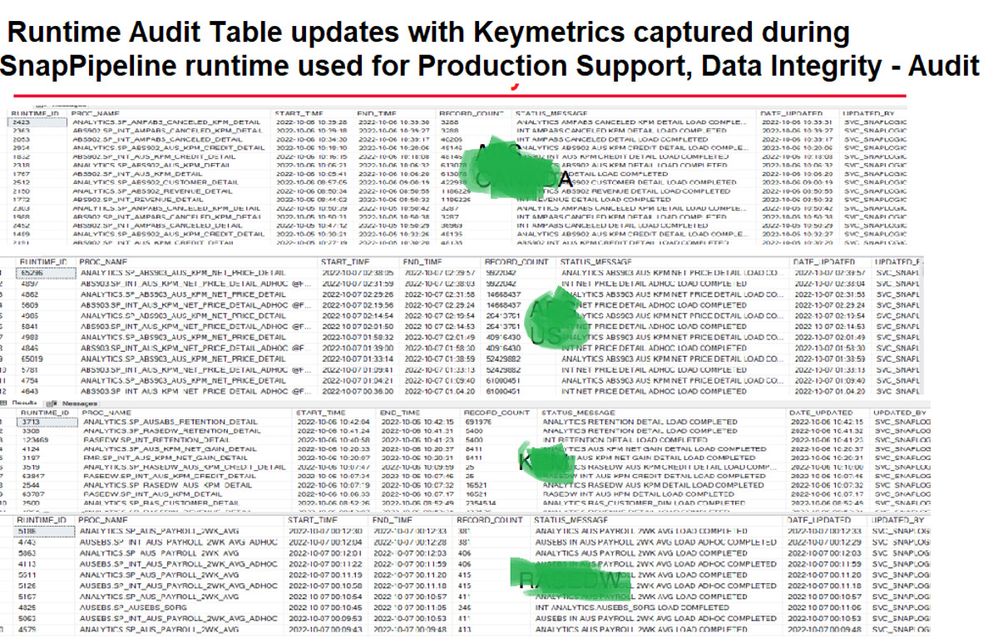

Using SnapLogic truly expedited how we design, build, and deliver data solutions, and we met business needs on time. We can integrate data from 13+ systems (enterprise apps, cloud apps, third-party SaaS data, and the list goes on). Most importantly, key data metrics are captured during pipeline execution, data orchestration, data ingestion, and post-process certification. This is a key requirement from Internal Audit to ensure that data integrity is not compromised.
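The audit requirement can be illustrated with a minimal Python sketch that records counts at each of the four stages named above and certifies a run by reconciling rows read against rows landed in the target plus rows rejected. The `RunAudit` class, the stage keys, the metric names, and the reconciliation rule are all hypothetical assumptions, not the metrics SnapLogic or this team actually captures.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Tuple

# The four points at which the answer says key metrics are captured.
STAGES = ("pipeline_execution", "data_orchestration", "data_ingestion", "post_process_certification")


@dataclass
class RunAudit:
    """Hypothetical audit record for a single pipeline run."""
    pipeline: str
    metrics: Dict[str, Dict[str, int]] = field(default_factory=dict)
    trail: List[Tuple[str, str, Dict[str, int]]] = field(default_factory=list)

    def record(self, stage: str, **counts: int) -> None:
        """Store counts for a stage and append a timestamped entry to the audit trail."""
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        self.metrics.setdefault(stage, {}).update(counts)
        self.trail.append((datetime.now(timezone.utc).isoformat(), stage, dict(counts)))

    def certify(self, target_row_count: int) -> bool:
        """Assumed rule: every row read must be either landed in the target or explicitly rejected."""
        ingestion = self.metrics.get("data_ingestion", {})
        passed = ingestion.get("rows_read", 0) == target_row_count + ingestion.get("rows_rejected", 0)
        self.record("post_process_certification", target_rows=target_row_count, passed=int(passed))
        return passed


audit = RunAudit("orders_delta")
audit.record("pipeline_execution", pipelines_started=1)
audit.record("data_orchestration", tables_scheduled=1)
audit.record("data_ingestion", rows_read=1000, rows_rejected=3)
print(audit.certify(target_row_count=997))  # True: 997 landed + 3 rejected = 1000 read
print(audit.certify(target_row_count=990))  # False: 7 rows unaccounted for
```

Keeping every stage's counts and the certification result in one timestamped trail is what lets an auditor check, after the fact, that no rows were silently lost between source and target.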

Anything else you would like to add?

So far we have deployed more than 3,000 Snap Pipelines in production, and we allocate at most half an hour per week to review any exceptions during pipeline processing in production. This clearly demonstrates SnapLogic's strength as a product for moving large volumes of data between source and target, and applying a well-thought-out design up front greatly helps in building robust and flexible Snap Pipelines.