- SnapLogic - Integration Nation

- Designing and Running Pipelines

- Google Analytics Query Explorer Error

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Google Analytics Query Explorer Error

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-25-2021 12:57 PM

Hello,

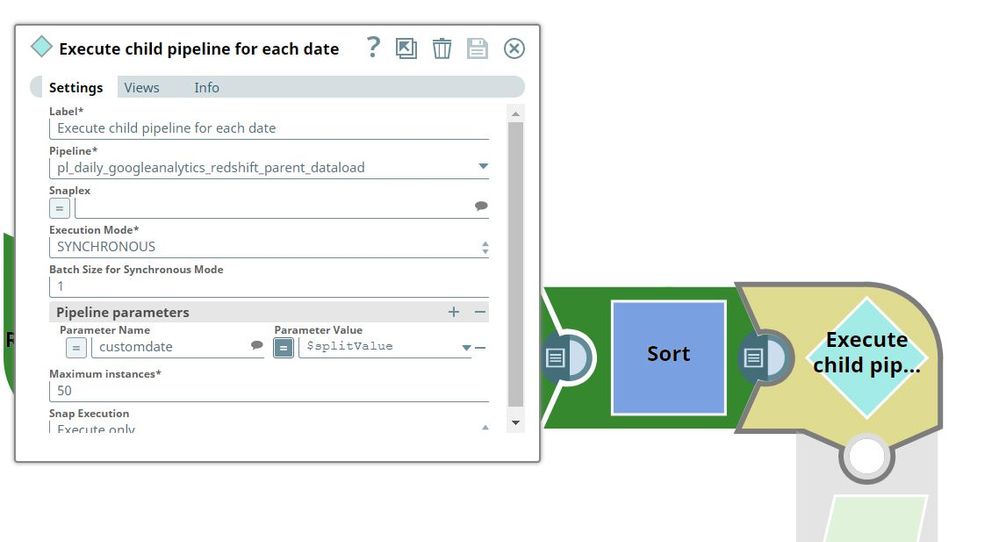

I have a “for each” snap that calls a pipeline to connect to Google Analytics query explorer and extracts the data for 20 different websites. The problem that I encounter is that when I execute the “for each” for multiple interactions, I get the following:

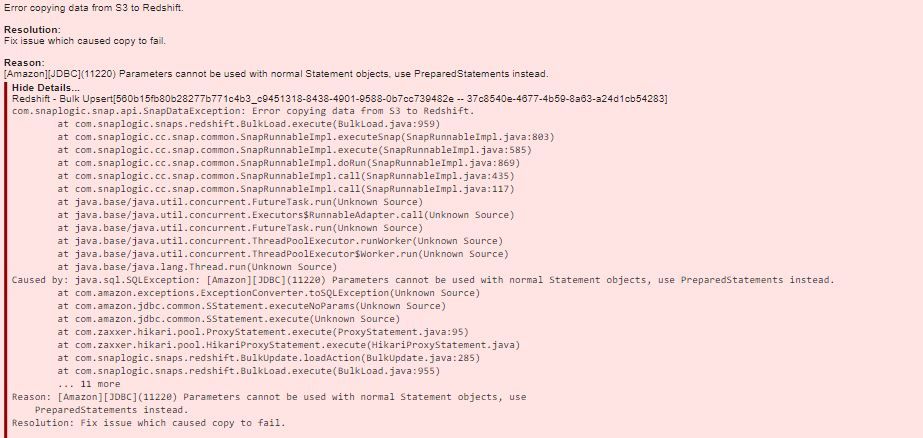

Error copying data from S3 to Redshift.

Resolution:

Fix issue which caused copy to fail.

Reason:

[Amazon]JDBC Parameters cannot be used with normal Statement objects, use PreparedStatements instead.

The error is always at a random website connection and it always happens when I execute the “for each” for more than 1 day.

Each “for each” interaction is doing 20 different connections per day for x amount of dates.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-25-2021 04:00 PM

It looks like this error can be raised by either the Redshift BulkLoad or S3Upsert snap. It seems the generated SQL has a placeholder or something that is causing it to be interpreted as a parameter in a Statement object. These snaps use both PreparedStatements and Statement objects.

A stack trace would be super helpful to investigate this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-26-2021 08:04 AM

I’m not sure if this is the stack trace that you are looking for. If not, can you lead me to where I can get it?

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-26-2021 11:38 AM

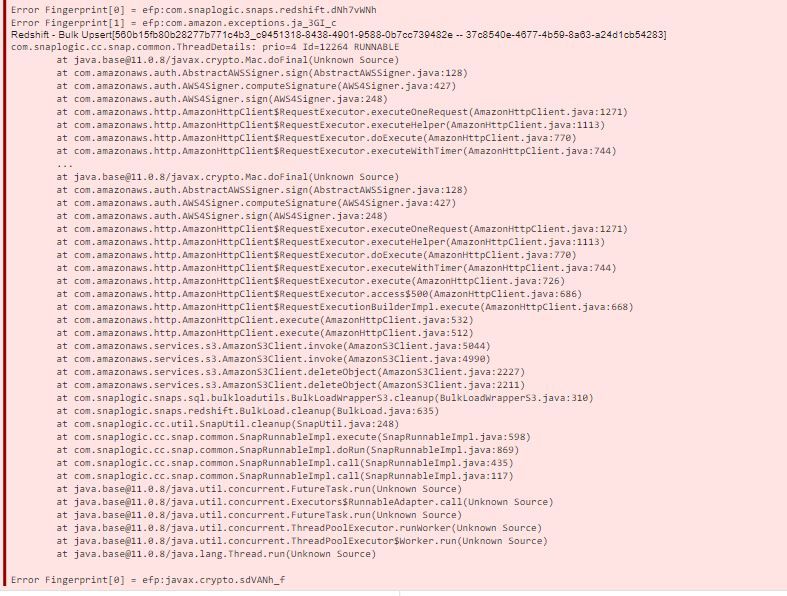

Ok, it looks like exception is getting raised from the BulkUpdate snap (which inherits from BulkLoad). It doesn’t like the INSERT SQL. A question mark (?) in the SQL will be interpreted as a SQL parameter placeholder even in a comment it seems ( [Amazon][JDBC](11220) Parameters cannot be used with normal Statement objects, use PreparedStatement... ).

This SQL is actually logged and that would tell us exactly what’s triggering this. The SQL is logged as a DEBUG message of this form:

"Running insert from temp table to target table : <INSERT-SQL>"

To get this log, we need to download the JCC logs. Unfortunately, you aren’t able to do this, however, our snap support engineers can. The logs do rollover though, but if you logged a support ticket shortly after this exception occurs, the log message would likely still be available.

If you don’t want to do this, then think about if there’s a question mark (?) somewhere that’s getting included in the INSERT SQL which is tripping up the interpretation. Obviously, you don’t control this directly, but maybe some hints with the source/attributes … I’m grasping a bit… However, the logged SQL would tell us exactly why.

- SnapLogic November 2023 Release is HERE! in Release Notes and Announcements

- SnapLogic Integration Platform November 2023 Release is now in UAT in Release Notes and Announcements

- Launched: Google Analytics 4 Snap Pack in Release Notes and Announcements

- Google Analytics snap in Designing and Running Pipelines