Task Execute Timing out

01-08-2020 09:45 AM

Hi,

We are using the Cloudplex version of SnapLogic. We have built a pipeline that downloads a very large weather data set. We had to build the pipeline to execute in “batches” using the Task Execute snap because the 100 million+ row download fills up the temp file space if we do it all in one go…

I am getting timeouts after 15 minutes and would like to either raise that timeout parameter or make it so that the parent pipeline doesn’t think the task pipeline has timed out. I was told that having an “open view” in the task pipeline would keep the parent from thinking a timeout has happened, but this isn’t working.

Any ideas? Thanks

01-10-2020 06:50 AM

So, you’re getting a bunch of locations, iterating through each location pulling the weather data, and then doing something with that weather data. Correct? That flow should be achievable by putting the operation to get the locations into the parent pipeline and then feeding the locations into a PipeExec with Reuse enabled and a Pool Size that is greater than one (maybe 10?). The PipeExec will then distribute the locations to the child pipelines which pull the weather data and finish processing it. You shouldn’t need to use GroupByN to do any batching with that configuration. You can play with the pool size to control how many child executions are running in parallel depending on resource usage and maybe set the Snaplex property to distribute some child executions to other nodes in the Snaplex.
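As a rough analogy for the pooled setup described above (not SnapLogic code), here is a minimal Python sketch of a parent feeding locations to a fixed-size pool of workers. The function `pull_weather`, the location names, and the pool size of 10 are all placeholders I made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

POOL_SIZE = 10  # assumed value, mirroring the "maybe 10?" suggestion


def pull_weather(location: str) -> dict:
    """Stand-in for the child pipeline: fetch and process one location."""
    # Hypothetical placeholder; a real child would pull the weather data here.
    return {"location": location, "rows": 0}


def run_parent(locations: list[str], pool_size: int = POOL_SIZE) -> list[dict]:
    """Hand locations to at most `pool_size` concurrent workers."""
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        # map() keeps input order and never runs more than pool_size at once.
        return list(pool.map(pull_weather, locations))


if __name__ == "__main__":
    results = run_parent([f"loc-{i}" for i in range(25)])
    print(len(results), "locations processed")
```

The point of the sketch is only that the pool size, not the number of input locations, caps how much work runs at the same time.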

> If I send all 7k locations at once, the pull overwhelms node

Can you elaborate on what you mean by “overwhelms” here? What happens exactly?

01-10-2020 07:13 AM

Hi,

Thanks for the insights. Yes, we tried the PipeExec with pool. The problem is that the pool redlines the node, and doesn’t distribute dynamically over the 2 nodes. We have basically figured out how to “hard force” resource loading.

We group the location data into 3 groups. We set up a pipeline parameter and 3 tasks, each of which invokes the master pipeline with that parameter. We learned that SnapLogic takes a while to update the Open Threads count, which is used for resource loading. By starting our 3 tasks 5 minutes apart (we were able to get successful resource loading with 3 minutes, but chose 5 to be safe), we observe the system properly utilizing the nodes. When we used 2 minutes or less, the 3 tasks were all assigned to the same node.
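The grouping and staggering can be sketched in Python roughly as follows. This is only an illustration: the thread does not say how the locations were divided, so the round-robin split is an assumption, and the function names and start time are hypothetical.

```python
from datetime import datetime, timedelta


def split_into_groups(locations: list[str], n_groups: int = 3) -> list[list[str]]:
    """Deal locations round-robin into n_groups lists of near-equal size."""
    # Assumed splitting rule; any partition into 3 groups would do.
    return [locations[i::n_groups] for i in range(n_groups)]


def staggered_starts(first_start: datetime, n_tasks: int = 3,
                     gap_minutes: int = 5) -> list[datetime]:
    """Return one start time per task, each gap_minutes after the previous."""
    return [first_start + timedelta(minutes=gap_minutes * i) for i in range(n_tasks)]


if __name__ == "__main__":
    groups = split_into_groups([f"loc-{i}" for i in range(7000)])
    starts = staggered_starts(datetime(2020, 1, 10, 6, 0))
    for group, start in zip(groups, starts):
        print(start.time(), len(group), "locations")
```

Each group would be passed to one task through the pipeline parameter, and the gap gives the node metrics time to reflect the previous task before the next one is placed.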

In terms of “overwhelms”, there were several different tests:

- All 7k locations at once. The system chugged along with mem/CPU at 95%, which caused other jobs to fail. Then, after about 250 million records had loaded, we got an out-of-local-storage crash.

- When we used Pipeline Execute, all the pipelines spawned and again pegged mem/CPU at 95% (at least that is what we saw on the monitors). We also noticed degraded performance (it seems to slow down) with very large pull requests 1-2 hours in.

Our new approach is working. I hope that SnapLogic can update how fast the Open Threads variable is updated; this would improve resource load balancing for Cloudplex users!

Thanks for the time, Tim. I much appreciate your looking at this.

01-10-2020 08:40 AM

It would be good to point out to support the particular execution where this occurred so that we can take a closer look.

Can you point out the executions that failed or slowed down in your support case as well? The crash especially is very concerning and should be addressed.

The thread count for the nodes is updated frequently (a few times a minute). The CPU usage is smoothed out over a minute or two so short spikes are reduced. The problem is that it is hard to predict the resource impact of a pipeline or what new pipelines may be executed in the near future. There is also communication overhead when distributing child executions to neighboring nodes. Many times, a child pipeline will be executed that takes very few resources and runs very quickly. So, we don’t want to run that on a neighbor since the cost of communication negates any benefits from trying to distribute the load. Other times, like in your case, a pipeline with only a few snaps may have a large resource impact on the node and it would be good to distribute. It’s a challenging balancing act that we’re still working on. But, first, we need to make sure executions are not failing due to overload since there is no guarantee the resource scheduler will always be able to distribute executions.
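To make the smoothing trade-off concrete, here is a small Python sketch using an exponentially weighted moving average. This is an assumption chosen for illustration: the post above does not say which smoothing method the scheduler actually uses, and `alpha` and the sample values are made up.

```python
def smooth(samples: list[float], alpha: float = 0.2) -> list[float]:
    """Exponentially weighted moving average of CPU samples (percent)."""
    if not samples:
        return []
    smoothed = []
    current = samples[0]  # seed the average with the first reading
    for sample in samples:
        # Blend the new reading into the running average.
        current = alpha * sample + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


if __name__ == "__main__":
    # A node idling at 10% that suddenly jumps to a sustained 95%.
    raw = [10.0] * 3 + [95.0] * 6
    for r, s in zip(raw, smooth(raw)):
        print(f"raw={r:5.1f}  smoothed={s:5.1f}")
```

Smoothing of this kind hides short spikes, but it also means a node that has just picked up a heavy pipeline still looks lightly loaded for several samples.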

01-10-2020 08:54 AM

It would be nice if we could specify what kind of load balancing (least used, round robin, etc.) we wanted in the Pipeline Execute snap, triggered task definitions, etc. I think it would also be great to have a way to define and then specify (preferred/required) logical groups of nodes within a Snaplex to execute on, which could be dynamically specified at runtime.
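For readers unfamiliar with the two strategies named above, here is a minimal Python sketch of each. It only illustrates the general ideas; the node names and thread counts are hypothetical, and nothing here is an existing SnapLogic option.

```python
from itertools import cycle
from typing import Iterator


def round_robin(nodes: list[str]) -> Iterator[str]:
    """Yield nodes in a fixed rotation, ignoring their current load."""
    return cycle(nodes)


def least_used(open_threads: dict[str, int]) -> str:
    """Pick the node with the fewest open threads; ties go to the first listed."""
    return min(open_threads, key=open_threads.get)


if __name__ == "__main__":
    rotation = round_robin(["node-a", "node-b"])
    print([next(rotation) for _ in range(4)])
    print(least_used({"node-a": 12, "node-b": 3}))
```

Note that `least_used` is only as good as its input: if the counts it receives have not caught up with recent placements, repeated calls keep returning the same node, which resembles the behavior reported earlier in this thread.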

01-12-2020 09:18 AM

Hi Chris,

I agree.

Tim - our testing shows that the

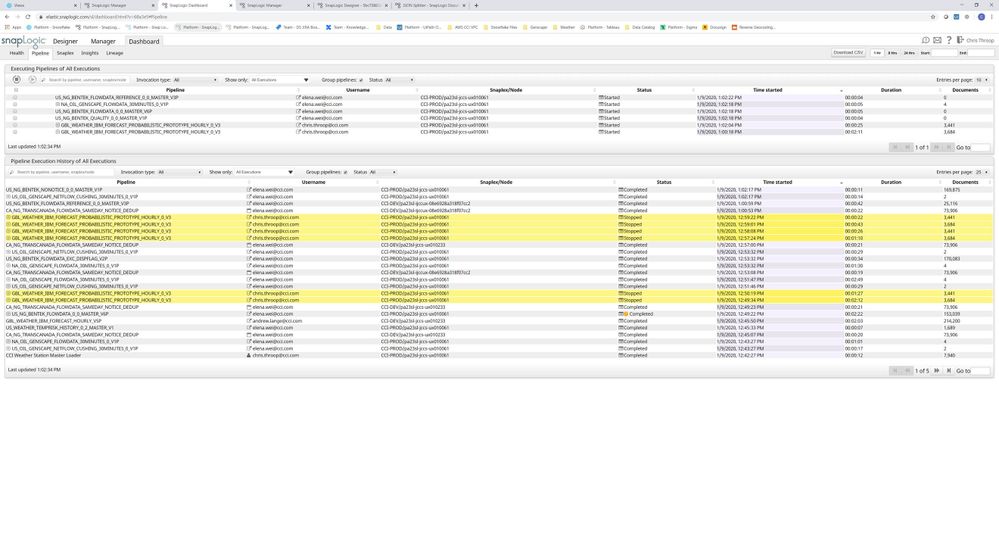

Once we get this project live, I will circle back and redo the testing so I can send you some screenshots and runtime data on the crashes. I did send the screenshot to support. It shows that even though we have 2 nodes, one will have zero pipelines: all the pipelines are being allocated to the same node over a 2-minute period.
