09-03-2022 09:36 AM

I need to load tables into a Postgres database. I receive the data as CSV files, one per table. Some of the tables are master data tables and the others are transaction data tables, which have foreign key relationships with the master data tables. So when loading the data, I need to make sure that the master data tables are updated before the transaction data tables. However, I would like to use parallel processing (with child pipelines) while updating the master data tables, since there are multiple master data tables that have no dependencies among themselves. Once all the master data tables are updated, I would like to start another parallel flow to update the transaction data tables.

Please let me know the ways to achieve this.


09-04-2022 03:13 PM

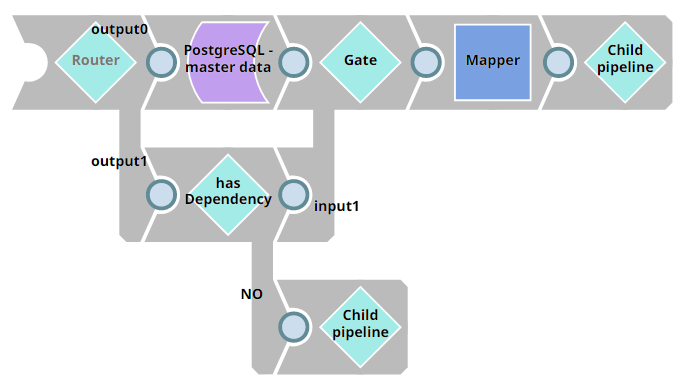

Hi @aprabhav,

There are a couple of ways to achieve this. I’m assuming that the child pipeline has separate logic for transaction data and that you know which tables have dependencies. The fastest solution I can think of is the following:

The Router separates the master data from the transaction data. The Gate Snap does not finish executing until all upstream Snaps have completed, so the transaction branch starts only after every master data load is done. After that, the Mapper picks out only the transaction data ($input1[0]), which was separated earlier in the Router.
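If it helps to see the idea outside of SnapLogic, here is a rough Python sketch of the same two-phase flow: run all master loads in parallel, wait for every one of them to finish (the role the Gate plays above), then run the transaction loads in parallel. This is only an analogy, not SnapLogic code; `load_in_phases`, `fake_load`, and the CSV file names are hypothetical names made up for the illustration, and `fake_load` just stands in for whatever a child pipeline would do.

```python
from concurrent.futures import ThreadPoolExecutor
import threading


def load_in_phases(master_files, transaction_files, load_table, max_workers=4):
    """Load all master files in parallel, then all transaction files in parallel.

    `load_table` is a caller-supplied function that loads one CSV file
    (hypothetical stand-in for a child pipeline).
    """
    results = []
    for phase in (master_files, transaction_files):
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            # Consuming pool.map() waits for every load in this phase and
            # re-raises the first error, so the next phase never starts early.
            results.extend(pool.map(load_table, phase))
    return results


# Hypothetical loader used only to observe the ordering of the two phases.
completed = []
_lock = threading.Lock()


def fake_load(csv_name):
    with _lock:
        completed.append(csv_name)
    return csv_name
```

Calling `load_in_phases(["customers.csv", "products.csv"], ["orders.csv", "payments.csv"], fake_load)` would load the two master files in any order relative to each other, but both before either transaction file. If a master load raises an exception, the transaction phase is not started at all.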

Thanks,

Pero M.

09-04-2022 07:10 PM

Thanks a lot, Pero. This helped and it worked. Thanks again!!!