How to copy the first row record in all the rows?

12-29-2023 03:14 AM

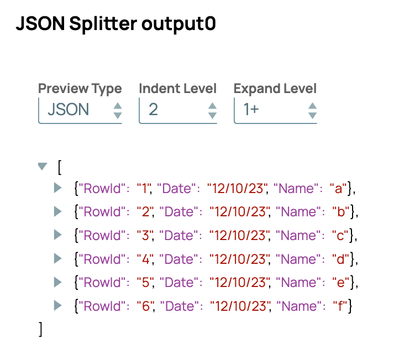

I have a file -

| RowId | Date | Name |
| --- | --- | --- |
| 1 | | a |
| 2 | 12/10/23 | b |
| 3 | | c |
| 4 | | d |
| 5 | | e |
| 6 | | f |

and I want it to look like this. I want to copy the Date value from the second row into all the rows:

| RowId | Date | Name |
| --- | --- | --- |
| 1 | 12/10/23 | a |
| 2 | 12/10/23 | b |
| 3 | 12/10/23 | c |
| 4 | 12/10/23 | d |
| 5 | 12/10/23 | e |
| 6 | 12/10/23 | f |

12-30-2023 07:16 AM - edited 12-30-2023 07:17 AM

Hello,

There are a few ways to achieve this. With each row as its own object, you can merge the Date into it:

$.merge({Date: '12/10/23'})
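As a rough illustration outside SnapLogic, the same per-record merge can be sketched in Python. This assumes the merge overwrites any existing `Date` field with the supplied value; `merge_date` and the sample rows are hypothetical names used only for this example.

```python
from typing import Dict, List

Row = Dict[str, str]

def merge_date(row: Row, date: str = "12/10/23") -> Row:
    """Return a copy of the row with Date set to the given constant."""
    # Later keys win in a dict merge, so an existing Date is overwritten.
    return {**row, "Date": date}

# Hypothetical sample rows shaped like the file in the question.
rows: List[Row] = [
    {"RowId": "1", "Date": "", "Name": "a"},
    {"RowId": "2", "Date": "12/10/23", "Name": "b"},
]
merged = [merge_date(row) for row in rows]
```

The date is hard-coded here, so this only fits when the value is known before the pipeline runs.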

Or

With the rows grouped into an array, you can map the second row's Date into every row:
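A minimal Python sketch of the grouped-array idea, assuming the Date always sits in the second row as in the sample file. `fill_date_from_second_row` is a hypothetical helper, not a SnapLogic function.

```python
from typing import Dict, List

Row = Dict[str, str]

def fill_date_from_second_row(rows: List[Row]) -> List[Row]:
    """Copy the Date of the second row (index 1) into every row."""
    if len(rows) < 2:
        raise ValueError("need at least two rows to read the second one")
    date = rows[1]["Date"]
    # Build new records so the grouped input is left unchanged.
    return [{**row, "Date": date} for row in rows]

# Hypothetical sample: the whole input is held in one list.
grouped: List[Row] = [
    {"RowId": "1", "Date": "", "Name": "a"},
    {"RowId": "2", "Date": "12/10/23", "Name": "b"},
    {"RowId": "3", "Date": "", "Name": "c"},
]
filled = fill_date_from_second_row(grouped)
```

Note that the whole input has to be in the list before the second row can be read, so memory use grows with the file size.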

Hope it helps!

01-04-2024 05:04 AM

@salonimaggo - the solution provided by @nativeBasic functionally works, but I am concerned about memory consumption with large files, since grouping the entire input into an array forces the whole input set into memory. Attached is a pipeline that should meet the same requirement while keeping the data streaming, or cached to disk when the Sort has to handle larger data sets. This is a safer approach in a shared environment.
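To make the memory point concrete, here is a hedged Python sketch of a streaming alternative. It is not the attached pipeline: it swaps the Copy, Aggregate and Join arrangement for a simple two-pass read, and `find_date`, `fill_date_streaming` and `open_rows` are hypothetical names. The assumption is that the input can be opened twice, for example a file on disk.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Row = Dict[str, str]

def find_date(rows: Iterable[Row]) -> str:
    """Scan every row once, remembering only the first non-empty Date."""
    found = ""
    for row in rows:
        if not found and row.get("Date"):
            found = row["Date"]
    if not found:
        raise ValueError("no row carries a Date")
    return found

def fill_date_streaming(open_rows: Callable[[], Iterable[Row]]) -> Iterator[Row]:
    """Yield rows one at a time with the Date filled in."""
    date = find_date(open_rows())  # pass 1: keeps a single string in memory
    for row in open_rows():        # pass 2: rows are never accumulated
        yield {**row, "Date": date}

# Hypothetical in-memory stand-in for a re-readable source.
sample: List[Row] = [
    {"RowId": "1", "Date": "", "Name": "a"},
    {"RowId": "2", "Date": "12/10/23", "Name": "b"},
    {"RowId": "3", "Date": "", "Name": "c"},
]
result = list(fill_date_streaming(lambda: iter(sample)))
```

Only one row and one date string are live at any time, which is the property the grouped-array approach gives up.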

In case you are wondering why I am using a Sort in the top path: it prevents a processing deadlock for input data sets larger than 1024 records, which is the total number of documents allowed to buffer between each snap. As I have it configured, the Aggregate snap waits for all input documents to be consumed before sending anything to its output. Without the Sort snap in the top path, the Copy cannot send more than 1024 documents downstream. The buffer on the top path fills with those 1024 documents, the Copy stops sending data to either path, and the Join cannot complete because the bottom path has not sent it any data yet.

You can test the deadlock by removing the Sort snap and attaching the Copy directly to the "Cartesian Join". When executed, the Copy will stop sending data after 1024 documents and the pipeline will never complete until you force it to stop. Note that if you do this using Validate Pipeline, you will need to increase User Settings / Preview Document Count to at least 1500 and make sure your input source has more than 1024 documents.
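The deadlock condition can also be reasoned about with a toy model in Python. This is only a sketch of the logic described in this post, not SnapLogic's runtime: it assumes the Join does not read the top path until the Aggregate has emitted, and `copy_can_finish` and `top_path_drains` are hypothetical names.

```python
BUFFER_LIMIT = 1024  # documents allowed to buffer between snaps, per this post

def copy_can_finish(n_docs: int, top_path_drains: bool,
                    buffer_limit: int = BUFFER_LIMIT) -> bool:
    """Toy model: can the Copy hand every document to both outputs?

    top_path_drains=True stands for a Sort on the top path, which keeps
    consuming its input. False stands for a Join that will not read the
    top path until the Aggregate on the bottom path has emitted.
    """
    waiting_on_top = 0
    for _ in range(n_docs):
        if top_path_drains:
            continue  # the Sort takes the document, so the buffer never fills
        if waiting_on_top == buffer_limit:
            # The Copy blocks here, so the Aggregate never sees end of input
            # and never emits: the pipeline is stuck.
            return False
        waiting_on_top += 1
    return True
```

In this model, `copy_can_finish(1025, top_path_drains=False)` reports a stall while the same input with `top_path_drains=True` completes, matching the behaviour described for pipelines with and without the Sort.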

Hope this helps!
