Issues with Base64 Decode - Base64.decodeAsBinary()

02-25-2019 10:30 AM

Curious if anyone has any thoughts or can see if I’m just doing something wrong…

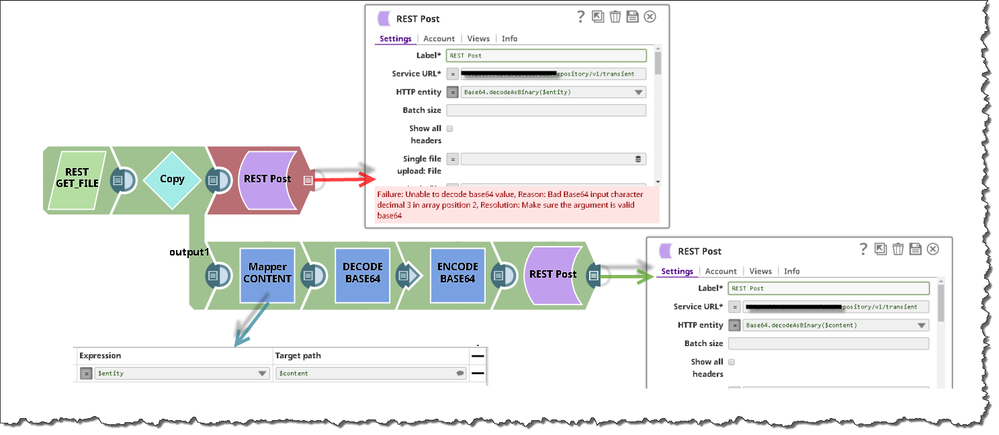

I have a pipeline that’s fetching content files from Salesforce. These are received in BASE64 format and need to be decoded to binary before posting them to an internal application. If I leave the Salesforce results as-is (BASE64) and just try to send them to the REST POST snap with an inline Base64.decodeAsBinary(), it fails with a weird error about bad Base64 input characters.

However, if I instead hook up an actual Document-to-Binary (BASE64 DECODE), then re-encode with a Binary-to-Document (BASE64 ENCODE), and then pass that to the REST POST snap, still with an inline Base64.decodeAsBinary(), it seems to work fine…

Seems weird… Obviously I would prefer to just do the first method, rather than decoding/encoding/decoding like the second method.

Any ideas?

02-26-2019 10:59 AM

Hmm… I wonder how the DECODE BASE64 on the lower branch works then?

I’ll mess with it more.

02-26-2019 11:45 AM

It might not be doing anything at all since it’s already a byte array.

03-04-2019 01:15 AM

Hi @christwr , I believe that the built-in Base64 encode/decode functions have some limitations and that the encoding/decoding doesn’t support all characters.

In the second approach, I guess the data is not being transferred as it is supposed to be, even though it’s working (please verify it).

My suggestion: use a Script snap with Python code for the Base64 encoding and decoding functionality.

Thanks.

03-06-2019 07:40 AM

What limitations have you found there to be? The functions were failing in this case because they were being passed raw binary data and not base64-encoded strings.
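
To illustrate that failure mode outside of SnapLogic, here is a minimal sketch in plain Python (standard library only). It is not SnapLogic's implementation of Base64.decodeAsBinary(); the sample bytes and the helper name `decode_if_base64` are made up for the example. It shows that a strict Base64 decoder rejects raw binary input, while the same bytes decode cleanly once they have actually been Base64-encoded.

```python
import base64
import binascii

# Hypothetical sample payload: the first eight bytes of a PNG file (raw binary).
raw = bytes([0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A])

# The same payload as a Base64-encoded byte string.
encoded = base64.b64encode(raw)

# Strict decoding of raw binary fails, because bytes such as 0x89
# are not part of the Base64 alphabet.
try:
    base64.b64decode(raw, validate=True)
    strict_decode_failed = False
except binascii.Error:
    strict_decode_failed = True


def decode_if_base64(payload: bytes) -> bytes:
    """Hypothetical helper: decode payload if it is valid Base64,
    otherwise return it unchanged (assume it is already binary)."""
    try:
        return base64.b64decode(payload, validate=True)
    except binascii.Error:
        return payload


# Both forms of the payload end up as the same raw bytes.
from_encoded = decode_if_base64(encoded)
from_raw = decode_if_base64(raw)
```

A guard like this is only a heuristic: short binary payloads can happen to consist solely of Base64 characters, so checking what the upstream snap actually emits is the more reliable fix.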
