- SnapLogic - Integration Nation

- Designing and Running Pipelines

- Querying Multiple Tables without using multiple co...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Querying Multiple Tables without using multiple connections

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-22-2019 05:35 AM

Hi All,

I am in need of pipeline(s) querying close to 20 tables (may increase in future) and consolidate and write as flat json files in the snaplogic server which will be used by different pipelines for lookup data during processing.

The idea is to use only one or as minimal connection during the execution. Hence I am not going for that simple solution of having 20 SQL Selects and consolidate and write the file.

So, I designed a child pipeline which will accept table name, required columns (need only 4 column names from all the 20 tables) as parameters and use the same in the SQL select snap to configure the table name and the output fields. The output may be n number of rows. I need to group all of them in to one document. So I thought of using Group by N snap. But the issue is since we cant set the Target Field in Group by N field dynamically, I will not be able to differentiate the table records. Is there a way to set the Target Field Dynamically from either pipeline parameter or from input document?

I am moving this to enhancement tab as this may be a useful feature to have the value dynamically populated in the Target Field of Group by N snap.

Thanks and regards,

Sri

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-23-2019 10:33 AM

You can actually set the target field dynamically using a pipeline parameter, but maybe was hard to find in the documentation.

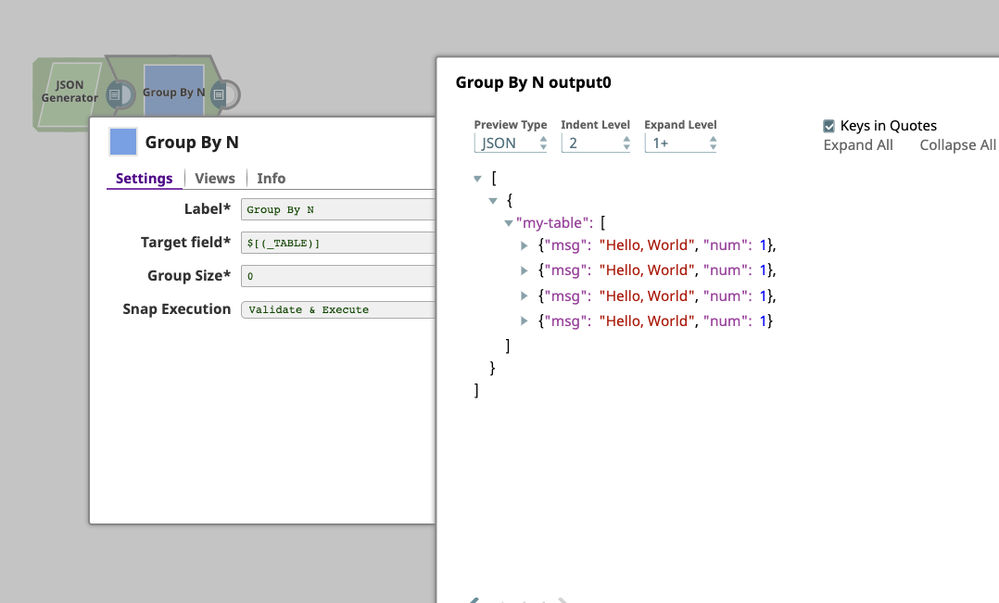

Here is an example that I think will get you on the right track. In this example, the Group By N snap is used like you mention. I set the value to the pipeline parameter _TABLE, which has the value my-table. To do so, use the syntax $[(<pipe param>)] where <pipe param> is the pipeline parameter you want to use, so in this case $[(_TABLE)]. In the preview data, you can see that all documents were aggregated under the value my-table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-26-2019 11:50 PM

Thank you very much for your response. This is indeed helpful and worked as expected. This should be added to the documentation.

Thanks and regards,

Sri

- Salesforce bulk query and bulk delete in Designing and Running Pipelines

- Slicing Data from JSON in Designing and Running Pipelines

- Mapper Snap - Preparing fixed width text datafile in Snap Packs

- Backing up two database tables to an S3 bucket as one archive in Designing and Running Pipelines

- Email Sender Snap - Multiple HTML tables in Designing and Running Pipelines